Autonomous Driving Technology for Connected Cars

Autonomous driving is recognized as an important technology for dealing with emerging societal problems. Hitachi is accelerating the pace of technology development with the aim of extending use of the technology from highways to ordinary roads. This article describes examples of work on dynamic maps, model predictive control, and an AI implementation technique, and also the prospects for the future.

Reducing traffic accidents and the deaths and injuries they cause is one of the major challenges facing the automotive sector, and there is considerable research and development activity on preventive safety technology that assists the driver and on autonomous driving technology that takes over the functions of the human driver. Achieving high levels of driving assistance and autonomous driving requires both sensing techniques that determine what is happening around the vehicle and recognition and decision-making techniques that drive the vehicle safely through the detected environment. Adding artificial intelligence (AI) to this mix opens up the potential for driving in more complex environments. This article describes work being done by Hitachi on technologies for recognition and decision-making and on the application of AI to support more advanced autonomous driving.

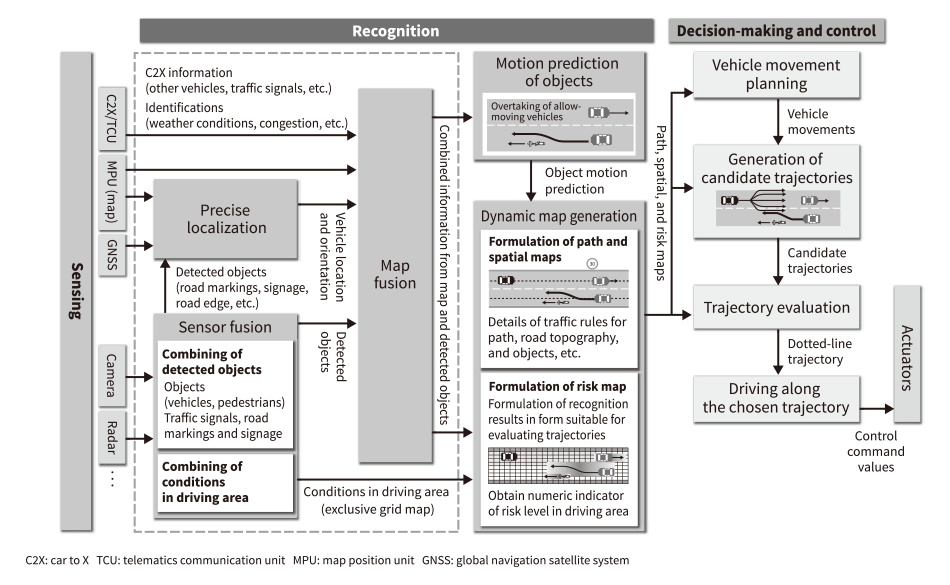

Autonomous driving is implemented using sensing, recognition, decision-making, and control (see Figure 1). Sensing means using stereo cameras, radar, or other sensors to detect objects. Recognition consists of “sensor fusion,” which combines the information about objects (vehicles, pedestrians, signage, road markings, and so on) detected by the various sensors; precise identification of the vehicle's own location; “map fusion,” which places the objects identified by sensing on the map; “motion prediction of objects,” which predicts the behavior of objects around the vehicle; and “dynamic map generation,” which creates path, spatial, and risk maps that express this information as data in a form usable for decision-making and control.

Decision-making and control, in turn, is made up of vehicle movement planning, the generation of candidate trajectories, trajectory evaluation, and driving the vehicle along the chosen trajectory. Vehicle movement planning manages the status of all aspects of autonomous driving and generates the overall vehicle movement at the lane level (such as choosing which lane to drive in). The generation of candidate trajectories is performed based on factors such as the dynamic characteristics of the vehicle. Trajectory evaluation takes account of upcoming risks to choose the best of the candidate trajectories. Driving the vehicle along the chosen trajectory is done by calculating the control command values to send to the actuators.
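
The last three stages described above (candidate generation, trajectory evaluation, and control) can be sketched as a small pipeline. This is a toy illustration under stated assumptions, not Hitachi's implementation: the function names, the smoothstep lane-shift candidates, the risk field, and the proportional steering rule are all hypothetical, and vehicle movement planning at the lane level is left out.

```python
import math
from typing import Callable, List, Tuple

Trajectory = List[Tuple[float, float]]   # (x forward, y lateral) points in metres
RiskField = Callable[[float, float], float]


def generate_candidates(lateral_offsets: List[float], length_m: float = 30.0,
                        steps: int = 10) -> List[Trajectory]:
    """Hypothetical candidate generation: one path per target lateral offset."""
    candidates = []
    for d in lateral_offsets:
        path = []
        for i in range(steps + 1):
            s = i / steps                    # normalised progress, 0..1
            blend = 3 * s**2 - 2 * s**3      # smoothstep: gentle start and end of the shift
            path.append((s * length_m, d * blend))
        candidates.append(path)
    return candidates


def evaluate(path: Trajectory, risk: RiskField) -> float:
    """Trajectory evaluation: accumulated risk along the path (lower is better)."""
    return sum(risk(x, y) for x, y in path)


def steering_command(path: Trajectory, lookahead: int = 3, gain: float = 0.1) -> float:
    """Toy control: proportional command toward the lateral position of a look-ahead point."""
    _, y = path[lookahead]
    return gain * y


def decide_and_control(lateral_offsets: List[float],
                       risk: RiskField) -> Tuple[Trajectory, float]:
    candidates = generate_candidates(lateral_offsets)
    best = min(candidates, key=lambda p: evaluate(p, risk))
    return best, steering_command(best)


def obstacle_risk(x: float, y: float) -> float:
    """Hypothetical risk field: an obstacle 20 m ahead, slightly left of lane centre."""
    return math.exp(-((x - 20.0) ** 2 + (y - 0.5) ** 2) / 4.0)


best_path, command = decide_and_control([-3.5, 0.0, 3.5], obstacle_risk)
print(best_path[-1], round(command, 4))
```

Keeping the stages as separate functions mirrors the block structure of Figure 1: any one stage (for example, the evaluation) can be replaced without touching the others.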

Figure 1 - Block Diagram of Autonomous Driving System. Autonomous driving is implemented using sensing, recognition, decision-making, and control.

Extending autonomous driving to ordinary roads and reaching level 4 or level 5 automation will involve situations that are difficult to handle with conventional rule-based control, so new intelligent technologies such as deep learning and model predictive control will need to be adopted. Technical innovation in recent years has enabled deep learning to achieve better-than-human sensing and identification in image processing applications, as well as the prediction of the movements of nearby vehicles and other objects. It has also become possible to generate more appropriate vehicle paths than rule-based designs can provide, for example by using model predictive control to take account of the predicted movements of surrounding objects when generating paths.

These calculations tend to impose a heavier computational load than past methods, but embedded devices capable of performing them have become available and are starting to be installed in vehicles equipped for autonomous driving. However, because so many different deep learning algorithms exist, appropriate methods need to be selected and their computational load reduced before this technology can be put into practical use. Although these techniques are still at the research and development phase, they are expected to be crucial to autonomous driving.

Vehicles equipped for autonomous driving need to recognize with high accuracy what is happening in the driving environment (other vehicles, intersections, and so on) based on data from sensors and maps, and to pass this information to the autonomous decision-making (control) functions in a suitable data representation. A typical example of a data representation already in use is the Advanced Driver Assistance Systems Interface Specification (ADASIS), an industry-standard interface for providing static digital maps to advanced driver assistance systems (ADASs). This standard has been applied to the development of longitudinal speed control techniques such as adaptive cruise control (ACC). It represents information about the surrounding environment as positions relative to the road ahead (the vehicle path). However, it is not yet suitable for autonomous driving that includes lateral control (i.e., steering control), because it has yet to be applied to representing detailed topography at the lane level. Moreover, because a detailed lane-level representation increases data size and complexity of use, efficient and easy-to-use ways of representing this data will be needed so that it can be handled by electronic control units (ECUs) with limited computing and memory capacity.

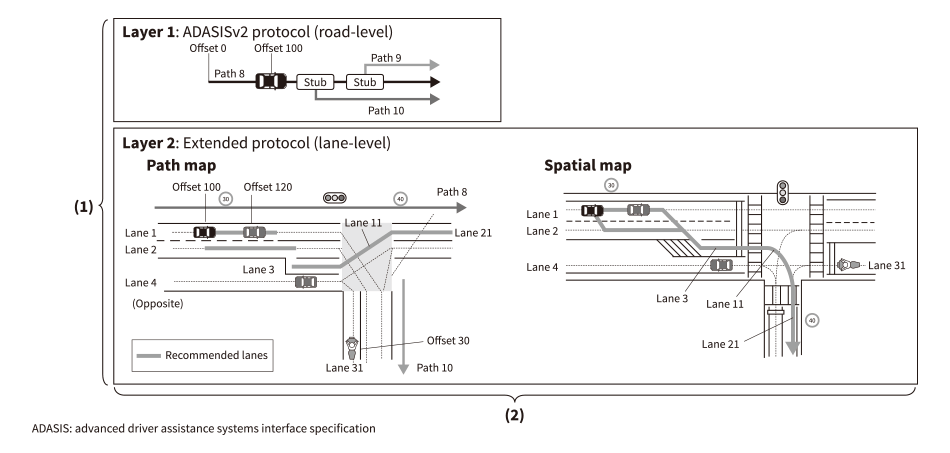

Accordingly, Hitachi has developed a hierarchical hybrid data representation method to efficiently and flexibly provide the detailed lane-level information about the surrounding environment that is needed for autonomous driving. The method has the following two main features (see Figure 2).

(1) Two-layer structure: Layer 1 uses the ADASIS protocol, which is already widely deployed in actual products, while layer 2 provides the additional representation required for autonomous driving. This supports autonomous driving while preserving compatibility with existing products that use the ADASIS protocol.

(2) Two coordinate systems: Coordinates relative to the vehicle path provide a quick way to assess the surrounding environment at the macroscopic level, while relative spatial coordinates enable a precise microscopic assessment. Being able to choose between the two representations as needed facilitates the flexible development of diverse ADAS applications, including autonomous driving.
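
The two layers and two coordinate systems described above can be pictured as a simple container with one query per coordinate system. This is a minimal sketch only: the class and field names (`RoadAttribute`, `LaneElement`, `DynamicMap`, `"speed_limit"`) are hypothetical, and it does not reproduce the actual ADASIS message format. It shows how a legacy consumer that reads only layer 1 keeps working when layer 2 is present.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class RoadAttribute:
    """Layer 1 (road level): ADASIS-style item located by its offset along the vehicle path."""
    path_offset_m: float   # distance ahead along the vehicle path (path-relative coordinate)
    kind: str              # hypothetical attribute names, e.g. "speed_limit"
    value: float


@dataclass
class LaneElement:
    """Layer 2 (lane level): lane geometry in spatial coordinates relative to the vehicle."""
    lane_id: int
    boundary_xy: List[Tuple[float, float]]   # (x forward, y left) in metres


@dataclass
class DynamicMap:
    layer1: List[RoadAttribute] = field(default_factory=list)
    layer2: List[LaneElement] = field(default_factory=list)

    def attributes_ahead(self, kind: str, horizon_m: float) -> List[RoadAttribute]:
        """Macroscopic query in path-relative coordinates."""
        return [a for a in self.layer1
                if a.kind == kind and 0.0 <= a.path_offset_m <= horizon_m]

    def lanes_near(self, radius_m: float) -> List[LaneElement]:
        """Microscopic query in relative spatial coordinates."""
        r2 = radius_m * radius_m
        return [e for e in self.layer2
                if any(x * x + y * y <= r2 for x, y in e.boundary_xy)]


def legacy_consumer(m: DynamicMap) -> List[RoadAttribute]:
    """An existing ADAS function that only understands layer 1 simply ignores layer 2."""
    return m.attributes_ahead("speed_limit", 2000.0)


dmap = DynamicMap(
    layer1=[RoadAttribute(800.0, "speed_limit", 80.0),
            RoadAttribute(2500.0, "speed_limit", 60.0)],
    layer2=[LaneElement(1, [(0.0, 1.75), (50.0, 1.9)]),
            LaneElement(2, [(400.0, 5.0)])],
)
print([a.value for a in legacy_consumer(dmap)], [e.lane_id for e in dmap.lanes_near(100.0)])
```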

The two coordinate systems also differ in the range they must cover. Coordinates relative to the vehicle path, which are mainly used for longitudinal (long-term) control, must provide information over a wide area on the order of kilometers, whereas relative spatial coordinates need to cover at most several hundred meters or so, given the high precision required for lateral control. By exploiting this difference and limiting the information provided in relative spatial coordinates to the immediate vicinity, the amount of data passed to the decision-making (control) functions of autonomous driving can be considerably reduced.
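
A back-of-the-envelope calculation makes the size of that reduction concrete. Every number here is an illustrative assumption rather than a figure from the article: lane boundaries sampled every 1 m, five boundaries for a four-lane road, each point stored as two 32-bit floats, a 2 km path horizon, and a 300 m vicinity for lane-level detail.

```python
def lane_geometry_bytes(range_m: float, spacing_m: float = 1.0,
                        boundaries: int = 5, bytes_per_point: int = 8) -> int:
    """Bytes needed to send lane-boundary points out to range_m ahead.

    Illustrative assumptions only: boundaries sampled every spacing_m metres,
    5 boundaries for a 4-lane road, each point stored as two 32-bit floats (x, y).
    """
    points_per_boundary = int(range_m / spacing_m)
    return points_per_boundary * boundaries * bytes_per_point


full = lane_geometry_bytes(2000.0)   # lane-level detail over the whole path horizon
near = lane_geometry_bytes(300.0)    # lane-level detail only in the vicinity
print(full, near, f"{1 - near / full:.0%} less data")  # 80000 12000 85% less data
```

The saving scales with the ratio of the two ranges, so it holds whatever sampling density is actually used.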

Figure 2 - Structure of Dynamic Map (Extension of ADASISv2 Protocol). A two-tier structure is used that is split between an abstracted representation at the road level (layer 1) and a detailed representation at the lane level (layer 2) (1). Similarly, two different coordinate systems are used to represent information about the surrounding environment using, respectively, coordinates relative to the vehicle path and relative spatial coordinates (2).

Expanding the use of autonomous driving from highways to ordinary roads will require advanced recognition and decision-making techniques that include not only the sensing of pedestrians, vehicles, and other objects, but also the ability to predict their movements. It will also require the ability to take account of these movement predictions when making turns at intersections so as to select a path and speed that are both safe and comfortable.

Past methods have mainly relied on rule-based algorithms that enumerate the potential movements of nearby objects based on what is happening around the vehicle and then drive the vehicle so that it can cope with these possibilities. Unfortunately, the number of combinations of potential movements by objects on ordinary roads is so large that designing rules to cover all of them without omission is impractical. Hitachi has therefore been looking at using AI to enable autonomous driving in complex environments.
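
The combinatorial problem can be quantified with a simple count. Assuming, purely for illustration, that each nearby object can do one of five coarse maneuvers, a rule set must anticipate five to the power of the number of objects joint behaviours, before timing or speed variations are even considered. The maneuver list is hypothetical.

```python
from itertools import product

# Hypothetical, deliberately coarse set of maneuvers for each nearby object.
MANEUVERS = ["keep", "accelerate", "brake", "turn_left", "turn_right"]


def scenario_count(num_objects: int, maneuvers_per_object: int = len(MANEUVERS)) -> int:
    """Number of joint behaviours a rule set would have to anticipate."""
    return maneuvers_per_object ** num_objects


# Brute-force enumeration agrees with the formula for a small case.
assert len(list(product(MANEUVERS, repeat=3))) == scenario_count(3)

for n in (2, 5, 10, 20):
    print(n, scenario_count(n))
# 2 25
# 5 3125
# 10 9765625
# 20 95367431640625
```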

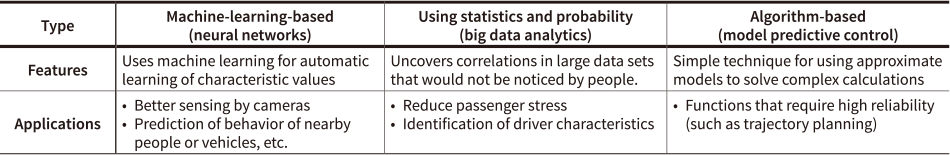

AI can be broadly divided into three different techniques, and Hitachi is investigating the best ways of using each of these (see Table 1).

The first is the neural network. Hitachi is investigating techniques for detecting nearby objects from camera video with greater precision, and for performing learning on the movements of other nearby vehicles and pedestrians in order to predict their future movements. In parallel with this study of algorithms, other work is aimed at simplifying (pruning) the resulting networks. This is explained further in the following section.

The second technique is big data analytics. Hitachi has developed its own AI called Hitachi AI Technology/H (AT/H). AT/H can automatically identify the elements that correlate strongly with key performance indicators (KPIs) in large and complex data sets. One example is a study that is using AT/H to analyze the movements of a vehicle under manual control and the surrounding conditions so that the findings can be incorporated into vehicle control in order to achieve reliable and comfortable autonomous driving that is closer to that of a human driver.
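
AT/H is Hitachi's own technology and its algorithms are not described here, so the sketch below substitutes a plain Pearson correlation ranking, a much simpler generic technique, only to illustrate the idea of ranking logged driving factors by how strongly they relate to a KPI. The feature names, the comfort rating, and the data are all hypothetical, and correlation alone does not establish cause.

```python
import math
from typing import Dict, List, Tuple


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


def rank_factors(features: Dict[str, List[float]],
                 kpi: List[float]) -> List[Tuple[str, float]]:
    """Rank candidate factors by the strength (absolute value) of their correlation with the KPI."""
    scores = [(name, pearson(values, kpi)) for name, values in features.items()]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical log of five manual-driving episodes with a ride-comfort rating as the KPI.
comfort_kpi = [5, 4, 3, 2, 1]
logged = {
    "gap_to_lead_m": [30, 28, 25, 20, 15],
    "peak_jerk_mps3": [0.5, 1.0, 1.5, 2.0, 2.5],
    "cabin_temp_c": [21, 19, 22, 19, 23],
}
for name, r in rank_factors(logged, comfort_kpi):
    print(f"{name:15s} {r:+.2f}")
```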

The third form of AI is model predictive control. This is described in more detail below.

Table 1 - Uses for AI Targeted by Hitachi. AI can be broadly divided into three types, and Hitachi is investigating the best uses for each.

Figure 3 - Neural Network Pruning Technique. The technique reduces the computational load while maintaining sensing accuracy by omitting calculations in which the weighting coefficient is small.

Figure 3 (a) shows a simple representation of how a node works. As shown in the figure, the input signals (X1, X2, and X3) are multiplied by their weighting coefficients (W1, W2, and W3) and the sum of the results is output. Figure 3 (b) shows an example of a three-layer neural network using these nodes. The neural network requires a large number of multiplications and additions for each node, making it difficult to implement in real time on an ECU with limited computing and memory capacity.
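
The node of Figure 3 (a) and the network of Figure 3 (b) can be written in a few lines, counting the multiplications as the input passes through. The weights are hypothetical, and, following the simplified node in the figure, no activation function is applied; practical networks normally apply a nonlinear activation after each weighted sum.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]   # one row of weights per node in the layer


def node(inputs: Vector, weights: Vector) -> float:
    """Figure 3 (a): multiply each input by its weight and output the sum."""
    return sum(x * w for x, w in zip(inputs, weights))


def forward(x: Vector, layers: List[Matrix]) -> Tuple[Vector, int]:
    """Figure 3 (b): pass x through fully connected layers, counting multiplications."""
    mults = 0
    for weights in layers:
        mults += sum(len(row) for row in weights)
        x = [node(x, row) for row in weights]
    return x, mults


# Hypothetical weights: 3 inputs -> 4 hidden nodes -> 2 outputs.
W1 = [[0.5, -0.02, 0.01], [0.3, 0.8, -0.5], [0.9, 0.0, 0.01], [-0.01, 0.4, 0.6]]
W2 = [[1.0, 0.5, -0.3, 0.01], [0.02, -1.0, 0.7, 0.4]]
outputs, mults = forward([1.0, 2.0, 3.0], [W1, W2])
print([round(v, 4) for v in outputs], mults)
```

Even this tiny network needs 20 multiplications per input; the count grows with the product of adjacent layer widths, which is what makes large networks costly on an ECU.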

To overcome this problem, Hitachi has been investigating a pruning technique that reduces the computational load, while keeping the impact on sensing accuracy to a minimum, by omitting calculations in which the weighting coefficient is small. Figure 3 (c) shows an example of a pruned network. The technique provides an efficient way to implement on ECUs the large neural networks needed for high-precision autonomous driving.
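
Magnitude-based pruning of the kind described above can be sketched by dropping every connection whose weight magnitude falls below a threshold and storing only the survivors. The weights and the 0.05 threshold are hypothetical. The sketch compares the multiplication count and the output error against the unpruned network. Accuracy is not guaranteed in general: a small weight multiplied by a large activation can still matter, which is why, in common practice, the effect of pruning is measured and networks are often fine-tuned afterwards.

```python
from typing import List, Tuple

Matrix = List[List[float]]
SparseMatrix = List[List[Tuple[int, float]]]   # per node: (input index, weight) pairs kept


def prune(weights: Matrix, threshold: float) -> SparseMatrix:
    """Keep only connections whose weight magnitude is at least the threshold."""
    return [[(i, w) for i, w in enumerate(row) if abs(w) >= threshold] for row in weights]


def dense_layer(x: List[float], weights: Matrix) -> List[float]:
    return [sum(xi * w for xi, w in zip(x, row)) for row in weights]


def sparse_layer(x: List[float], rows: SparseMatrix) -> List[float]:
    # Only the surviving connections are multiplied, so pruned weights cost nothing.
    return [sum(x[i] * w for i, w in row) for row in rows]


# Hypothetical 3-4-2 network weights and input.
W1 = [[0.5, -0.02, 0.01], [0.3, 0.8, -0.5], [0.9, 0.0, 0.01], [-0.01, 0.4, 0.6]]
W2 = [[1.0, 0.5, -0.3, 0.01], [0.02, -1.0, 0.7, 0.4]]
x = [1.0, 2.0, 3.0]

P1, P2 = prune(W1, 0.05), prune(W2, 0.05)
dense_out = dense_layer(dense_layer(x, W1), W2)
pruned_out = sparse_layer(sparse_layer(x, P1), P2)

dense_mults = sum(len(row) for row in W1 + W2)
pruned_mults = sum(len(row) for row in P1 + P2)
max_error = max(abs(a - b) for a, b in zip(dense_out, pruned_out))
print(dense_mults, pruned_mults, round(max_error, 4))  # 20 13 0.0268
```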

Model predictive control uses a model to predict the control output x that results from a control input u, and treats control as an optimization problem: it searches for the control input u that minimizes a cost function H, representing control performance, over a fixed time horizon (t). Figure 4 shows an example of model predictive control used to generate vehicle trajectories. It is made up of the generation of candidate trajectories and the cost function calculation.
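
Written out in discrete time, the optimization just described takes the form below. The symbols f (the vehicle model that predicts the next state), Δt (the time step), and the division of the horizon t into n steps are assumptions introduced for illustration; H and x(k) follow the notation used for the cost function in the next paragraphs.

```latex
\begin{aligned}
\min_{u(0),\,\dots,\,u(n-1)} \quad & H\bigl(x(0),\,\dots,\,x(n)\bigr) \\
\text{subject to} \quad & x(k+1) = f\bigl(x(k),\,u(k)\bigr), \qquad k = 0, 1, \dots, n-1, \\
& x(0) = \text{current vehicle state}, \qquad t = n\,\Delta t .
\end{aligned}
```

In the usual receding-horizon scheme, only the first part of the optimized input sequence is applied, and the optimization is repeated at the next control cycle from the newly measured state.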

The generation of candidate trajectories searches for the optimal trajectory using an optimization solver that proposes vehicle trajectories and tests each of them using the cost function calculation. Optimization solvers can be broadly divided into iterative methods, which use the derivative of the cost function, and heuristic methods, which search for the solution directly by trial and error. Iterative methods impose a lighter computational load but risk getting stuck on a local solution that is not the best possible. Heuristic methods, in contrast, can search for the optimal solution over a wide range but impose a heavy computational load because they work by trial and error. Fortunately, advances in computers in recent years have opened up the possibility of performing these calculations fast enough for real-time control. Hitachi therefore compared various heuristic methods (genetic algorithms, particle swarm optimization, and the artificial bee colony algorithm) for this purpose and selected the artificial bee colony algorithm on the basis of its ability to handle a large number of variables (scalability), its ability to avoid local solutions, its execution speed, and its ease of parallel implementation.
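
For readers unfamiliar with the artificial bee colony (ABC) algorithm, the sketch below follows its textbook structure: employed bees perturb each food source (candidate solution), onlooker bees revisit sources in proportion to their fitness, and scouts replace sources that have stopped improving. It is a generic, single-threaded illustration, not Hitachi's implementation; the parameter values and the toy cost over four hypothetical waypoint offsets are assumptions.

```python
import random
from typing import Callable, List, Tuple

Bounds = List[Tuple[float, float]]


def abc_minimize(cost: Callable[[List[float]], float], bounds: Bounds,
                 n_sources: int = 20, limit: int = 30, cycles: int = 200,
                 seed: int = 0) -> Tuple[List[float], float]:
    """Minimal artificial bee colony minimiser over a box-bounded search space."""
    rng = random.Random(seed)
    dim = len(bounds)

    def random_source() -> List[float]:
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    def fitness(c: float) -> float:
        # Standard ABC mapping from cost to fitness (higher is better).
        return 1.0 / (1.0 + c) if c >= 0 else 1.0 + abs(c)

    sources = [random_source() for _ in range(n_sources)]
    costs = [cost(s) for s in sources]
    trials = [0] * n_sources
    best_i = min(range(n_sources), key=lambda i: costs[i])
    best, best_cost = sources[best_i][:], costs[best_i]

    def explore(i: int) -> None:
        """Perturb one coordinate of source i relative to a random partner source."""
        k = rng.choice([m for m in range(n_sources) if m != i])
        j = rng.randrange(dim)
        lo, hi = bounds[j]
        cand = sources[i][:]
        cand[j] += rng.uniform(-1.0, 1.0) * (cand[j] - sources[k][j])
        cand[j] = min(max(cand[j], lo), hi)
        c = cost(cand)
        if c < costs[i]:                                # greedy selection
            sources[i], costs[i], trials[i] = cand, c, 0
        else:
            trials[i] += 1

    def record_best() -> None:
        nonlocal best, best_cost
        for i in range(n_sources):
            if costs[i] < best_cost:
                best, best_cost = sources[i][:], costs[i]

    for _ in range(cycles):
        for i in range(n_sources):                      # employed bees
            explore(i)
        weights = [fitness(c) for c in costs]           # onlookers favour good sources
        for i in rng.choices(range(n_sources), weights=weights, k=n_sources):
            explore(i)
        record_best()
        for i in range(n_sources):                      # scouts replace exhausted sources
            if trials[i] > limit:
                sources[i] = random_source()
                costs[i] = cost(sources[i])
                trials[i] = 0
        record_best()
    return best, best_cost


# Hypothetical use: lateral offsets (m) of 4 waypoints; the toy cost prefers offsets
# near 1.5 m (e.g. clear of an obstacle) and penalises zig-zag between waypoints.
def toy_trajectory_cost(offsets: List[float]) -> float:
    clearance = sum((d - 1.5) ** 2 for d in offsets)
    smoothness = sum((b - a) ** 2 for a, b in zip(offsets, offsets[1:]))
    return clearance + smoothness


best, best_cost = abc_minimize(toy_trajectory_cost, [(-3.5, 3.5)] * 4)
print([round(d, 2) for d in best], best_cost)
```

Each employed and onlooker step evaluates the cost independently, which is why the algorithm lends itself to the parallel implementation mentioned above.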

The cost function H calculates the suitability of each trajectory, taking as its inputs the candidate future vehicle locations x(k) (k = 0, 1, …, n) output by the generation of candidate trajectories. H is made up of two terms: H1, indicating the likelihood of a collision with a moving object, and H2, indicating the level of ride comfort. The collision likelihood H1 is obtained by integrating the risk map output by the function for motion prediction of objects over the region S occupied by the vehicle. Because ride comfort is considered better the lower the vehicle's acceleration and rate of change of acceleration (jerk), H2 is calculated by integrating the squares of these two quantities over time. In this way, the calculation can determine a trajectory that avoids collisions and maintains ride comfort, even in a complex environment containing a number of moving objects.
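
The two terms can be approximated numerically as follows, as a sketch under several assumptions not stated in the article: H1 sums the integral over S for each step k, with S approximated as an axis-aligned rectangle (heading ignored) and integrated by the midpoint rule; acceleration and jerk come from finite differences of x(k); and H combines the terms as a weighted sum, H = w1·H1 + w2·H2, with arbitrary weights. The risk map interface `risk(x, y, k)` and the moving-object model are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]
RiskMap = Callable[[float, float, int], float]   # risk at (x, y) for time step k


def h1_collision(traj: List[Point], risk: RiskMap, half_len: float = 2.0,
                 half_wid: float = 1.0, cell: float = 0.5) -> float:
    """Integrate the risk map over the region S occupied by the vehicle at each x(k),
    summed over k. S is approximated by an axis-aligned rectangle (heading ignored)."""
    nx, ny = round(2 * half_len / cell), round(2 * half_wid / cell)
    total = 0.0
    for k, (cx, cy) in enumerate(traj):
        for i in range(nx):
            for j in range(ny):
                px = cx - half_len + (i + 0.5) * cell      # midpoint-rule sample point
                py = cy - half_wid + (j + 0.5) * cell
                total += risk(px, py, k) * cell * cell
    return total


def h2_comfort(traj: List[Point], dt: float) -> float:
    """Integrate squared acceleration and squared jerk over time (finite differences)."""
    acc = [tuple((traj[k + 1][d] - 2 * traj[k][d] + traj[k - 1][d]) / dt ** 2 for d in (0, 1))
           for k in range(1, len(traj) - 1)]
    jerk = [tuple((a1[d] - a0[d]) / dt for d in (0, 1)) for a0, a1 in zip(acc, acc[1:])]
    squared = sum(ax * ax + ay * ay for ax, ay in acc) + sum(jx * jx + jy * jy for jx, jy in jerk)
    return squared * dt


def cost_h(traj: List[Point], risk: RiskMap, dt: float,
           w1: float = 1.0, w2: float = 0.01) -> float:
    """H = w1*H1 + w2*H2; the weights are illustrative assumptions."""
    return w1 * h1_collision(traj, risk) + w2 * h2_comfort(traj, dt)


def moving_object(x: float, y: float, k: int) -> float:
    """Hypothetical risk map: a slower object starting 5 m ahead in the same lane."""
    return math.exp(-((x - (5.0 + 0.5 * k)) ** 2 + y ** 2))


# x(k) for k = 0..10 at 0.1 s steps (10 m/s).
dt = 0.1
stay = [(float(k), 0.0) for k in range(11)]
shifted = [(float(k), 3.5) for k in range(11)]
change = [(float(k), 3.5 * (3 * (k / 10) ** 2 - 2 * (k / 10) ** 3)) for k in range(11)]
for name, traj in (("stay", stay), ("shifted", shifted), ("change", change)):
    print(name, round(cost_h(traj, moving_object, dt), 3))
```

Staying in lane costs nothing in comfort but accumulates risk as the vehicle closes on the object, while the lane change trades some H2 for a much lower H1; the weights decide where that balance falls.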

Figure 4 - Generation of Trajectories Using Model Predictive Control. The generation of trajectories using model predictive control includes both generating candidate vehicle trajectories and calculating a cost function.

As autonomous driving and driving assistance become more advanced and their scope of application broadens, the systems used for driving will also need to provide a high level of safety. By developing the recognition and decision-making techniques and intelligent technologies described in this article, together with evaluation and testing techniques, Hitachi intends to continue working toward the early implementation of autonomous driving systems that can help overcome societal challenges.