The governance and ethics of AI are key issues for Hitachi, which recognizes the significant societal impact associated with the use of this technology across its extensive business domains. Many different aspects of AI ethics have already been highlighted, among them safety, privacy, fairness, transparency and accountability, and security. Meanwhile, not only the principles, but also their practical implementation are rapidly becoming an area of significant activity, examples of which include the publication of draft EU regulations on AI and METI’s AI Governance Guidelines. Hitachi published its own “Principles guiding the ethical use of AI in Social Innovation Business” in February 2021. Hitachi is also using these principles as a basis for the practical implementation of initiatives to establish governance across all steps including research, PoC, product development, and maintenance. This article describes the ideas that underpin Hitachi’s AI Ethical Principles and gives an overview of their practical implementation.

Artificial intelligence (AI) is becoming increasingly important as a source of innovation, being adopted in a wide range of fields including railways, energy, healthcare, manufacturing, and finance. Meanwhile, the challenges facing society are becoming severe and complex, including environmental problems, how to improve resilience, and how to enhance people’s quality of life (QoL) at a time when their values are becoming ever more diverse. AI is expected to play a significant role in solving these societal challenges and will be used across widespread areas of society in the future.

With the launch of Lumada, Hitachi is accelerating the use of digital technologies in its Social Innovation Business. In Lumada, AI is an essential driving force behind the creation of new value. Hitachi’s Social Innovation Business often deals with social infrastructure or services that have a strong public interest component, and the impact of AI on society will be considerable. If society is to be both fair and trustworthy, it will require that AI be deployed, implemented, and operated appropriately based on an accurate understanding of its behavior and the associated risks.

Hitachi published its “Principles guiding the ethical use of AI in Social Innovation Business” (hereafter “AI Ethical Principles”) in February 2021 as a corporate policy for development and social implementation of AI(1). This was also accompanied by the publication of a white paper describing Hitachi’s initiatives in the area of AI ethics. These set out the standards of conduct and items to be addressed that are unique to Hitachi in the operation of its Social Innovation Business.

Hitachi has since its founding adopted a human-centric perspective. At Hitachi Mine, for example, the company’s birthplace, a 156-m-high chimney was constructed for the smelter in 1915 to alleviate smoke pollution. At the time, it was the world’s tallest chimney. Observation points were also built in the nearby hills. The outcome of this was a management system that linked the state of the atmosphere with smelter production while also being open to the public. Hitachi was also among the first to incorporate ethics into its medical and neuroscientific research, becoming the first medical equipment manufacturer to establish an ethical review committee in 2000. Likewise with its consideration for privacy protection in the use of data, Hitachi in July 2014 appointed a personal data manager and set up a privacy protection advisory committee.

Hitachi’s corporate mission is to “contribute to society through the development of superior, original technology and products,” and as such ethics is deeply embedded in its culture, providing a steadfast underpinning to its development of technologies and products across all fields, not just AI. Hitachi’s AI Ethical Principles are an expression of this in the context of AI and will serve to unify understanding of this topic across all staff and to guide the establishment of in-house governance systems.

The next section describes Hitachi’s AI Ethical Principles and the ideas that underpin them. This is followed by a section explaining how they will be put into practice.

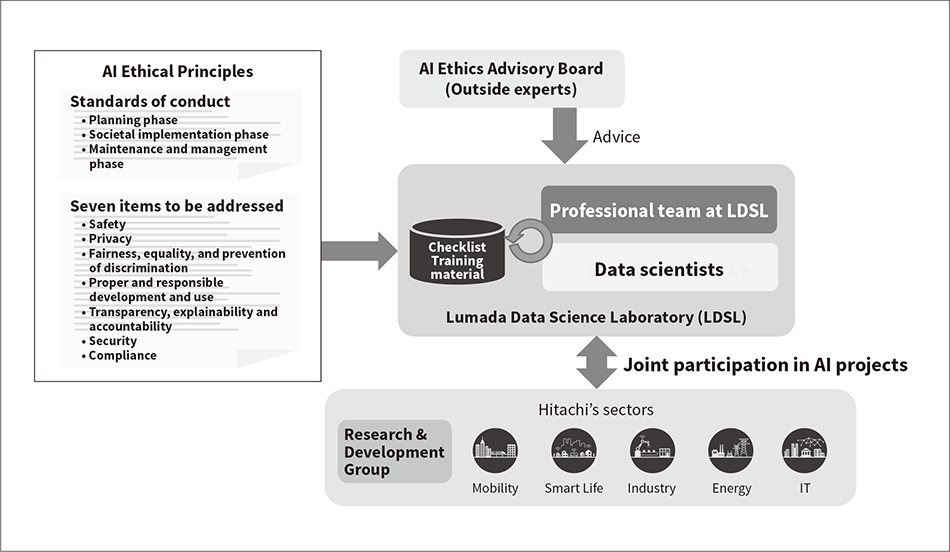

Figure 1: Overview of Hitachi’s Approach to AI Ethics. Hitachi’s AI Ethical Principles stipulate standards of conduct across the “planning,” “societal implementation,” and “maintenance and management” phases of AI, and also seven “items to be addressed” that apply to all three phases.

The Principles of Human-centric AI Society was published in Japan in March 2019. Internationally, the Organisation for Economic Co-operation and Development (OECD) adopted its Principles on Artificial Intelligence in May 2019 and the G20 adopted its own AI principles that draw on the OECD principles in June 2019. Various such national and corporate initiatives are also summarized in the Report 2021 of the Conference toward AI Network Society(2). The problem of AI ethics has global recognition and the issues are being clarified through international debate that incorporates a diversity of viewpoints.

Hitachi, meanwhile, has formulated its own AI Ethical Principles that are informed by this international debate while also taking account of its corporate culture and the nature of its business that deals in social innovation. The principles identify standards of conduct in the three phases, the “planning phase,” “societal implementation phase,” and “maintenance and management phase,” as well as seven “items to be addressed” that apply to all three phases (see Figure 1).

The process of formulating the AI Ethical Principles involved discussion across more than 10 business divisions to ensure that the outcome was in harmony with Hitachi’s culture and operations. Trial risk assessments based on the principles were also undertaken by Hitachi research and development divisions in Japan, USA, Asia, and Europe to verify that they were workable in practice. Through this process, Hitachi was able to formulate principles that expressed concepts shared across the different business divisions and geographical regions and did so in a common language.

Training material and a risk assessment checklist linked to the principles were also developed to facilitate the operation of AI governance (see Figure 1). The following sections describe the standards of conduct and items to be addressed stipulated in the AI Ethical Principles.

Hitachi’s AI Ethical Principles identify seven “items to be addressed” that apply across the planning, societal implementation, and maintenance and management phases. These are: “Safety,” “Privacy,” “Fairness, equality, and prevention of discrimination,” “Proper and responsible development and use,” “Transparency, explainability and accountability,” “Security,” and “Compliance.” They are also further broken down into specific checks. The phases of AI development include devising innovative new ideas, research and development, PoC, and solution and system development. The intention is that projects should consider these items at each of the different phases to verify that the company is acting in accordance with the AI Ethical Principles. The seven items can also be used as a basis for gathering and classifying high-risk cases that will be of use in areas like training or the formulation of risk mitigation measures. In this way, the seven items to be addressed will be central to Hitachi’s efforts to put AI ethics into practice.

Hitachi has established a professional team of AI ethics specialists at the Lumada Data Science Lab. (LDSL), its center for AI and analytics. The team serves as a center of excellence (CoE) for AI ethics at Hitachi and includes data scientists and other staff with expertise in areas like privacy protection. It is responsible for establishing practices that facilitate compliance with the AI Ethical Principles as well as the continuous improvement of AI governance. The LDSL plays a central role in Hitachi’s staff training for maintaining AI ethics and the research and development of relevant technologies. The LDSL is also a place where AI ethics are put into practice, being host to a large number of the leading co-creation projects undertaken by Hitachi’s Social Innovation Business (see Figure 1).

Hitachi has also established an AI Ethics Advisory Board made up of outside experts to enable further improvements in its work on AI ethics based on objective assessment and with reference to views from outside the company. The advisory board is able to offer advice not only on the technology of AI, but also from other perspectives such as that of consumers or social science.

Hitachi conducts risk assessments on individual projects using a checklist developed from the items to be addressed identified in the AI Ethical Principles, which include a focus on safety, fairness, transparency, explainability, and so on. This provides an efficient way to identify the risks that arise in AI research and development or in businesses that use AI. The checklist results provide a basis for advice on what to watch out for in project execution and also assist with finding countermeasures. Managers are also able to refer to the risks uncovered by the checklist when deciding whether or not work should proceed. The AI Ethics Advisory Board of outside experts, meanwhile, is available to discuss specific projects and provide advice as needed.

Conducting these risk assessments also facilitates the collection of information on wide-ranging AI research and development and on projects that utilize the technology, and this provides a basis for governance. Moreover, working through the risk assessment process allows for the collation and consolidation of knowledge about AI ethics.

The checklist-based risk assessments are conducted at various phases of the AI lifecycle. In the research and development phase, for example, they are used to screen research projects at the outset to verify the risks and whether it is appropriate to proceed. This checklist screening is conducted for more than 600 research projects each year. Checklist-based risk management is also used during commercial deployment, where it is conducted for each of the phases from winning the customer order to development. Approximately 100 projects are reviewed in this way each year.
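As a rough illustration of how a checklist organized around the seven items to be addressed might be recorded and summarized, the following minimal Python sketch may help. The item names come from the AI Ethical Principles as quoted in this article; everything else (the `CheckResult` record, the `summarize` function, and the example question wording) is a hypothetical assumption and does not represent Hitachi’s actual checklist or tooling.

```python
from dataclasses import dataclass

# The seven "items to be addressed" named in the AI Ethical Principles.
ITEMS = (
    "Safety",
    "Privacy",
    "Fairness, equality, and prevention of discrimination",
    "Proper and responsible development and use",
    "Transparency, explainability and accountability",
    "Security",
    "Compliance",
)


@dataclass
class CheckResult:
    """One answered check (hypothetical record layout)."""
    item: str         # must be one of ITEMS
    question: str     # illustrative wording, not the real checklist text
    risk_found: bool  # True if the reviewer flagged a risk
    note: str = ""    # free-text advice or countermeasure


def summarize(results):
    """Return (flagged checks grouped by item, items with no check recorded)."""
    flagged = {}
    covered = set()
    for r in results:
        if r.item not in ITEMS:
            raise ValueError(f"unknown item: {r.item}")
        covered.add(r.item)
        if r.risk_found:
            flagged.setdefault(r.item, []).append(r)
    missing = [item for item in ITEMS if item not in covered]
    return flagged, missing
```

A reviewer could pass the answered checks for one project to `summarize` and hand the flagged entries to the manager deciding whether work should proceed, while the list of uncovered items shows where the assessment is still incomplete.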

Growth in the development of services, systems, and products that use AI across Hitachi’s businesses has prompted it to strengthen its AI-specific quality assurance practices. This has involved the formulation of development and quality assurance guidelines specifically for AI that are based on the Machine Learning Quality Management Guideline (AIQM)(4) of the National Institute of Advanced Industrial Science and Technology and Hitachi’s own AI Ethical Principles. The guidelines have been made available throughout Hitachi Group. The guidelines are a systematic compilation of topics that include the basic philosophies of AI quality assurance, how to develop AI models in the PoC and development phases, monitoring of prediction accuracy during operation, how to handle retraining if accuracy deteriorates, the importance of safety in relation to AI ethics, privacy protection, and achieving fairness. Along with the guidelines being available to developers during the development of systems and products that use AI, they are also used to conduct reviews where they augment the wider quality assurance process by providing a particular focus on AI.

An in-depth understanding of the AI technology is essential for complying with the AI Ethical Principles. Hitachi has established its own Data Science Community that brings together data scientists from across Hitachi Group to share their individual knowledge and know-how. The community is playing a central role in the training of approximately 3,000 data scientists with expertise in AI and data science. By training up this human capital to have an in-depth understanding of AI, Hitachi is establishing the foundations for its ethical use of AI.

Along with this AI training, Hitachi is also conducting practical training in AI ethics and taking the initiative to raise awareness of the subject within the company. This involves holding AI ethics study groups within the Data Science Community. Discussion groups are also held to keep people up to date with the latest developments in the field, covering topics such as what is happening with AI ethics in the public sphere as well as relevant research, internal governance, and regulation. These groups are open to all Hitachi staff regardless of profession or department and have been attended by more than 500 people, including senior management. The classroom training and study groups have also been augmented with workplace-based training that looks at specific use cases. Printed materials containing around 10 such AI use cases are distributed to workplaces, where staff form groups of between 10 and 20 people, choose several use cases from the material, and discuss the issues they raise in the context of AI ethics. More than 1,000 staff with an involvement in the research or use of AI have participated in this program.

In addition to in-house practices, Hitachi is also pursuing the research and development of technologies for ensuring AI ethics compliance based on its corporate mission of “contributing to society through the development of superior, original technology and products.” Along with AI algorithms that make AI explainable or enhance fairness by detecting and minimizing data biases, the topics covered by this research and development also include ways of monitoring models and detecting performance degradation. Work is also being done on data governance practices such as data lineage processes for achieving data traceability. Some of this research work is described in the article “Research and Development of AI Trust and Governance”(5).
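To make the idea of detecting data bias concrete, the sketch below computes one widely used indicator, the gap in positive-label rates between groups (often called the demographic parity difference). This is offered only as a generic example under stated assumptions: it is not the algorithm developed in Hitachi’s research, and the function names `positive_rate_by_group` and `parity_gap` are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(groups, labels):
    """Share of positive labels (label == 1) within each group."""
    if len(groups) != len(labels):
        raise ValueError("groups and labels must have the same length")
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for g, y in zip(groups, labels):
        counts[g][0] += int(y == 1)
        counts[g][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def parity_gap(groups, labels):
    """Largest difference in positive rate between any two groups."""
    rates = positive_rate_by_group(groups, labels)
    if not rates:
        raise ValueError("no records supplied")
    return max(rates.values()) - min(rates.values())
```

A gap close to zero suggests the groups receive positive labels at similar rates; a large gap is a prompt for closer investigation rather than proof of unfairness, since the appropriate fairness criterion depends on the application.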

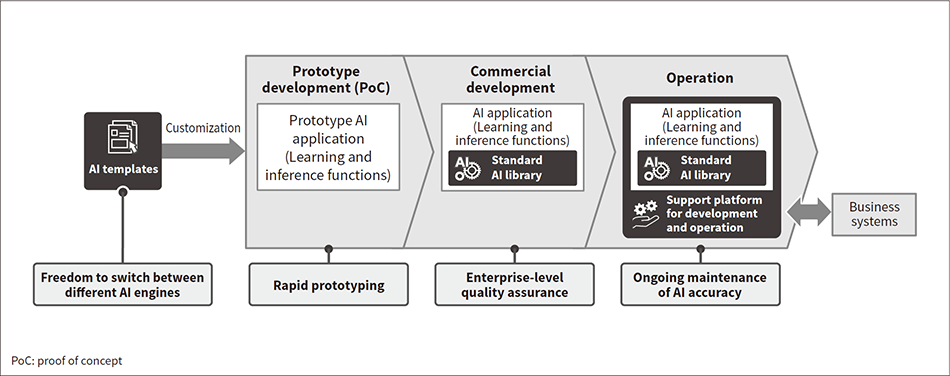

Hitachi also provides an AI application framework for faster AI implementation in corporate and social infrastructure applications that is based on the AI Ethical Principles(6) (see Figure 2).

This framework consolidates Hitachi’s expertise in the development and operation of AI and supports the development and operation of efficient, high-quality AI systems in highly specialized enterprise applications that demand a high level of knowledge. Once an AI system is in operation, the framework continuously monitors and analyzes the input data and results and automatically detects anomalous data or results based on predefined rules, which helps prevent the degradation of prediction accuracy. If such degradation does occur, the framework can also call up a newly retrained model and swap out the model used previously.
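The monitoring behavior described above (rule-based detection of anomalous inputs or results, followed by a model swap) can be pictured with a minimal Python sketch. It is not the AI application framework itself: the `MonitoredModel` class, its method names, the sliding-window size, and the anomaly-rate threshold are all hypothetical choices made for illustration.

```python
from collections import deque


class MonitoredModel:
    """Wraps a model callable and checks each call against predefined rules."""

    def __init__(self, model, rules, window=100, max_anomaly_rate=0.1):
        self.model = model                  # callable: features -> prediction
        self.rules = rules                  # name -> predicate(features, prediction)
        self.recent = deque(maxlen=window)  # True for each recent anomalous call
        self.max_anomaly_rate = max_anomaly_rate

    def predict(self, features):
        """Return the prediction and the names of any rules it violated."""
        prediction = self.model(features)
        violated = [name for name, rule in self.rules.items()
                    if rule(features, prediction)]
        self.recent.append(bool(violated))
        return prediction, violated

    def anomaly_rate(self):
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def needs_swap(self):
        """True once a full window shows more anomalies than allowed."""
        full = len(self.recent) == self.recent.maxlen
        return full and self.anomaly_rate() > self.max_anomaly_rate

    def swap(self, new_model):
        """Replace the model with a retrained one and reset the window."""
        self.model = new_model
        self.recent.clear()
```

In this sketch a rule is any function that returns `True` for an anomalous input or result, for example `lambda x, y: y < 0` for a quantity that should never be negative. Waiting for a full window before signalling a swap is one simple way to avoid reacting to a single outlier; a production system would likely also track prediction accuracy against ground truth where it is available.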

Figure 2: AI Application Framework. The framework consolidates Hitachi’s expertise in the development and operation of AI and supports the development and operation of efficient, high-quality AI systems in highly specialized enterprise applications that demand a high level of knowledge.

Figure 3: Kyōsō-no-mori Webinar. Hosted in October 2021 by the Kyōsō-no-mori facility in Kokubunji, Tokyo, the webinar was an opportunity to discuss AI governance with experts from both inside and outside of Hitachi.

This article has described Hitachi’s principles guiding the ethical use of AI in Social Innovation Business and how these principles are being put into practice.

By formulating its own set of principles based on its corporate culture and the nature of its business, Hitachi is seeking to document its stance on actions across the company and to achieve consistency in staff understanding of the subject. Work on establishing in-house governance systems has also accelerated. There is a clear sense that the task of formulating the principles has left staff with a greater appreciation than before of AI and its uses.

Some hold the view that AI governance and innovation are two different things and that strengthening AI governance will pose obstacles to innovation. However, governance and principles for using AI are essential if innovation is to happen, and the research, development, and application of AI can only proceed with the consent of the public based on international cooperation and agreement among stakeholders. Hitachi has in the past engaged in dialogue with stakeholders on such AI technologies as its complementary metal-oxide semiconductor (CMOS) Ising computer(7),(8) and debating AI(9). It is also striving to incorporate outside perspectives into its research and development, hosting the Kyōsō-no-mori Webinar(10) on AI governance and trust in 2021 (see Figure 3). In the future, Hitachi intends both to enhance social, environmental, and economic value through a raised awareness of the importance of working in harmony with multiple stakeholders and to contribute to the creation of a comfortable and sustainable society based on human dignity and higher QoL around the world.