Autonomous Driving and Electrification for Safety and Security

Since the 1990s, the Hitachi Group has been developing autonomous driving and advanced driver support systems, mainly in the field of automotive equipment. For autonomous driving and driver assistance functions to evolve, it is important to develop technology for sensing the driving environment. This article presents the multi-sensor configurations developed by Hitachi and describes a stereo-camera that captures the driving environment in three dimensions, a sensor fusion function that uses multiple sensors, and technology for implementing AI. It also describes Hitachi’s approaches to the simulation and verification technologies needed to verify increasingly advanced sensing systems.

The technology development and practical application of autonomous driving (AD) and advanced driver assistance systems (ADAS) are progressing at an increasingly rapid pace, as vehicles are equipped with sensors for understanding the driving environment, with vehicle controllers, and with functions such as collision avoidance and hands-off driving on highways. To further enhance safety and security, there is a growing need for improved sensing functions, such as the detection of falling objects and of vehicles that suddenly cut in, which are difficult to detect with conventional sensors. In addition, as the range of conditions in which autonomous driving functions can be used expands, multi-sensor configurations that combine multiple sensors are expected to become mainstream, enabling stable sensing across a wide range of driving environments.

This article describes the basic configuration of driver assistance systems and the future configuration of all-around sensing systems, and presents Hitachi’s approaches to stereo-cameras, sensor fusion, artificial intelligence (AI), and simulation-based verification of increasingly advanced sensing functions, as part of developing sensing technologies for various use cases.

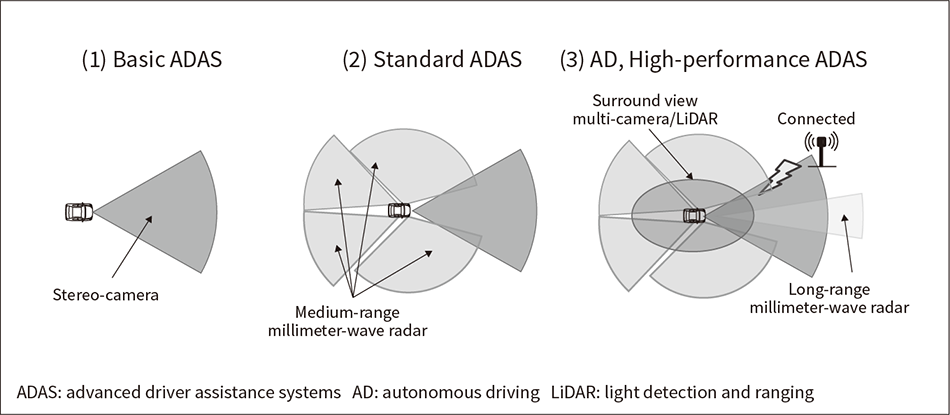

Automotive systems for sensing the driving environment have become more sophisticated with advances in sensor devices and microcomputer technology, and with growing needs arising both from safety assessments that standardize driver assistance functions and from autonomous driving, which reduces the burden on the driver. Figure 1 shows the configurations of the driving environment sensing system developed by Hitachi. The basic ADAS shown in (1) of Figure 1, which has functions such as collision damage mitigation braking, provides low-cost sensing of the area in front of the vehicle using a single stereo-camera that gives a stereoscopic view of a wide area ahead. The standard ADAS shown in (2) of Figure 1 adds a function that supports safety at the rear sides of the vehicle and uses multiple medium-range millimeter-wave radars in addition to the stereo-camera to sense the entire area around the vehicle. In the AD and high-performance ADAS shown in (3) of Figure 1, a long-range millimeter-wave radar for detecting distant objects and multiple cameras or light detection and ranging (LiDAR) sensors can be added to sense the entire surroundings and enable even more advanced sensing functions. In sensing systems that use these multiple sensors, a sensor fusion function integrates the results of the on-board sensors into a redundant configuration in which the sensors complement each other, allowing highly reliable sensing of the driving environment even when some sensors are impaired. The next chapter describes the features of Hitachi’s sensing system.

Figure 1 — Hitachi’s Lineup of Driver Environment Sensing Configurations. The basic ADAS is implemented using a stereo-camera. A camera and radar can also be added to achieve multi-functionality and improved reliability.

Among the sensor configurations mentioned above, this chapter presents the stereo-camera used for front sensing, sensor fusion technology that uses multiple cameras and radars, and AI implementation technology for advanced recognition and decision-making in complex driving environments.

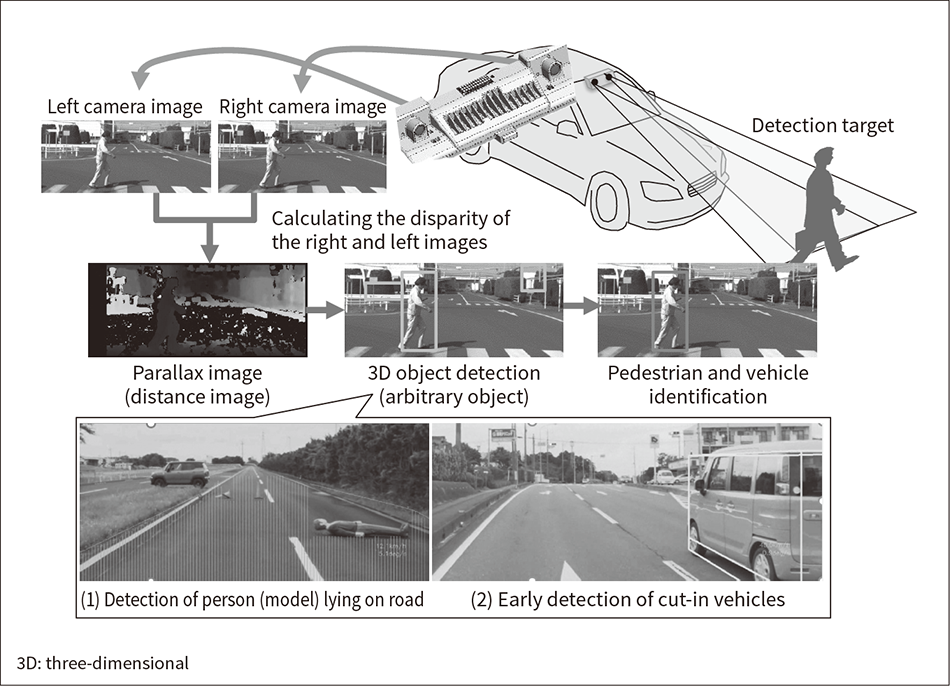

Figure 2 — Measurement Principle of Stereo-cameras. Parallax images are generated from the disparity between the views of the left and right cameras, and arbitrarily shaped three-dimensional objects (1) and (2) are detected from the parallax images.

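The caption states the stereo principle only briefly. For a rectified stereo pair, the standard pinhole-camera relation converts a disparity of d pixels into depth Z = f·B/d, where f is the focal length in pixels and B is the baseline between the left and right cameras; nearer objects therefore produce larger disparities. The following minimal Python sketch applies that textbook relation and back-projects a pixel into camera coordinates. The `StereoRig` type and all parameter values are hypothetical, and this is not Hitachi’s implementation, which also involves image matching and object detection steps not described here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class StereoRig:
    """Hypothetical rectified stereo-camera parameters (illustrative values only)."""
    focal_px: float    # focal length, in pixels
    baseline_m: float  # distance between left and right optical centers, in meters
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def depth_from_disparity(rig: StereoRig, disparity_px: float) -> Optional[float]:
    """Depth Z = f * B / d in meters, or None when the disparity is not positive."""
    if disparity_px <= 0:
        return None  # no valid match, or a point effectively at infinity
    return rig.focal_px * rig.baseline_m / disparity_px


def point_from_pixel(rig: StereoRig, u: float, v: float,
                     disparity_px: float) -> Optional[Tuple[float, float, float]]:
    """Back-project left-image pixel (u, v) with known disparity to (X, Y, Z) in meters."""
    z = depth_from_disparity(rig, disparity_px)
    if z is None:
        return None
    x = (u - rig.cx) * z / rig.focal_px
    y = (v - rig.cy) * z / rig.focal_px
    return (x, y, z)


if __name__ == "__main__":
    rig = StereoRig(focal_px=1400.0, baseline_m=0.35, cx=640.0, cy=360.0)
    # With these hypothetical values, a 10-pixel disparity is about 1400 * 0.35 / 10 = 49 m.
    print(depth_from_disparity(rig, 10.0))
    print(point_from_pixel(rig, 780.0, 360.0, 10.0))
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters much more for distant objects than for near ones, which is one reason the text pairs the stereo-camera with long-range radar in the higher-end configurations.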

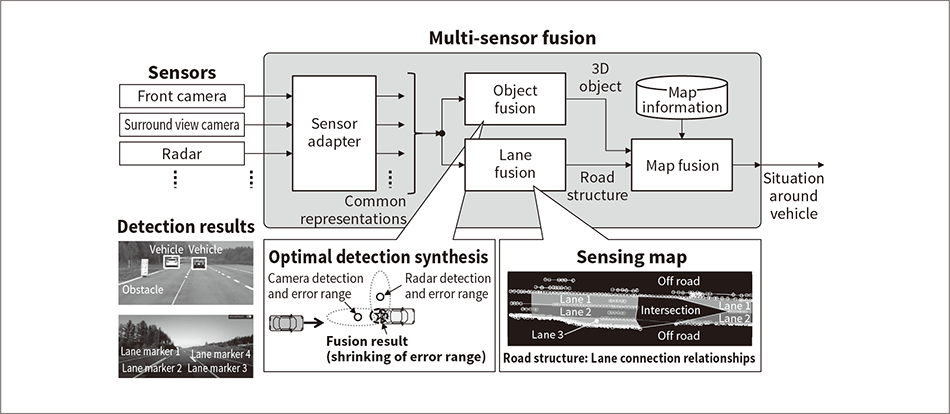

To accurately recognize the situation around the vehicle, which is necessary for making driving decisions, Hitachi is developing a multi-sensor fusion function that integrates the detection results from multiple sensors, such as cameras and radar (see Figure 3).

The multi-sensor fusion function allows multiple sensors to complement each other in recognizing the situation around the vehicle, enabling reliable driving decisions even in difficult situations, such as backlighting that impairs some sensors, or sensor failures. The function consists of a sensor adapter that absorbs the differences between the information detected by each sensor by converting it into a common representation; object fusion for three-dimensional objects such as vehicles and pedestrians; lane fusion for road features such as lane markers and road edges; and map fusion, which combines the recognition results from the sensors with map information.

The function has two distinctive features. The first, “optimal detection synthesis,” uses the detection error model of each sensor to select and combine the detection results in which each sensor excels, achieving higher accuracy than any individual sensor. The second, the “sensing map,” reconstructs map-like information from sensor data by extracting the road structure as lanes, which are estimated from the positional relationships of the lane markers and road edges detected by the sensors and from their connection relationships. Optimal detection synthesis allows additional sensors to be used to obtain the positions and speeds of three-dimensional objects more reliably and accurately, and the sensing map makes it possible to respond to road construction and other unexpected situations that are not included in the map information. Together, they contribute to safe driving by allowing autonomous driving and driver assistance to continue operating.
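The article does not specify the detection error model or the synthesis rule. One common way to realize the idea of combining "the detection results in which each sensor excels" is inverse-variance weighting on each axis: a camera typically measures lateral position more precisely than distance, whereas a millimeter-wave radar typically measures distance more precisely than lateral position. The minimal Python sketch below assumes independent Gaussian errors with hypothetical per-axis standard deviations, assumes the detections have already been associated with the same object, and also shows a simplified, hypothetical "sensor adapter" that converts a range/azimuth measurement into a common vehicle frame. All names and numbers are illustrative assumptions, not Hitachi’s design.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Detection:
    """One object detection in the common vehicle frame (hypothetical format)."""
    x_m: float        # longitudinal position, forward positive, meters
    y_m: float        # lateral position, left positive, meters
    sigma_x_m: float  # assumed 1-sigma longitudinal error from the sensor's error model
    sigma_y_m: float  # assumed 1-sigma lateral error from the sensor's error model


def adapt_polar(range_m: float, azimuth_rad: float, mount_x_m: float,
                mount_y_m: float, mount_yaw_rad: float,
                sigma_x_m: float, sigma_y_m: float) -> Detection:
    """Hypothetical sensor adapter: convert a range/azimuth measurement taken in the
    sensor's own frame into the vehicle frame using the sensor's mounting pose.

    Simplification: the sigmas are assumed to be given already along the vehicle
    axes, so they are passed through without covariance propagation.
    """
    bearing = azimuth_rad + mount_yaw_rad
    return Detection(
        x_m=mount_x_m + range_m * math.cos(bearing),
        y_m=mount_y_m + range_m * math.sin(bearing),
        sigma_x_m=sigma_x_m,
        sigma_y_m=sigma_y_m,
    )


def fuse(detections: List[Detection]) -> Tuple[float, float, float, float]:
    """Inverse-variance weighted fusion of detections of the same object, per axis.

    Returns (x, y, sigma_x, sigma_y). On each axis the fused sigma is never larger
    than the smallest input sigma on that axis.
    """
    wx = [1.0 / d.sigma_x_m ** 2 for d in detections]
    wy = [1.0 / d.sigma_y_m ** 2 for d in detections]
    x = sum(w * d.x_m for w, d in zip(wx, detections)) / sum(wx)
    y = sum(w * d.y_m for w, d in zip(wy, detections)) / sum(wy)
    return x, y, math.sqrt(1.0 / sum(wx)), math.sqrt(1.0 / sum(wy))


if __name__ == "__main__":
    # Hypothetical error models: camera precise laterally, radar precise longitudinally.
    camera = Detection(x_m=41.0, y_m=1.50, sigma_x_m=2.0, sigma_y_m=0.1)
    radar = adapt_polar(range_m=36.2, azimuth_rad=0.058, mount_x_m=3.8, mount_y_m=0.0,
                        mount_yaw_rad=0.0, sigma_x_m=0.2, sigma_y_m=0.6)
    print(fuse([camera, radar]))
```

With these assumed error models, the fused longitudinal position follows the radar and the fused lateral position follows the camera, and both fused uncertainties are smaller than either sensor’s alone.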

Figure 3 — Multi-sensor Fusion Function. Using the detection results of three-dimensional objects and lane markers from each sensor, three-dimensional objects are integrated by object fusion, lane information such as lane markers is integrated by lane fusion, and these integrated results are combined with map information by map fusion to output the situation around the vehicle with high reliability and accuracy. In object fusion, detection accuracy is improved by utilizing the advantages of each sensor to reduce errors through optimal detection synthesis technology. Lane fusion uses sensing map technology to extract road structures such as lane connection relationships and boundaries to deal with construction and other unexpected situations that do not appear in the map.

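The sensing map is described only at a high level: lanes are estimated from the positional relationships of detected lane markers and road edges. As a deliberately simplified, hypothetical illustration of that idea, the Python sketch below takes the lateral offsets of the boundaries detected at one longitudinal position, sorts them, and treats each adjacent pair whose spacing falls within an assumed plausible lane-width range as a lane. The thresholds and types are assumptions, and the connection relationships between lanes along the road, which the actual function also extracts, are not modeled.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical plausible lane-width range, in meters.
MIN_LANE_WIDTH_M = 2.5
MAX_LANE_WIDTH_M = 4.5


@dataclass(frozen=True)
class Boundary:
    """A detected lane boundary at one longitudinal position (hypothetical format)."""
    kind: str        # "marker" or "road_edge"
    offset_m: float  # lateral offset from the ego vehicle, left positive, meters


@dataclass(frozen=True)
class Lane:
    right_m: float  # lateral offset of the right boundary
    left_m: float   # lateral offset of the left boundary

    @property
    def center_m(self) -> float:
        return 0.5 * (self.left_m + self.right_m)

    @property
    def width_m(self) -> float:
        return self.left_m - self.right_m


def lanes_from_boundaries(boundaries: List[Boundary]) -> List[Lane]:
    """Pair adjacent boundaries, right to left, whose spacing looks like a lane."""
    offsets = sorted(b.offset_m for b in boundaries)
    lanes = []
    for right, left in zip(offsets, offsets[1:]):
        if MIN_LANE_WIDTH_M <= left - right <= MAX_LANE_WIDTH_M:
            lanes.append(Lane(right_m=right, left_m=left))
    return lanes


if __name__ == "__main__":
    # Hypothetical detections: road edges on both sides and two lane markers.
    detected = [
        Boundary("road_edge", -5.3),
        Boundary("marker", -1.75),
        Boundary("marker", 1.75),
        Boundary("road_edge", 5.0),
    ]
    for lane in lanes_from_boundaries(detected):
        print(f"lane center {lane.center_m:+.2f} m, width {lane.width_m:.2f} m")
```

Because the lanes are derived from what the sensors currently see rather than from stored map data, a sketch like this still yields lane hypotheses where markers have been moved or removed, which is the kind of situation the text attributes to the sensing map.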

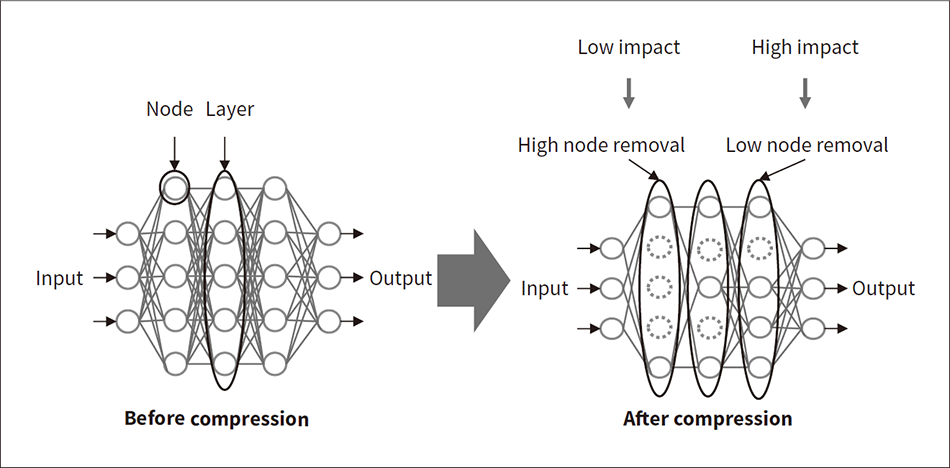

Figure 4 — Overview of AI Compression and Implementation Technology. Before compression, the impact of each node on recognition accuracy is analyzed and aggregated by layer. Compression is then performed by setting a large node removal ratio for low-impact layers and a small node removal ratio for high-impact layers.

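Figure 4 describes the compression policy only qualitatively. The minimal Python sketch below illustrates that policy under stated assumptions: per-node impact scores are taken as already measured (for example, as the accuracy drop when a node is removed; how Hitachi measures impact is not stated), impact is averaged per layer, each layer’s average is mapped linearly to a removal ratio between two hypothetical bounds, and the lowest-impact nodes in each layer are then removed. The linear mapping, the bounds, and the function names are assumptions.

```python
from typing import Dict, List

# Hypothetical bounds on the fraction of a layer's nodes that may be removed.
MAX_REMOVAL_RATIO = 0.7  # used for the lowest-impact layer
MIN_REMOVAL_RATIO = 0.1  # used for the highest-impact layer


def layer_removal_ratios(node_impact: Dict[str, List[float]]) -> Dict[str, float]:
    """Aggregate node impact per layer and map it to a removal ratio.

    Low-impact layers get a ratio near MAX_REMOVAL_RATIO and high-impact layers
    a ratio near MIN_REMOVAL_RATIO (linear interpolation, an assumed mapping).
    """
    layer_impact = {name: sum(v) / len(v) for name, v in node_impact.items()}
    lo, hi = min(layer_impact.values()), max(layer_impact.values())
    ratios = {}
    for name, impact in layer_impact.items():
        t = 0.5 if hi == lo else (impact - lo) / (hi - lo)  # 0 = least, 1 = most impact
        ratios[name] = MAX_REMOVAL_RATIO - t * (MAX_REMOVAL_RATIO - MIN_REMOVAL_RATIO)
    return ratios


def nodes_to_keep(node_impact: Dict[str, List[float]]) -> Dict[str, List[int]]:
    """Return, per layer, the indices of the nodes kept after removing the
    lowest-impact nodes according to that layer's removal ratio."""
    ratios = layer_removal_ratios(node_impact)
    keep = {}
    for name, impacts in node_impact.items():
        n_remove = round(len(impacts) * ratios[name])  # nearest whole number of nodes
        by_impact = sorted(range(len(impacts)), key=lambda i: impacts[i])  # ascending
        keep[name] = sorted(by_impact[n_remove:])
    return keep


if __name__ == "__main__":
    # Hypothetical per-node impact scores for three layers.
    impact = {
        "conv1": [0.90, 0.80, 0.70, 0.95],
        "conv2": [0.01, 0.02, 0.03, 0.05],
        "fc": [0.30, 0.10, 0.40, 0.20],
    }
    print(layer_removal_ratios(impact))
    print(nodes_to_keep(impact))
```

Setting the ratio per layer, rather than applying one global ratio, protects layers where almost every node matters while still removing many nodes from layers that contribute little, which matches the intent described in the caption.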

As sensing functions become more advanced, the number of functional verification items has increased significantly, and there is a limit to how much testing can be conducted using actual vehicles alone. In addition, cameras that recognize the area around a vehicle must operate in a wide range of weather conditions and traffic environments, and these environments must be recreated to verify the cameras’ functionality. For sensing function verification, Hitachi is working to recreate the driving environment more faithfully in simulation, without using an actual vehicle, and has built a hardware-in-the-loop simulation (HILS) system that serves as a stereo-camera development environment using high-definition computer graphics (CG) (see Figure 5).

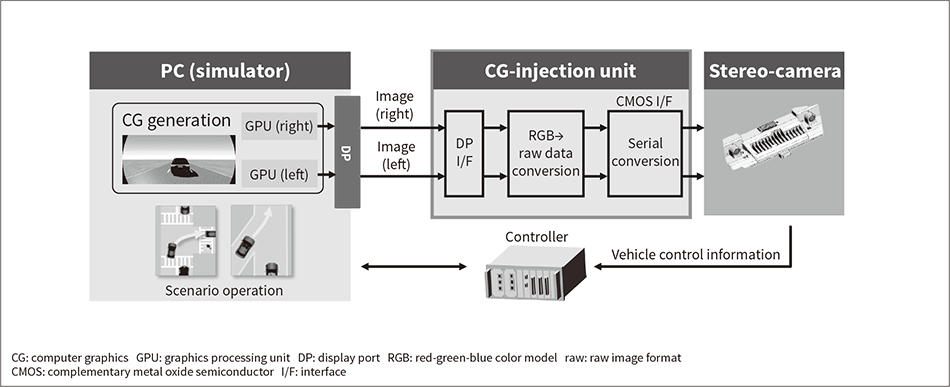

Figure 5 — Configuration of High-definition Camera HILS System. The left and right images generated by the simulator are input to the camera to evaluate scenarios that are difficult to recreate using a real vehicle.

The HILS system uses computer-generated high-definition images to keep pace with improvements in image sensor performance, such as higher resolution. So that these high-definition images are handled by the camera as image data obtained from its image sensor, Hitachi developed a CG-injection unit that processes the images and transfers them to the stereo-camera memory in real time. The resulting HILS environment makes it possible to simulate various traffic environments and to verify the sensing functions of the cameras.
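The article does not give the camera’s resolution, bit depth, or frame rate. To illustrate why real-time injection is demanding, the minimal Python sketch below computes the sustained throughput a CG-injection unit would need to deliver every left and right frame, the per-frame time budget, and whether a link of a given capacity leaves some headroom. The `StreamSpec` type, the 20% default margin, and the example values are hypothetical, not Hitachi’s specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamSpec:
    """Hypothetical stereo image stream (example values, not Hitachi's specifications)."""
    width_px: int
    height_px: int
    bits_per_px: int
    fps: float
    cameras: int = 2  # left and right images


def required_throughput_mbps(spec: StreamSpec) -> float:
    """Sustained rate, in megabits per second, to inject every frame of every camera."""
    bits_per_frame = spec.width_px * spec.height_px * spec.bits_per_px
    return bits_per_frame * spec.cameras * spec.fps / 1e6


def frame_budget_ms(spec: StreamSpec) -> float:
    """Time available, in milliseconds, to process and transfer one stereo frame pair."""
    return 1000.0 / spec.fps


def link_is_sufficient(spec: StreamSpec, link_mbps: float, margin: float = 0.2) -> bool:
    """True if a link of `link_mbps` carries the stream with the given headroom fraction."""
    return required_throughput_mbps(spec) * (1.0 + margin) <= link_mbps


if __name__ == "__main__":
    spec = StreamSpec(width_px=1920, height_px=1080, bits_per_px=12, fps=30.0)
    print(f"{required_throughput_mbps(spec):.1f} Mbit/s, "
          f"{frame_budget_ms(spec):.2f} ms per frame pair")
    print(link_is_sufficient(spec, link_mbps=2000.0))
```

With these example values, the stream needs roughly 1.49 Gbit/s and leaves about 33 ms per frame pair for rendering, processing, and transfer, so higher sensor resolutions quickly raise the bar for the injection path.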

In functional verification, it is important to use simulations to evaluate rain, backlighting, and other conditions in which cameras have difficulty performing their sensing functions. This requires realistic CG that closely resembles the real world. Hitachi is participating in “Building a Safety Evaluation Environment in Virtual Space”(3), a project under the Cabinet Office’s “Strategic Innovation Promotion Program (SIP) Phase Two: Automated Driving (Expansion of Systems and Services),” and is developing simulation technology in this project.

In this program, physical phenomena are modeled virtually on the basis of sensor detection principles, and a simulation model that more accurately reproduces the internal behavior of the image sensor is being developed. The goal is a simulation environment that is highly consistent with the real world for use in the functional verification of cameras (see Figure 6).
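The SIP simulation model itself is not described in detail here. As a generic illustration of what reproducing the inside of an image sensor can involve, the minimal Python sketch below applies a common textbook per-pixel model: incident photons are converted to electrons with a quantum efficiency, photon shot noise (Poisson) and read noise (Gaussian) are added, the signal saturates at a full-well capacity, and an analog-to-digital converter (ADC) quantizes it. The `SensorModel` type and all parameter values are hypothetical, and this is not the model developed in the program.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorModel:
    """Hypothetical per-pixel image-sensor parameters."""
    quantum_efficiency: float = 0.6  # fraction of photons converted to electrons
    full_well_e: float = 10000.0     # saturation capacity, electrons
    read_noise_e: float = 3.0        # read-noise standard deviation, electrons
    adc_bits: int = 12               # ADC resolution


def poisson(lam: float, rng: random.Random) -> int:
    """Poisson sample: Knuth's method for small means, normal approximation otherwise."""
    if lam <= 0:
        return 0
    if lam > 30:
        return max(0, round(rng.gauss(lam, math.sqrt(lam))))
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def pixel_dn(photons: float, model: SensorModel, rng: random.Random) -> int:
    """Convert the mean photon count at one pixel into a digital number (DN)."""
    electrons = poisson(photons * model.quantum_efficiency, rng)  # photon shot noise
    electrons = electrons + rng.gauss(0.0, model.read_noise_e)    # read noise
    electrons = min(max(electrons, 0.0), model.full_well_e)       # clip at 0 and full well
    max_dn = 2 ** model.adc_bits - 1
    return round(electrons / model.full_well_e * max_dn)          # ADC quantization


if __name__ == "__main__":
    model = SensorModel()
    rng = random.Random(0)
    # Dark, mid-tone, and saturating exposures for the same hypothetical pixel.
    for photons in (0.0, 5000.0, 1e6):
        print(photons, [pixel_dn(photons, model, rng) for _ in range(5)])
```

Even this simple model shows effects that plain CG rendering omits, such as noise that grows with signal level and clipping in bright regions like backlit scenes, which is why modeling the sensor’s internals improves consistency with real camera output.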

The developed simulation environment will enable evaluation of scenarios that are difficult for sensors to recognize or dangerous to reproduce, and will reduce the number of tests that previously had to be conducted with actual vehicles, thereby contributing to more advanced sensing functions.

Figure 6 — Virtual Modeling Based on Physical Phenomena. A simulation model that is highly consistent with the real world is developed, and its performance limits under rain and other conditions that impede recognition are evaluated.

This article described Hitachi’s approach to sensing technology for AD/ADAS, a field that is expected to advance further. Hitachi will continue to contribute to a safer and more secure automotive society by integrating multiple sensors, such as stereo-cameras and millimeter-wave radars, to develop advanced sensing functions that help reduce accidents and provide greater convenience.