Rising expectations are being placed on robotics as a means of overcoming labor shortages. Given the potential risks that events such as disasters or pandemics pose for society, demand is rising in particular for robots that can reliably complete tasks at locations that humans are unable to access. The deployment of robots in the field poses specific challenges of its own. These include how to deal with environments that differ depending on location or circumstances, how to incorporate the practical experience and expertise of skilled workers into robots, how to make use of measurements or other supply chain information, and how to equip robots with autonomy and “collaborative-safety” control functions that allow them to work safely and harmoniously alongside humans. With the aim of accelerating the digitalization of field work, Hitachi has responded by developing ways of reducing the amount of teaching required to program robots for operation under a range of potential scenarios, as well as techniques for ensuring that robots operate safely when confronted with unexpected situations. This article describes the concept of field robotics and its core technologies.

Robotics has become the subject of extensive research aimed at boosting productivity, not only in industry but also in society as a whole. The objectives include alleviating the worsening labor shortages that accompany aging demographics and a low birthrate. At the same time, national governments around the world have identified the use of robots as a policy issue, notably Germany (with Industrie 4.0) and Japan (with Society 5.0). As a result, the market for robot-related products is expected to continue growing, with Japan’s Ministry of Economy, Trade and Industry estimating it will expand to around 5.3 trillion yen by 2025(1). This has prompted companies from a range of industries to launch various new ventures in recent years.

Meanwhile, the spread of COVID-19 has accelerated the practical deployment of robots. The rapidly growing need for unstaffed and non-contact ways of doing things has promoted the use of autonomous or remotely operated robots that can undertake tasks without humans going to the site. Such robots are being used in places such as offices and public facilities for applications that include transportation, customer relations, cleaning and decontamination, and security. This normalization of robots will likely become even more pronounced in the post-COVID world as coexistence with robots becomes a feature of urban and societal design and the establishment of a legal framework is accelerated.

In this way, the use of robots is expected to expand beyond the traditional restricted environments provided for them at factories or warehouses and into society as a whole. Amid shortages of labor, the construction and maintenance industries in particular are seen as having considerable scope for using robots to address issues such as urbanization, the provision of new infrastructure, and the rapid aging of existing infrastructure, and to improve the quality of work (QoW) in the field. In the case of robots that operate in the field, the requirements go beyond their traditional ability to perform simple repetitive tasks quickly and accurately, with particular importance being placed on their performance in situations where they operate alongside people. This includes the ability to complete tasks correctly without the need for complicated operations or instructions, and the resilience to continue working reliably even when something unexpected happens. The term “field robotics” refers to the next generation of robotics for dealing with a wide variety of objects in diverse environments. Hitachi is developing a platform for implementing such field work solutions.

This article looks at the core technologies of field robotics, specifically those that underpin new ideas that break from past practice, as well as the outlook for the future.

A robot is an intelligent integrated system made up of sensing, decision-making (intelligent information processing), and actuation. Operating at the frontier of intelligent machines doing the work of humans, Hitachi has developed a wide range of robots over the years to meet the needs of the time(2). This has included commercializing special-purpose automated machinery and multi-purpose robots in the early years of robotics dating back to the 1960s, as well as developing a two-leg/four-leg walking robot, a manipulator for a space robot, and a brain surgery robot during the 1980s and 1990s. As a forerunner of what later came to be called cyber-physical systems (CPS), Hitachi in the 2000s introduced the concept of a human-symbiotic robotics platform intended to allow robots to operate in the same spaces as people. This was aimed at creating a society characterized by vibrant interaction among people, things, and information, and it led to Hitachi’s development of a humanoid robot for guidance, a logistics support robot, and a ride-on mobility support robot. Hitachi also commercialized a communication robot in 2020.

Hitachi is now developing field robotics in an effort to strengthen its solution platforms, which provide resilience in a world afflicted by unpredictable events such as earthquakes or pandemics, not to mention societal challenges like labor shortages caused by aging demographics and a low birthrate. The objective of field robotics is to enhance human-symbiotic CPS developed in the past to work alongside people by upgrading both their physical components and those functions that handle physical information in cyberspace. The end result should be a system that can digitize and simulate tasks in cyberspace to perform those tasks autonomously in changeable real-world environments while also sharing information and collaborating with humans or other robots. The following section describes the core technologies for overcoming challenges like these.

Conventional robotics requires both programming on how to respond to various real-world situations and teaching of a large number of operating sequences. This puts practical limits on what tasks robots can be assigned. In response, Hitachi is developing techniques for field robotics that reduce the amount of programming and teaching required. The following sections describe techniques that use deep learning to recognize objects and acquire autonomous behavior, autonomous motion generation by imitation learning, and a reflexive action control system that keeps the robot safe and stable when the unexpected happens.

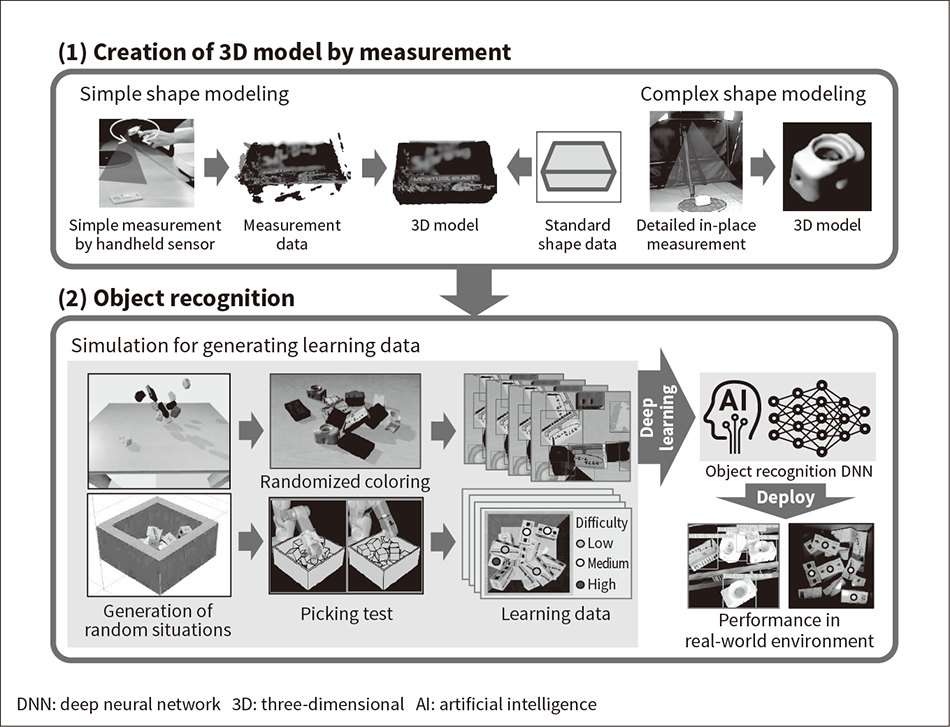

Figure 1 — Simulation-based Deep Learning Technique

This technique uses a sensor to measure an object and create a 3D model. The model is then used in simulations to generate learning data automatically.

Large and diverse quantities of learning data are needed if robots are to reliably recognize the objects they work with in the changeable conditions (surroundings, lighting, and so on) found in real-world environments. As the associated work of setting up the scenes, recording the images, and pointing out the relevant objects has to be done by humans, its high cost has traditionally posed a problem. To overcome this, Hitachi has developed a technique that uses sensors to measure objects and create a three-dimensional (3D) model that can then be used in simulations to auto-generate learning data (see Figure 1). By using standard shapes, highly accurate models can be created from simple measurements made with a handheld sensor(3). Learning data is then generated by making random changes to the color of objects or how they are stacked on top of one another. This gives recognition the robustness needed to cope with the variability of the real world. A technique was also developed that successfully automated the task of teaching robots to deal with objects, using robot motion planning to test picking actions in a simulation(4).
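The data-generation step can be illustrated with a minimal Python sketch. It is not Hitachi's simulator: the `ObjectPose` record, the `random_scene` and `generate_dataset` functions, and the grid-based stacking rule are all hypothetical simplifications introduced here for illustration. The sketch only shows the underlying idea that, because the simulation itself places every object, randomized colors and stacking arrangements come with ground-truth labels and need no manual annotation.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectPose:
    """Ground-truth label for one simulated object (hypothetical format)."""
    color_rgb: tuple[int, int, int]
    x: float
    y: float
    layer: int  # 0 = on the tray, 1 = on top of one other object, and so on


def random_scene(rng: random.Random, n_objects: int,
                 tray_size: float = 1.0, cells: int = 3) -> list[ObjectPose]:
    """Drop objects onto a coarse grid; objects landing in the same cell stack up."""
    if n_objects < 0 or cells < 1:
        raise ValueError("n_objects must be >= 0 and cells must be >= 1")
    step = tray_size / cells
    stack_height: dict[tuple[int, int], int] = {}
    scene = []
    for _ in range(n_objects):
        cell = (rng.randrange(cells), rng.randrange(cells))
        layer = stack_height.get(cell, 0)
        stack_height[cell] = layer + 1
        # Randomize color so a recognizer cannot rely on one appearance.
        color = (rng.randrange(256), rng.randrange(256), rng.randrange(256))
        scene.append(ObjectPose(color,
                                (cell[0] + 0.5) * step,
                                (cell[1] + 0.5) * step,
                                layer))
    return scene


def generate_dataset(seed: int, n_scenes: int, n_objects: int) -> list[list[ObjectPose]]:
    """Generate reproducible labeled scenes; a real pipeline would also render images."""
    rng = random.Random(seed)
    return [random_scene(rng, n_objects) for _ in range(n_scenes)]


if __name__ == "__main__":
    for pose in generate_dataset(seed=0, n_scenes=1, n_objects=4)[0]:
        print(pose)
```

In an actual pipeline, each scene description would be passed to a renderer using the measured 3D model, and the poses would serve as the labels for the rendered image.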

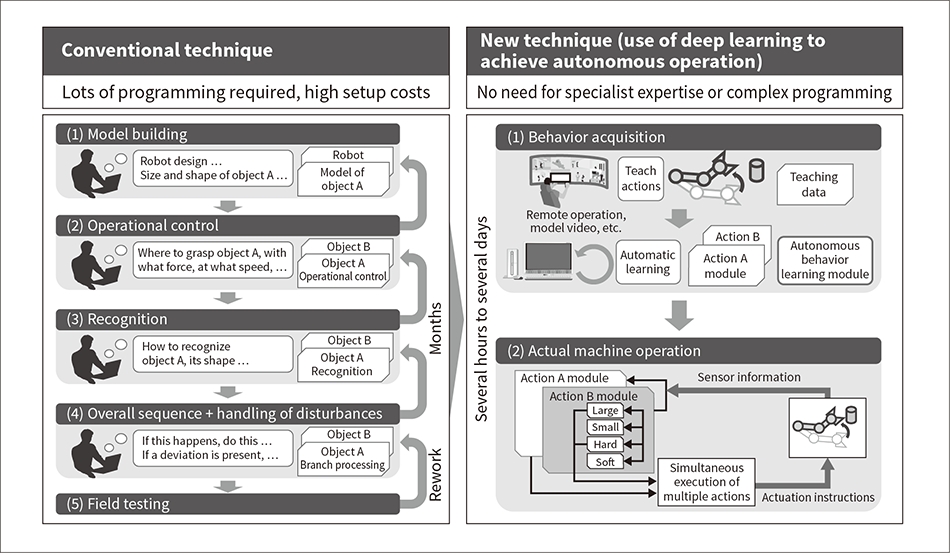

With conventional technology, it is necessary to identify a method for recognizing likely situations and to specify what to do in each case, work that requires a large amount of programming. As a result, it can take several months to get a robot up and running. In response, Hitachi has developed a deep learning technique that can equip robots with autonomous operation using simple procedures without the need for programming. Deep learning works by training on input and output data (teaching data) to automatically generate a complex model of the relationships between input and output. This gives the model an ability to generalize whereby, even in situations for which it has not been trained, it can generate appropriate outputs by analogy with situations for which it has been trained. This learning prevents robot performance from being compromised by differences in things like lighting levels or the shape of an object being held (see Figure 2).

Figure 2 — Comparison of New Technique (Use of Deep Learning to Teach Autonomous Behavior) and Past Practice

The diagram shows the sequence of steps required to get the robot to perform an operation using the new deep learning technique for teaching autonomous behavior and how it was done previously.

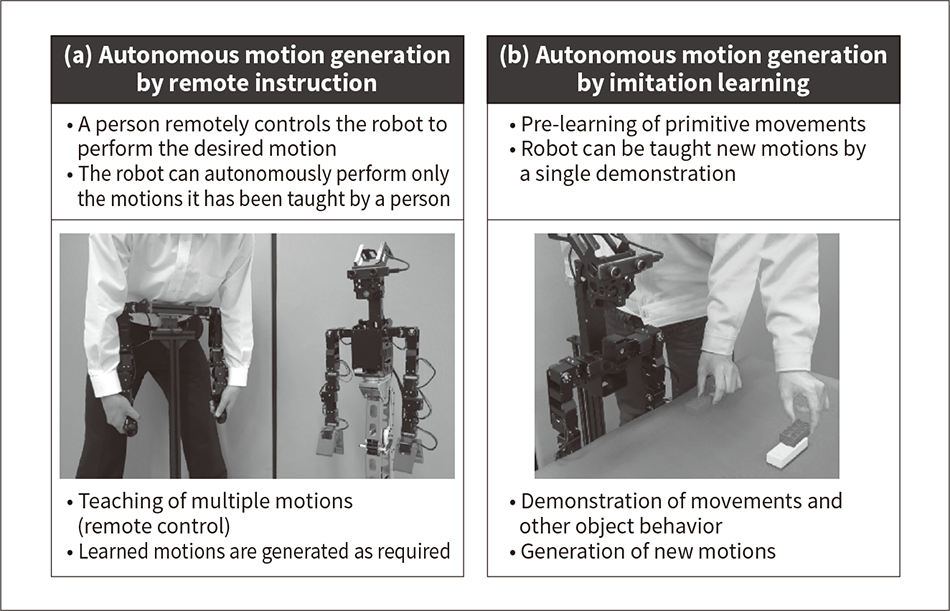

People typically acquire a new manual skill by imitating an action performed by others and then practicing it repeatedly until it feels natural. To get robots to learn the same way, Hitachi has developed a technique whereby a human performs the action a single time and the robot imitates it. In the past, when a need arose in the field to quickly get a robot to perform a particular action, it was necessary to actually operate the robot several times by remote control to obtain teaching data [see Figure 3 (a)]. To make this easier, and to equip robots with the ability to generalize so that they can cope with unknown situations or disturbances, imitation learning uses deep learning to train the robot beforehand on a number of primitive movements. It then identifies the deep learning parameters needed for the robot to autonomously replicate the particular action performed by the human. The preliminary learning of primitive movements collects images of the robot and the angle of each joint at each control interval. When the robot arm is simply being moved, the main data collected are the joint angles. When the robot performs an action on an object, the position and orientation of the object are collected. The robot then automatically replicates the action that most closely resembles the action performed by the human [see Figure 3 (b)](5). This makes it possible, for example, to get the robot to perform a new task in the field simply by having a skilled worker give a demonstration.

Figure 3 — Autonomous Motion Generation by Remote Instruction and Imitation Learning

The photographs compare remote instruction, whereby the robot is made to repeat the action a number of times under remote control, with imitation learning in which the robot draws on pre-learned primitive movements to automatically generate motion that approximates that acted out by a person.
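The final matching step, in which the robot replicates the pre-learned action closest to the human demonstration, can be sketched in Python as follows. This is a deliberate simplification and an assumption on our part: the technique described above identifies deep learning parameters, whereas this sketch substitutes a plain nearest-neighbor comparison of joint-angle trajectories. The names `trajectory_distance` and `closest_primitive` and the example primitives are hypothetical.

```python
from math import inf

# One tuple of joint angles (radians) per control interval.
Trajectory = list[tuple[float, ...]]


def trajectory_distance(a: Trajectory, b: Trajectory) -> float:
    """Mean squared joint-angle difference between two equal-length trajectories."""
    if not a or len(a) != len(b):
        raise ValueError("trajectories must be non-empty and of equal length")
    total, count = 0.0, 0
    for step_a, step_b in zip(a, b):
        if len(step_a) != len(step_b):
            raise ValueError("joint counts differ between trajectories")
        for qa, qb in zip(step_a, step_b):
            total += (qa - qb) ** 2
            count += 1
    return total / count


def closest_primitive(demo: Trajectory, primitives: dict[str, Trajectory]) -> str:
    """Return the name of the pre-learned primitive most similar to the demonstration."""
    best_name, best_dist = None, inf
    for name, trajectory in primitives.items():
        dist = trajectory_distance(demo, trajectory)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_name is None:
        raise ValueError("no primitives available")
    return best_name


if __name__ == "__main__":
    # Hypothetical two-joint primitives sampled at three control intervals.
    primitives = {
        "reach": [(0.0, 0.0), (0.5, 0.2), (1.0, 0.4)],
        "lift": [(0.0, 0.0), (0.0, 0.5), (0.0, 1.0)],
    }
    demo = [(0.0, 0.1), (0.4, 0.2), (0.9, 0.5)]
    print(closest_primitive(demo, primitives))
```

A learned model would generalize between primitives rather than pick one of them, which is why the sketch should be read only as an illustration of "most closely resembles".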

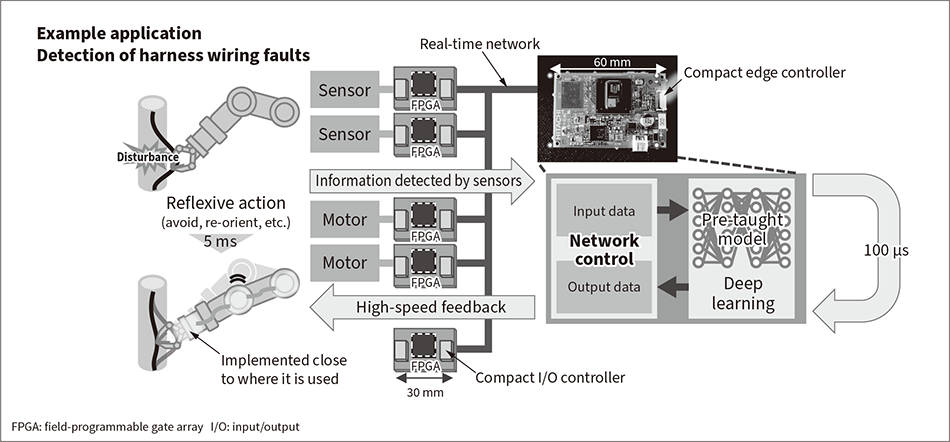

Figure 4 — Reflexive Action Control System (High-speed Feedback System)

The high-speed feedback control system is made up of a compact edge controller that can execute deep-learning-derived control at high speed; a small I/O controller for sensors, motors, and other I/O; and a real-time network linking them together.

As the real world is subject to unanticipated changes, robots require a capacity for reflexive action to prevent them from making errors or from being knocked over, and ultimately to maintain safety and stability without damaging, dropping, or spilling whatever they are working with. Unfortunately, when deep learning control for triggering reflexive action is incorporated into existing robot control systems, control cycle times are typically on the order of hundreds of milliseconds, which falls well short of the rapid (millisecond-order) control needed for safe robot operation. To overcome this, Hitachi has developed a high-speed feedback control system made up of a compact edge controller that can execute the deep-learning-derived control at high speed and a small input/output (I/O) controller for sensors, motors, and other I/O. These controllers are linked together in a real-time network. The system achieves high speed by implementing the control as hardware circuits on field-programmable gate arrays (FPGAs) in the controllers. The system has demonstrated its ability to respond to an external stimulus in just 5 ms(6) (see Figure 4).
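A short Python sketch can show why control cycle time matters for reflexive action. The timing model is an assumption made here for illustration, not a description of Hitachi's controller: the sensor is sampled at the start of each cycle, and the command computed from that sample takes effect at the start of the next cycle. The function names are hypothetical, and the 5 ms deadline simply reuses the response time quoted above.

```python
def reaction_delay_us(event_us: int, cycle_us: int) -> int:
    """Time from a disturbance to the first command that reacts to it.

    Assumed model: sampling happens at multiples of cycle_us, and the command
    derived from a sample is applied one full cycle after that sample.
    """
    if cycle_us <= 0 or event_us < 0:
        raise ValueError("cycle_us must be positive and event_us non-negative")
    # First sampling instant at or after the event (integer ceiling division).
    sample_us = -(-event_us // cycle_us) * cycle_us
    return sample_us + cycle_us - event_us


def worst_case_delay_us(cycle_us: int) -> int:
    """Worst case at 1 us resolution: the event occurs just after a sampling instant."""
    return reaction_delay_us(1, cycle_us)


def meets_deadline(cycle_us: int, deadline_us: int = 5_000) -> bool:
    """Check whether the worst-case reaction fits within the deadline (default 5 ms)."""
    return worst_case_delay_us(cycle_us) <= deadline_us


if __name__ == "__main__":
    for cycle_ms in (200, 1):
        cycle_us = cycle_ms * 1_000
        print(f"{cycle_ms} ms cycle: worst case {worst_case_delay_us(cycle_us)} us, "
              f"meets 5 ms deadline: {meets_deadline(cycle_us)}")
```

Under this model the worst-case reaction is close to two control cycles, so a cycle of hundreds of milliseconds cannot deliver a millisecond-order reflex no matter how good the learned policy is.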

Hitachi aims to use the core technologies described above, most of which relate to automation, to deploy field robotics in sectors such as infrastructure, healthcare, and building services. As the tasks and working environments in applications like these differ from site to site, many areas are likely to remain dependent on the practical know-how of people, with automation yet to be adopted. Unlike on a factory production line, for example, the equipment and materials dealt with in infrastructure work vary from site to site, and actual dimensions at a particular site may differ from those on the plan depending on things like the quality of the installation work. Working in disaster recovery or other harsh conditions calls for a high level of on-site decision-making, and situations are likely to arise where robots need to coordinate their activities to undertake complex tasks on unknown objects.

One example of work under harsh conditions is the decommissioning of nuclear power plants. As people are unable to spend long periods working in the presence of radiation, this creates a need for remotely operated robots and Hitachi has developed a technique for the safe operation of manipulators(7). This involves work on techniques for making the system more radiation-hardened by controlling the robot in real time using imaging sensors located at a distance rather than equipping it with sensors that feature electronic devices, and on assisting operation by mapping the three-dimensional shape and layout of the work environment to facilitate access to the work site and to avoid collisions with nearby structures.

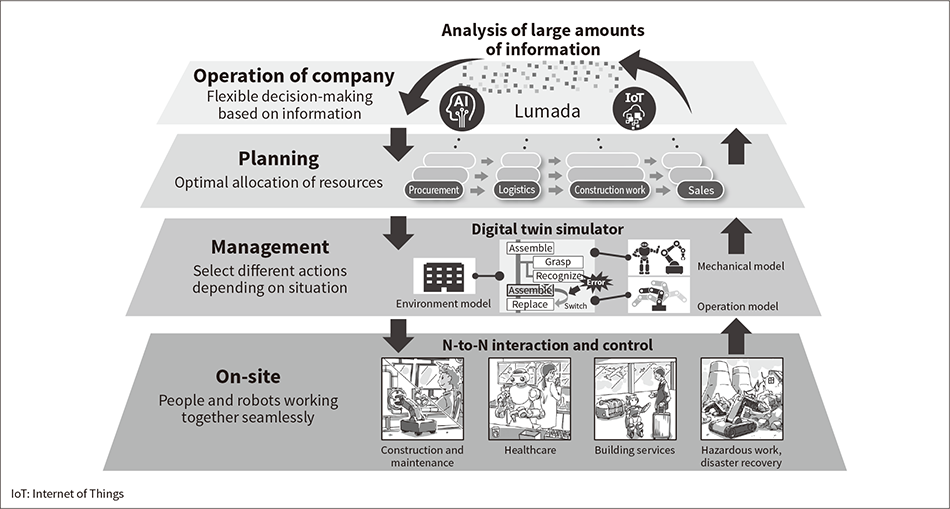

Prompted by the desire to improve labor productivity while keeping people spread out and avoiding close contact, there are signs that the pandemic is reshaping business practices through the use of automation in applications such as logistics and production lines. Regardless of the industry concerned, the key lies in equipping robots with the autonomy and cooperative capabilities needed to deal with changes in their working environments. By using the teaching and control techniques described here, it should be possible to implement intelligent functions at the network edge and build systems that are both large in scale and able to respond flexibly to change. While they are not specifically covered in this article, supporting technologies relating to the physical environment will also be essential to robot operation. Examples include mechanisms whereby robots can share maps and other information about the locations where they operate and “collaborative-safety” control techniques that allow them to work safely alongside people. The objective is also to integrate with systems that manage information from the field that is held in cyberspace (such as that relating to equipment or the supply chain). Achieving this objective would improve overall operational efficiency, including by reducing the manpower or time required to get work done, and would equip systems with the resilience to recover quickly from disasters or other abnormal events (see Figure 5).

Figure 5 — Integration of Cyber and Physical Systems

The objective is to improve efficiency and achieve resilience in the field by integrating cyber-systems for field management with on-site physical systems so they can utilize each other’s data.

This article has given an overview of field robotics and the background to its development. Hitachi plans to make use of the technologies it has developed in a wide range of fields to help create a society in which both robots and people play active roles.

Development of the robot behavior learning and motion generation techniques described in this article was undertaken with assistance from Professor Tetsuya Ogata of Waseda University. The authors would like to take this opportunity to express their deep gratitude.