The aim of the new technology is to automate various worksites

September 29, 2021

Hitachi, Ltd. and a research group led by Professor Tetsuya Ogata of Waseda University’s Faculty of Science and Engineering have developed a deep learning-based robot control technology that simplifies complex operations, with the aim of introducing automation at worksites where it was not previously possible because of the limitations of robots’ operational capabilities. The technology enables robots to learn how to handle objects with variable shapes (e.g., installing cables and covers, handling liquids and powders) without programming, by automatically extracting the characteristics of the robot’s hands and the target object through learning. Moreover, given language instructions that describe the physical characteristics of the target object (color, shape, etc.) and the operation details (gripping, pushing, etc.), robots can execute unlearned operations by means of association. Going forward, Hitachi aims to create systems that support human tasks by automating fieldwork where robots have not yet been introduced, helping to resolve labor shortages related to the aging of society and the declining birthrate.

The National Institute of Advanced Industrial Science and Technology’s AI Bridging Cloud Infrastructure (ABCI) was used for learning of robot operations.

Some of the findings from this research were presented at a workshop*1 during the 2021 International Conference on Robotics and Automation (ICRA 2021), held from May 31 to June 6, and during a poster session at the Japan Society of Mechanical Engineers’ Robotics and Mechatronics Conference 2021 (ROBOMECH2021), held from June 6 to 8.

The newly developed deep learning-based robot control technology consists of the following technologies.

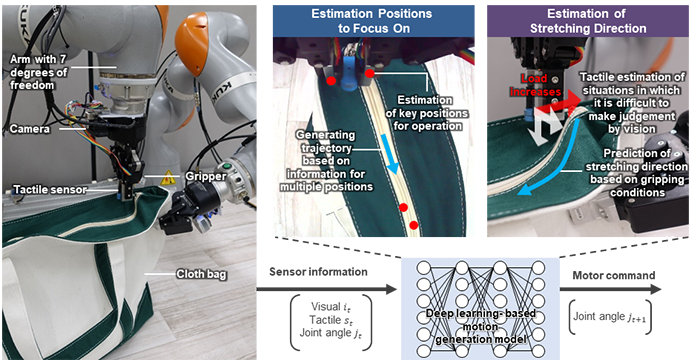

With conventional robot control technology, every operation that can be anticipated for the target object’s orientation and shape is handcrafted by human experts based on information obtained through object recognition (trajectory planning). This is a problem for operations that manipulate objects whose shape changes on contact, such as fabric or string, since it is difficult to cover all situations in the program. With the newly developed technology, after a person simply teleoperates a robot to perform the desired operation multiple times, the robot automatically extracts, from the available visual and tactile information, the information to focus on (target object’s position, direction, etc.) and the operations it should perform at a given time.*2 This makes it possible to execute operations in new situations that occur while working with variably shaped objects, without the need for programming.

Figure 1: Mechanism of Motion Generation Technology That Automatically Extracts Key Information for Trajectory Planning
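The article does not say how the "information to focus on" is computed. As an illustration only, one common way to turn an image-like response map into a single position of interest is a soft-argmax (a softmax-weighted average of pixel coordinates). The sketch below assumes a hypothetical saliency map that a learned network would produce; the function name, the map, and the temperature value are all assumptions, not details from Hitachi's system.

```python
import math


def soft_argmax(heatmap, temperature=1.0):
    """Return the softmax-weighted mean (row, col) position of a 2D map.

    Illustrative only: `heatmap` stands in for a learned saliency map.
    """
    n_cols = len(heatmap[0])
    flat = [value / temperature for row in heatmap for value in row]
    peak = max(flat)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in flat]
    total = sum(weights)
    # Each flat index i maps back to (i // n_cols, i % n_cols).
    row_pos = sum(w * (i // n_cols) for i, w in enumerate(weights)) / total
    col_pos = sum(w * (i % n_cols) for i, w in enumerate(weights)) / total
    return row_pos, col_pos


# Hypothetical 3x4 saliency map with a strong response at row 1, column 2.
saliency = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 9.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
row, col = soft_argmax(saliency, temperature=0.5)
print(f"attention point: row={row:.3f}, col={col:.3f}")
```

A lower temperature makes the result follow the strongest response more closely, while a higher one averages over a wider region. Because the operation is differentiable, a position extracted this way can be trained jointly with a downstream motion model.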

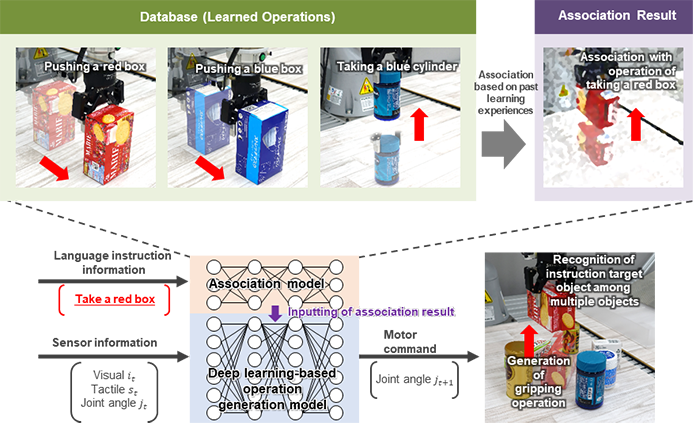

To realize the desired operation based on language instructions from a person (information that people can understand, such as speech or text), everything relating the language instructions to operations previously had to be programmed by human experts. As a result, each time an operation or target object was added, it had to be associated with the language instructions by hand. With the new technology, the robot operation, the target object, and their relation to the language instructions are learned and stored in a database. Hitachi has developed technology that autonomously executes the desired operation in response to unlearned language instructions containing the target object’s physical characteristics (color, shape, etc.) and the operation details (gripping, pushing, etc.), by inputting the associated results for similar operations stored in the database into a motion generation model. As a result, it is no longer necessary to program all relations between the language instructions and operations, which expands the range of operation variations that can be handled.

Figure 2: Mechanism of Technology for Association with Instruction Target Object and Operation
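The idea of retrieving similar stored operations for an unlearned instruction can be sketched as a nearest-neighbor lookup. The example below is a minimal illustration under stated assumptions, not Hitachi's implementation: instructions are compared with a simple bag-of-words cosine similarity, and the database entries, motion identifiers, and function names are all hypothetical. In the described system, the retrieved results would be fed to a learned motion generation model, which is not modeled here.

```python
import math
from collections import Counter


def embed(instruction):
    """Bag-of-words vector for an instruction (stand-in for a learned encoder)."""
    return Counter(instruction.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, database, k=2):
    """Return the k stored (instruction, motion_id) pairs most similar to query."""
    query_vec = embed(query)
    ranked = sorted(
        database.items(),
        key=lambda item: cosine(query_vec, embed(item[0])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical database of learned instruction-to-operation associations.
database = {
    "grip the red cube": "motion_grip_red_cube",
    "push the blue ball": "motion_push_blue_ball",
    "push the green cylinder": "motion_push_green_cylinder",
}

# "grip" and "blue ball" were each learned, but never in this combination.
neighbors = retrieve("grip the blue ball", database, k=2)
for instruction, motion_id in neighbors:
    print(instruction, "->", motion_id)
```

Here the two nearest entries together cover both the object ("blue ball") and the action ("grip"), which is the kind of associated context a motion generation model could combine to handle the unseen instruction.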
