Contributions to Realize Trustworthy AI

January 31, 2022

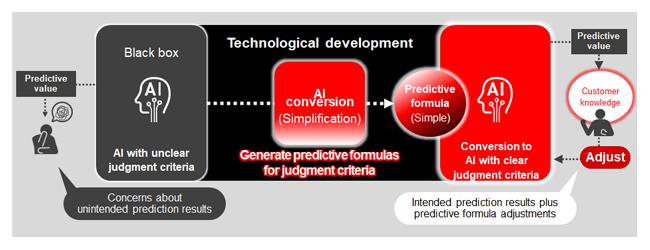

Fig 1-1. Outline of AI Simplification Technology

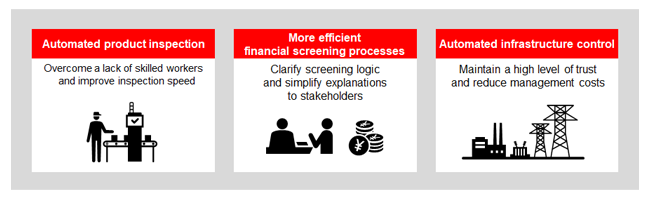

Fig 1-2. Potential Applications

Hitachi, Ltd. has developed AI simplification technology that converts conventional black-box Artificial Intelligence (AI) into AI with clear judgment criteria. Conventional black-box AI relies on complex algorithms to enhance prediction accuracy, but because its judgment criteria are unclear, it carries uncertainty and the risk of unintended prediction results for unknown data. AI converted with this technology instead expresses its predictions as simple predictive formulas that people can understand for every input, so each prediction result rests on clear judgment criteria. Moreover, because customers can adjust these predictive formulas based on their own knowledge and experience, they can use AI with confidence while maintaining or improving prediction accuracy, which will contribute to the realization of trustworthy AI*1.

The Hitachi Group has implemented some of this technology in its own automated lines for pre-shipment product inspection, where it has proven effective both in offsetting a shortage of skilled workers and in increasing inspection speed. In the future, Hitachi will introduce trustworthy AI into a variety of fields, from manufacturing and finance to infrastructure control, which in turn will help accelerate digital transformation throughout society at large.

Furthermore, this technological development is an effort undertaken in accordance with the transparency, explainability and accountability items outlined by the Hitachi Principles Guiding the Ethical Use of AI*2.

The corporate business environment is changing rapidly, driven by an accelerating digital revolution exemplified by the metaverse, increasingly severe global environmental issues, and the COVID-19 pandemic. In this business climate, Hitachi has worked to research and develop practical and trustworthy AI to support the rapid digital transformation of its customers.

Progress in deep learning and related technologies has enhanced the prediction accuracy of AI. However, trustworthy AI must fulfill various other requirements in addition to accuracy, such as explainability, transparency, quality, and fairness. Black-box AI in particular uses many variables and complex algorithms to increase accuracy, which makes it hard for customers to understand. This has led to criticism that a lack of explainability prevents customers from confidently using AI in business operations. To address this issue, Hitachi developed eXplainable Artificial Intelligence (XAI) that analyzes the judgment criteria of AI from many different perspectives, and investigated its viability in various customer operations*3. This work showed that, even when prediction accuracy is high and the judgment criteria can be explained, customers could not trust the AI or vouch for its use, and had to spend considerable time implementing countermeasures, whenever the AI output unexpected prediction results under certain circumstances.

This is why Hitachi capitalized on its vast expertise gained by supporting the digital transformation of customers in a wide range of fields to develop AI simplification technology that converts complex black-box AI into AI with clear judgment criteria as a new XAI system.

Some of this research and development was presented at the IEEE SSCI 2021 held online from December 4 to December 7, 2021.

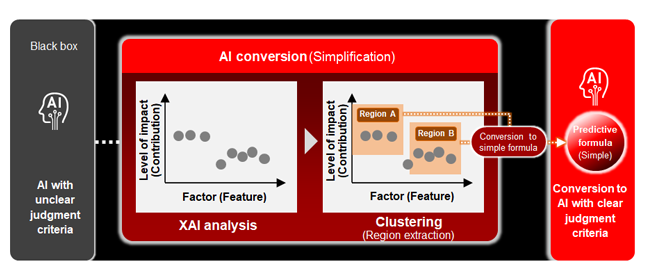

Deep learning models, gradient boosting decision trees*4, and similar methods predict with high accuracy but behave as black boxes. Hitachi has developed technology to convert such complex black-box AI into AI with clear judgment criteria (simple predictive formulas). First, conventional XAI analysis technologies calculate the level of impact (contribution) that each factor (feature) has on the prediction value for a variety of inputs to the AI. Next, clustering technology extracts a region of input data in which the contribution to the prediction value stays consistent even as the features change. Because those feature changes do not influence the prediction value within the extracted region, the judgment criteria for that region can be simplified and the AI converted to a simple predictive formula. This process is repeated for every input data region to convert all regions into simple predictive formulas that customers can understand.

Fig 2. Black-box AI Conversion Technology
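The three steps described above (contribution analysis, region extraction, and per-region formulas) can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: `black_box` is a hypothetical one-feature model, a finite-difference sensitivity stands in for an XAI contribution score, and grouping consecutive inputs with similar sensitivity stands in for the clustering step. It is not Hitachi's implementation.

```python
from statistics import mean


def black_box(year_built: float) -> float:
    """Hypothetical opaque model: a risk score as a function of year built."""
    if year_built < 2000:
        return 80.0 - 0.5 * (year_built - 1950)
    return 30.0 - 0.2 * (year_built - 2000)


def local_sensitivity(f, x: float, h: float = 0.5) -> float:
    """Forward-difference sensitivity; a crude stand-in for a contribution score."""
    return (f(x + h) - f(x)) / h


def split_regions(xs, scores, tol: float = 0.05):
    """Group consecutive inputs whose score stays within tol of the region's first score."""
    regions = [[xs[0]]]
    reference = scores[0]
    for x, score in zip(xs[1:], scores[1:]):
        if abs(score - reference) > tol:
            regions.append([])  # behaviour changed: start a new region
            reference = score
        regions[-1].append(x)
    return regions


def fit_line(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def simplify(f, xs):
    """Replace the opaque model with one simple linear formula per extracted region."""
    scores = [local_sensitivity(f, x) for x in xs]
    formulas = []
    for region in split_regions(xs, scores):
        slope, intercept = fit_line(region, [f(x) for x in region])
        formulas.append(
            {"from": region[0], "to": region[-1], "slope": slope, "intercept": intercept}
        )
    return formulas


formulas = simplify(black_box, [float(year) for year in range(1950, 2021)])
for formula in formulas:
    print(formula)
```

On this toy model the sketch recovers two regions that meet at the year 2000, each described by a single linear formula. A real model would have many features and would need a proper attribution method and clustering algorithm in place of these stand-ins.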

Hitachi has also developed methods that let customers adjust the predictive formulas based on their knowledge and experience, working interactively with the AI until it reaches the necessary prediction accuracy. These methods adjust the threshold and the simple predictive formula of each region obtained with the black-box AI conversion technology described above.

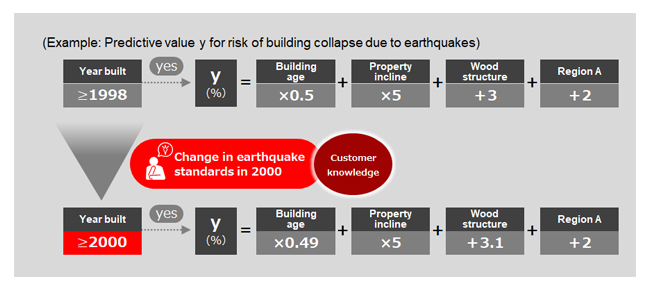

Fig 3. Example of AI with Clear Judgment Criteria

(Before Adjustment)

A. Customers can use their knowledge to adjust the threshold value that separates the AI input data regions produced by the conversion technology described above. For example, to reflect the change in earthquake resistance standards in 2000, a customer can move the AI's judgment threshold from 1998 to around 2000, as shown in Figure 3-A. By incorporating customer knowledge into the threshold value in this way, customers can improve the prediction accuracy in regions with little data.

Fig 3-A. Adjustment Method 1

(Threshold Value Adjustment Leveraging Customer Knowledge)
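As a rough illustration of Adjustment Method 1, the sketch below models two input regions separated by a year threshold and moves that threshold from 1998 to 2000. The class name `TwoRegionModel` and the scores 0.7 and 0.2 are hypothetical values chosen for the example; only the 1998 and 2000 thresholds come from the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TwoRegionModel:
    """Hypothetical simplified AI: one constant prediction on each side of a threshold."""

    threshold_year: int
    older_score: float  # assumed prediction for buildings built before the threshold
    newer_score: float  # assumed prediction for buildings built at or after it

    def predict(self, year_built: int) -> float:
        if year_built < self.threshold_year:
            return self.older_score
        return self.newer_score


# Threshold as learned from data (1998 in the example from the text).
learned = TwoRegionModel(threshold_year=1998, older_score=0.7, newer_score=0.2)

# Customer knowledge: the earthquake resistance standards changed in 2000.
adjusted = replace(learned, threshold_year=2000)

for year in (1997, 1999, 2000):
    print(year, learned.predict(year), adjusted.predict(year))
```

Only buildings from 1998 and 1999 change region after the adjustment, which is exactly the range where a data-derived threshold and the customer's domain knowledge disagree.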

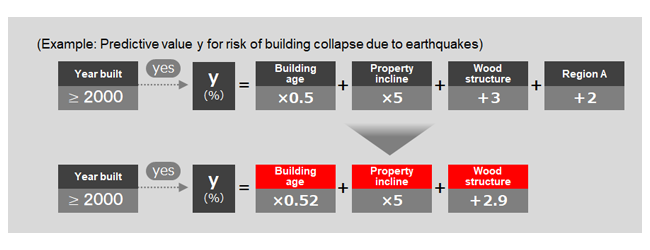

B. Customers can choose the algorithm used as the predictive formula for each region. A customer can designate a simpler algorithm to prioritize the simplicity of the predictive formula over prediction accuracy. For example, if “Predictive formula: y = Building age × 0.5 + Property incline × 5 + [+3 if wooden structure] + [+2 if in Region A] + …” is too complex, a customer can restrict it to three or fewer variables, as shown in Figure 3-B.

Fig 3-B. Adjustment Method 2

(Adjustment to Simplify Formulas by Defining Functions)
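One way to picture Adjustment Method 2 is to reduce the example formula to its three most influential terms. The sketch below uses only the four terms named in the text (the elided remainder of the formula is left out), and the selection rule, keeping the terms with the largest mean absolute contribution on a few hypothetical sample rows, is an assumption for illustration rather than the method Hitachi uses.

```python
# Weights of the four terms named in the example formula.
WEIGHTS = {
    "building_age": 0.5,
    "property_incline": 5.0,
    "is_wooden": 3.0,    # adds 3 when the structure is wooden (feature value 1)
    "in_region_a": 2.0,  # adds 2 when the property is in Region A (feature value 1)
}


def predict(weights, row):
    """Evaluate a linear predictive formula on one input row."""
    return sum(weight * row[name] for name, weight in weights.items())


def keep_top_terms(weights, rows, k=3):
    """Keep the k terms with the largest mean absolute contribution over rows."""
    impact = {
        name: sum(abs(weight * row[name]) for row in rows) / len(rows)
        for name, weight in weights.items()
    }
    kept = sorted(impact, key=impact.get, reverse=True)[:k]
    return {name: weight for name, weight in weights.items() if name in kept}


# Hypothetical sample inputs, used only to rank the terms.
rows = [
    {"building_age": 30, "property_incline": 2, "is_wooden": 1, "in_region_a": 0},
    {"building_age": 10, "property_incline": 1, "is_wooden": 0, "in_region_a": 1},
    {"building_age": 45, "property_incline": 3, "is_wooden": 1, "in_region_a": 1},
]

simple = keep_top_terms(WEIGHTS, rows, k=3)
print(sorted(simple))
print(predict(WEIGHTS, rows[1]), predict(simple, rows[1]))
```

On these sample rows the Region A term is dropped, so predictions shift slightly for properties in Region A. That is the trade-off the text describes: a simpler formula in exchange for some prediction accuracy.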

Customers can easily reflect their knowledge and experience in the predictive formulas precisely because the conversion technology described above makes each formula so simple. Being able to adjust the predictive formulas themselves lets customers configure trustworthy AI simply and quickly.
