8 August 2019

By Hiroki Ohashi

Research & Development Group, Hitachi, Ltd.

Human-action recognition (HAR) can be used in a wide range of applications such as life logging, healthcare, video surveillance, and worker assistance. Recent advances in deep neural networks (DNNs) have drastically enhanced the performance of HAR in terms of both recognition accuracy and coverage of the recognized actions. DNN-based methods, however, sometimes face difficulty in practical deployment. For example, a system user may want to change or add target actions to be recognized, but this is not trivial for DNN-based methods because they require a large amount of training data for the new target actions.

Zero-shot learning (ZSL) is a technology with enormous potential to overcome this dependence on training data when recognizing a new target class. In a normal supervised-learning setting, the set of classes contained in the test data is exactly the same as that in the training data. This is not the case in ZSL: the test data includes "unseen" classes, instances of which are not contained in the training data. In other words, if Ytrain is the set of class labels in the training data and Ytest is that in the test data, then Ytrain=Ytest in the normal supervised-learning framework, while Ytrain≠Ytest in the ZSL framework. Unseen classes are classified using attributes together with a description of each class in terms of those attributes. The description is usually based on external knowledge and, most typically, is manually provided by humans. An attribute represents a semantic property of the class. A classifier that judges the presence of an attribute (or the probability of its presence) is learnt using training data. For example, the attribute "striped" can be learnt using data of "striped shirts," while the attribute "four-legged" can be learnt using data of "lions." Then an unseen class "zebra" can be recognized, without any training data of zebras, by using these attribute classifiers together with the description that zebras are striped and four-legged.
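The zebra example can be made concrete with a minimal sketch. It assumes the attribute classifiers have already been trained and simply return probabilities. The class names other than "zebra", the attribute order, and the nearest-description rule are illustrative assumptions, not a specific published method.

```python
# Minimal sketch of attribute-based zero-shot classification.
# Assumption: attribute classifiers (trained on seen classes such as
# "striped shirts" and "lions") output a probability per attribute.

# Hypothetical attribute order: [striped, four_legged].
# Descriptions of unseen classes come from external knowledge.
CLASS_DESCRIPTIONS = {
    "zebra": [1.0, 1.0],  # striped and four-legged
    "horse": [0.0, 1.0],  # hypothetical extra class: four-legged, not striped
}


def squared_distance(a, b):
    """Squared Euclidean distance between two attribute vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_unseen(attribute_probs, class_descriptions):
    """Return the unseen class whose description is closest to the prediction."""
    return min(
        class_descriptions,
        key=lambda name: squared_distance(attribute_probs, class_descriptions[name]),
    )


# Hypothetical classifier outputs for one test image.
predicted = [0.9, 0.8]
print(classify_unseen(predicted, CLASS_DESCRIPTIONS))
```

No training sample of a zebra is needed here: only the attribute predictions and the class descriptions are used at test time.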

The idea of ZSL has also been applied to human action recognition. While these studies have indeed demonstrated the capability to recognize unseen actions, the attributes they use are relatively task-specific and not fundamental enough to recognize a wide variety of human actions. If a more fundamental and general set of attributes is utilized, however, the potential to recognize a truly wide variety of actions can be substantially increased. To this end, we believe the status of each human-body joint is an appropriate attribute, since any kind of human action can be represented using the set of body-joint statuses.

Sophisticated vision-based methods for estimating the status of body joints exist, but the problem of occlusion needs to be addressed in those approaches. Moreover, they are not suitable for applications in which the target person moves beyond the camera's field of view. Because we aim to develop a method that flexibly recognizes a wide range of human actions with ZSL, we use wearable sensors, which are free from the occlusion problem, to estimate the status of all the major human body joints.

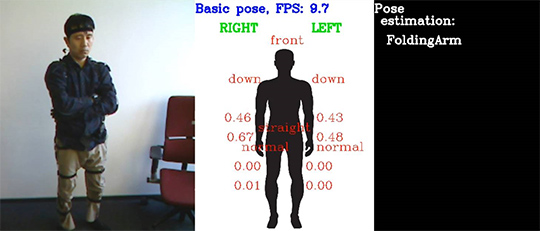

In this post, we describe the first steps in our studies, focusing on the classification of static actions, or poses. We are finding that the biggest challenge in zero-shot pose recognition is the intra-class variation of the poses. The difficulty of intra-class variation in general action recognition has been discussed in prior work [1]. For example, when "folding arms," one person may clench their fists while another may not. Liu et al. [1] introduced a method to deal with intra-class variation by regarding attributes as latent variables. However, this was for normal supervised learning, and their implementation in the ZSL scenario was a naïve nearest-neighbor-based method that did not address the problem. Intra-class variation becomes an even greater problem in ZSL when fine-grained attributes such as each body joint's status are utilized. This is because the values of all attributes must be specified in ZSL, even though some of the attributes may actually take arbitrary values. It is difficult to uniquely define the status of the hands for "folding arms," but the "hands" attribute cannot be omitted because it is necessary for recognizing other poses such as "pointing." Conventional ZSL methods treat all attributes equally, even though some of them are not as important for some of the classes. This sometimes causes misclassification, because a metric (e.g., likelihood or distance) that represents how well a given sample fits a class can be undesirably influenced by irrelevant attributes. Our latest approach therefore addresses the problem by taking the importance of each attribute for each class into account when calculating the metric.
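The effect of per-class attribute importance can be illustrated with a small sketch. The weighted, normalized squared distance below is an assumed form chosen for illustration and is not necessarily the formulation used in our paper. The attribute names, class descriptions, and importance values are likewise hypothetical.

```python
# Minimal sketch: nearest-description classification with per-class
# attribute importance. All numbers below are hypothetical.

# Hypothetical attribute order: [elbow_bent, hand_open], each in [0, 1].
CLASS_DESCRIPTIONS = {
    "folding_arms": [1.0, 1.0],  # a hand status must be specified, though arbitrary
    "pointing": [0.0, 0.0],
}

# Importance of each attribute for each class (0 = irrelevant).
IMPORTANCE = {
    "folding_arms": [1.0, 0.0],  # hand status does not matter for this pose
    "pointing": [1.0, 1.0],
}

# Conventional setting: every attribute counts equally for every class.
UNIFORM = {name: [1.0, 1.0] for name in CLASS_DESCRIPTIONS}


def weighted_distance(sample, description, weights):
    """Importance-weighted squared distance, normalized by the total weight."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("each class needs at least one attribute with positive importance")
    weighted = sum(w * (s - d) ** 2 for w, s, d in zip(weights, sample, description))
    return weighted / total


def classify(sample, descriptions, importance):
    """Return the class with the smallest importance-weighted distance."""
    return min(
        descriptions,
        key=lambda name: weighted_distance(sample, descriptions[name], importance[name]),
    )


# A person folding their arms with clenched fists (hypothetical estimates).
sample = [0.7, 0.0]
print(classify(sample, CLASS_DESCRIPTIONS, UNIFORM))
print(classify(sample, CLASS_DESCRIPTIONS, IMPORTANCE))
```

With uniform weights, the irrelevant hand attribute pulls the sample toward "pointing"; with the importance weights, it no longer contributes to the distance for "folding_arms". Normalizing by the total weight is one possible design choice so that classes with fewer relevant attributes are not favored simply because fewer terms are summed.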

The effectiveness of this method is demonstrated on a human pose dataset that we collected, named the Hitachi-DFKI pose dataset, or HDPoseDS for short. HDPoseDS contains 22 classes of poses performed by 10 subjects wearing 31 inertial measurement unit (IMU) sensors across the full body. To the best of our knowledge, this is the richest dataset, especially in terms of sensor density, for human pose classification based on wearable sensors. Its sensor density gives us a chance to extract a fundamental set of attributes for human poses, namely the status of body joints. It is therefore the first dataset suitable for studying wearable-based zero-shot learning in which a wide variety of full-body poses are involved. We are making this dataset publicly available to encourage further research in this direction by the community.

For more details, we encourage you to read our paper, Attributes’ Importance for Zero-Shot Pose-Classification Based on Wearable Sensors, which was published in Sensors 2018, 18(8), 2485.

The main contribution of this study is twofold. (1) We present a simple yet effective method to enhance the performance of ZSL by taking the importance of each attribute for each class into account. We experimentally show the effectiveness of our method in comparison to baseline methods. (2) We provide HDPoseDS, a rich dataset for human pose classification that is especially suitable for studying wearable-based zero-shot learning. In addition to these major contributions, we also present a practical design for estimating the status of each body joint: while conventional ZSL methods formulate the attribute-detection problem as 2-class classification (whether the attribute is present or not), we estimate it under the scheme of either multiclass classification or regression, depending upon the characteristics of each body joint.