27 August 2020

Hiroki Ohashi

Research & Development Group, Hitachi, Ltd.

In this blog post, I’d like to introduce the idea behind a widely applicable human-activity-recognition (HAR) method for AI-based worker assistance in industry.

Human-activity recognition (HAR) has a wide range of applications in industry, such as worker assistance, video surveillance, life logging, and healthcare. Recent advances in deep neural networks (DNNs) have drastically enhanced the performance of HAR, both in recognition accuracy and in the range of activities that can be recognized.

Conventional systems, however, are often difficult to deploy in practice. They are typically designed to recognize only a predefined set of activities in a specific environment (e.g., a particular factory), so making them work for another set of activities or in a different environment requires rebuilding the model almost from scratch. This costly and time-consuming process prevents HAR systems from being rapidly and widely deployed in industry.

Our goal is to design a framework for zero-shot human activity recognition that overcomes this drawback and realizes an easy-to-deploy system. Zero-shot learning is a technology that aims to recognize a target for which there is no (zero) training data. The key idea in this research is to recognize complex activities as combinations of simpler components, i.e., the actions and objects involved in the activities. Under this framework, any activity that can be represented by a combination of predefined actions and objects can be recognized on the fly, without any additional data collection or modeling.

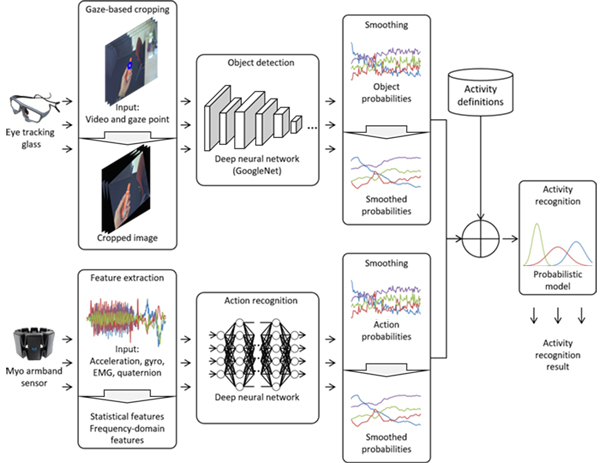

In this study, we use two wearable sensors, namely an armband sensor and eye-tracking glasses (ETG), for recognizing the basic actions and basic objects, respectively. Figure 1 shows an overview of the proposed model. Here, “action” is defined as a simple motion of body parts such as “raise an arm”, while “activity” is defined as a complex behavior that typically involves interaction with an object, such as “tighten screw” or “open a bottle”. This study introduces a DNN-based action recognition method that uses the armband sensor and a gaze-guided object recognition method that uses the ETG.

Figure 1: System overview. The object recognition module takes gaze-guided egocentric video and outputs the probabilities of basic objects. The action recognition module takes multi-modal armband signals and outputs the probabilities of basic actions. The activity recognition module processes these probabilities to output the activity label.
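To make the compositional idea more concrete, here is a minimal Python sketch of how an activity label could be derived from the two probability outputs described above. It is an illustration only, not the method from our paper: the function `recognize_activity`, the `ACTIVITIES` table, the basic action "twist", and the scoring rule (multiplying the action probability by the object probability, which treats the two as independent) are all assumptions made for this example.

```python
from typing import Dict, Tuple

# Hypothetical activity definitions: each activity is described only by an
# (action, object) pair, so no activity-level training data is involved.
ACTIVITIES: Dict[str, Tuple[str, str]] = {
    "tighten screw": ("twist", "screwdriver"),
    "open a bottle": ("twist", "bottle"),
    "lift a box": ("raise an arm", "box"),
}


def recognize_activity(
    action_probs: Dict[str, float],
    object_probs: Dict[str, float],
    activities: Dict[str, Tuple[str, str]],
) -> Tuple[str, Dict[str, float]]:
    """Score every defined activity and return the best label with all scores.

    Assumption for this sketch: the score of an activity is the product of
    the probabilities of its action and its object.
    """
    scores = {
        name: action_probs.get(action, 0.0) * object_probs.get(obj, 0.0)
        for name, (action, obj) in activities.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Illustrative module outputs (made-up numbers, not experimental data).
action_probs = {"twist": 0.7, "raise an arm": 0.3}
object_probs = {"screwdriver": 0.6, "bottle": 0.3, "box": 0.1}

label, scores = recognize_activity(action_probs, object_probs, ACTIVITIES)
print(label)
```

In this sketch, supporting a new activity only requires adding one entry to `ACTIVITIES`, as long as its action and object are already among the basic ones; neither recognition module needs to be retrained.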

We tested the effectiveness of the proposed method using pseudo-industrial data. The experimental results showed that the accuracy of the two basic recognition methods was reasonably high, and that the activity recognition method built on these two basic modules achieved about 80% in both precision and recall. This performance was comparable to that of a baseline method based on supervised learning (i.e., trained with activity data), even though our method did not use any training data for the activities.

For more details, we encourage you to read our paper presented at the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018).

This research was conducted in collaboration with the German Research Center for Artificial Intelligence (DFKI) while the author was an employee of Hitachi Europe GmbH. We thank everyone who contributed to this research, especially those at DFKI and Hitachi Europe.