30 November 2020

Shuyang Dou

Research & Development Center, Hitachi Asia Ltd.

The market for intelligent transportation systems (ITS) is growing quickly because of the enormous innovations in recent years based on artificial intelligence (AI) technology, especially in the area of video analytics. In general, ITS contains the functions of sensor monitoring, incident detection, object recognition, etc., and vehicle detection is a common task, especially within a vision based ITS. In this blog, I will introduce our investigation into the performance of vehicle detection by utilizing RGB-D sensor data collected by a stereo camera.

Why are we tackling the problem of vehicle detection when there are already many sophisticated approaches? Well, the main reason is that complex real-world situations present some challenges to existing methods that were developed in a simple experimental environment. For example, lighting conditions can be quite poor when the sky is heavily clouded, or during early morning/evening. Under poor lighting conditions, the captured video data contain relatively less information and just as it is for the naked eye, this can affect vehicle detection performance. To compensate the limitation of RGB sensors under such poor lighting condition, we employed a depth sensor to provide additional information.

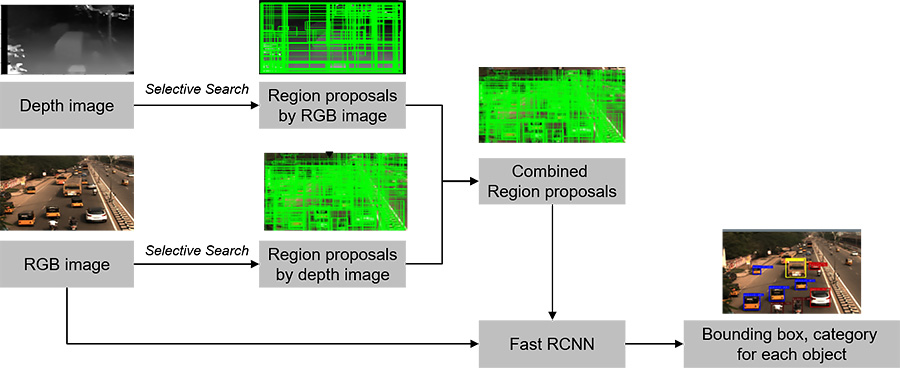

Figure 1: Architecture of our proposed RGB-D vehicle detection approach

So, how can we utilize both the RGB data and depth data for vehicle detection? This will depend on the architecture of the algorithm. We chose a region-based detection method, Fast RCNN [1] as our backbone. In a region-based approach, first we generate many region proposals (candidates of regions) from an input image using algorithms such as the Selective Search method [2], and then we check each region to see whether it contains any object or not by evaluating the image features within the region. Usually, image features are extracted by some Convolution Neural Network (CNN), such as VGG16 [3] or ResNet50 [4], etc. It is obvious that in a region-based approach, the region proposal step is quite important because if we miss some regions here, there will be no opportunity to regain them in later steps. Under poor lighting conditions, we are likely to miss some regions due to lack of RGB sensor data in such regions. Thus, in our approach (Figure 1), we extracted region proposals from both RGB image and depth image to avoid the “region missing” problem. The extracted region proposals and RGB image are then fed into the Fast RCNN network to generate the detection results (bounding box and category information for each object).

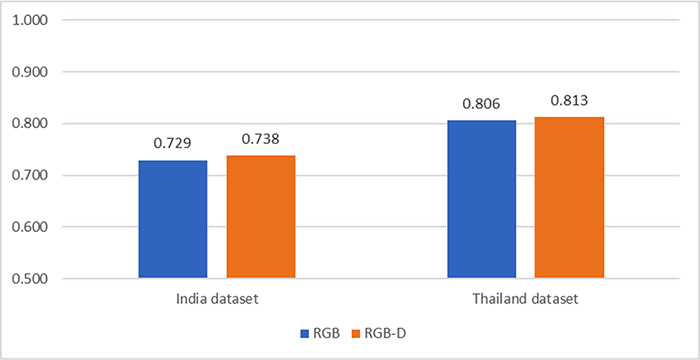

Figure 2: Mean average precision (mAP) for the two datasets

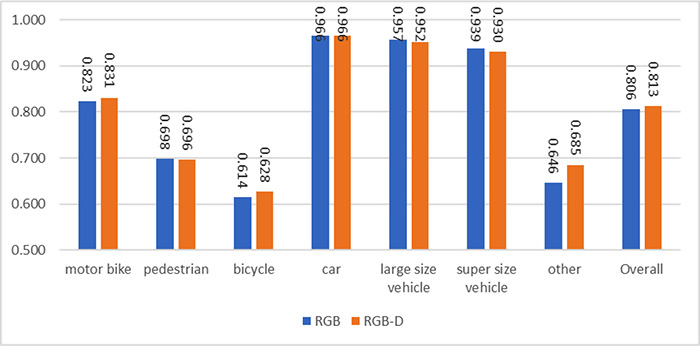

Figure 3: mAP for each vehicle category in Thailand dataset

We tested our approach on two datasets that we collected in India and Thailand. The experimental results (Figure 2 and Figure 3) show that our RGB-D approach outperform the traditional RGB approach in most vehicle categories, particularly for small size vehicles (e.g., motor bike, bicycle). This is because small vehicles are more likely to be missed with poor lighting condition than large size vehicles.

We are planning to conduct more experiments to investigate the relationship between vehicle size and detection performance.

Quite often when we apply the cutting-edge AI technologies to the real-world, challenges arise due to the complexity of the real-world environment, such as the poor lighting condition in our case. We believe that these real-world challenges implicitly state the customer needs. By tackling these challenges with our innovative ideas, we are aiming to provide valuable solutions to our customer and society.

For more details on our research, we encourage you to read our paper, “Classification of different vehicles in traffic using RGB and Depth images: A Fast RCNN Approach,” which can be accessed at https://ieeexplore.ieee.org/document/9010357.