5 February 2021

Shibashish Sen

R&D Centre, Hitachi India Pvt. Ltd.

With advances in computer vision, face recognition has become a prominent technique for personal authentication and identification in many situations. Despite these advances, however, the way typical face recognition algorithms work still makes it very difficult to achieve a high level of accuracy in real-world situations. For example, in factories and other industrial environments where face recognition may be used to monitor safety and identify workers, the workers' faces may be occluded (blocked or covered) by helmets, safety masks, goggles and other safety gear typical of such environments. The resulting misclassification of faces causes a drop in performance.

In this blog post, we outline the approach we propose for improving the face recognition algorithm so that it classifies faces correctly even when occlusions are present.

In a typical face recognition algorithm, the input to the neural network is a face image, and a Convolutional Neural Network (CNN) extracts features that describe the face, called face features. In this blog post, we extract face features using CNNs that have been pretrained to learn such discriminative face features. The output of the network, in this case the face features, acts as an identifier of a person's face: if you pass in images of the same person, the outputs will be similar, whereas if you compare the outputs for images of different people, they will be very different.
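To make "similar" and "very different" concrete, face features are commonly compared with cosine similarity. The sketch below is illustrative only: the four-dimensional vectors are hypothetical stand-ins for real CNN outputs (which are much higher-dimensional), and cosine similarity is assumed here as the comparison measure.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two face-feature vectors (1.0 = same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical low-dimensional "face features" for illustration only.
person_a_img1 = np.array([0.9, 0.1, 0.3, 0.2])
person_a_img2 = np.array([0.8, 0.2, 0.35, 0.15])
person_b_img1 = np.array([-0.2, 0.9, -0.1, 0.4])

same = cosine_similarity(person_a_img1, person_a_img2)  # two images, same person
diff = cosine_similarity(person_a_img1, person_b_img1)  # two different people
print(f"same person: {same:.3f}, different people: {diff:.3f}")
```

Two images of the same person score close to 1.0, while images of different people score near or below 0.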

Occluded face images cause a drop in face recognition performance because of the nature of their features. Compared with the features of non-occluded faces, the features of occluded face images are non-isotropic (not uniform with respect to direction) when projected into feature space. As a result, the features of an occluded face are dissimilar to those of a non-occluded face image, which causes test faces to be misclassified and recognition performance to drop.
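One common way to quantify how non-isotropic a set of feature vectors is, is to look at the eigenvalues of their covariance matrix: an isotropic cloud spreads equally in every direction, so its eigenvalues are roughly equal. The sketch below uses the ratio of the largest eigenvalue to the mean eigenvalue as a hypothetical diagnostic; it is an assumption for illustration, not necessarily the measure used in our paper, and the data are random.

```python
import numpy as np

def anisotropy_ratio(features: np.ndarray) -> float:
    """Largest covariance eigenvalue divided by the mean eigenvalue.

    Close to 1.0 for an isotropic cloud of feature vectors; grows (up to the
    feature dimension d) as variance concentrates in fewer directions.
    """
    cov = np.cov(features, rowvar=False)  # np.cov centres the data itself
    eigvals = np.linalg.eigvalsh(cov)     # real, ascending for a symmetric matrix
    return float(eigvals[-1] / eigvals.mean())

rng = np.random.default_rng(0)
isotropic = rng.normal(size=(1000, 8))               # equal spread on all 8 axes
stretched = isotropic * np.array([10.0] + [1.0] * 7)  # one dominant direction
print(anisotropy_ratio(isotropic), anisotropy_ratio(stretched))
```

The isotropic cloud gives a ratio near 1, while the stretched cloud gives a ratio approaching the dimension (8).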

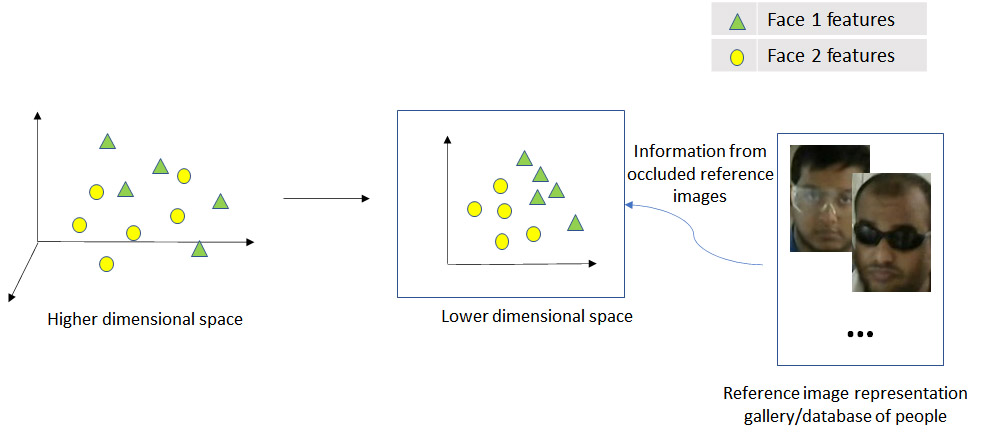

Figure 1: RCLP approach projecting outputs from higher to lower dimension space conditioned on occluded reference images. The axes here represent the dimensions of the feature space, in this case 3 and 2.

Our idea is a simple adaptation technique for recognizing such occluded face images by solving the problem of non-isotropic features. For this, we propose reference-conditioned low-rank projection (RCLP), which projects the face features into a lower-dimensional feature space as shown in Figure 1. In the higher-dimensional space, the outputs for the different faces are not well separated, so the two cannot be clearly told apart. Projecting them into a lower dimension removes noisy dimensions, which makes the outputs isotropic and more clearly distinguishable.
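As a rough illustration of the 3-D to 2-D projection in Figure 1, the sketch below fits a rank-k projection with principal component analysis (PCA) on a set of reference features and keeps the top-k directions. PCA is a stand-in we assume for illustration; the exact way RCLP conditions the projection on the reference images is described in our paper, and the function names here are hypothetical.

```python
import numpy as np

def fit_low_rank_projection(reference_feats: np.ndarray, k: int):
    """Fit a rank-k projection from reference features via PCA (SVD).

    Returns (mean, basis): basis has shape (d, k) and its orthonormal
    columns are the top-k principal directions of the reference features.
    """
    mean = reference_feats.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(reference_feats - mean, full_matrices=False)
    return mean, vt[:k].T

def project(feats: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Map d-dimensional features to k dimensions and L2-normalise each row."""
    z = (feats - mean) @ basis
    return z / np.linalg.norm(z, axis=1, keepdims=True)

rng = np.random.default_rng(1)
# Hypothetical 3-D reference features with almost no spread on the third axis.
reference = rng.normal(size=(20, 3)) * np.array([5.0, 3.0, 0.1])
mean, basis = fit_low_rank_projection(reference, k=2)
low = project(reference, mean, basis)
print(basis.shape, low.shape)  # (3, 2) (20, 2)
```

The low-variance third axis plays the role of a "noisy dimension" and is discarded; normalising after projection lets the projected features be compared with cosine similarity.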

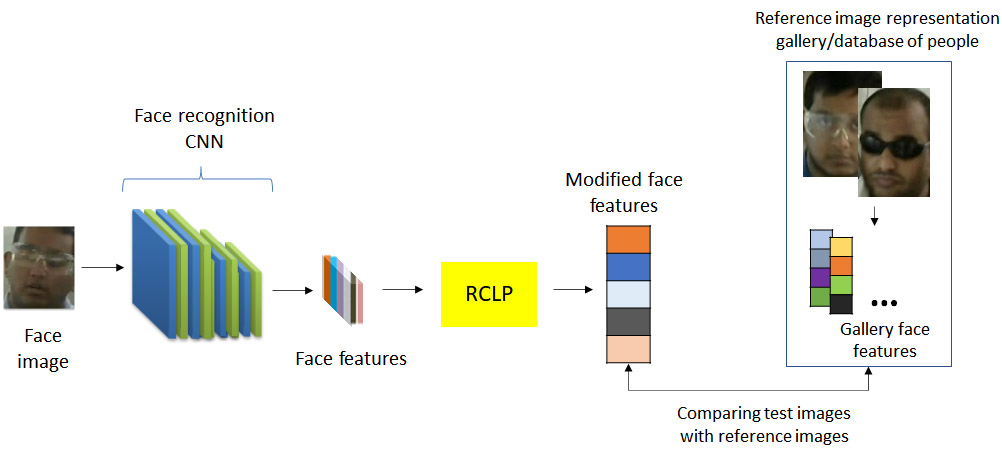

Figure 2: Algorithm of proposed approach with RCLP approach improving the outputs for occluded face images

The algorithm of our approach is represented in Figure 2.

In our RCLP approach, the CNN extracts face features from the input image, and these are then projected into a lower-dimensional feature space. This feature space is conditioned on goggled reference face images, which means that information from the reference images (which are goggled face images) is used to better align the features in this lower dimension. Such a projection gives better-separated representations while also taking occluded faces into account. This in turn reduces the chance of misclassification when the transformed output is matched against the reference images in the gallery to identify the face labels.
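The matching step above can be sketched end to end: fit a projection on the gallery of goggled reference features, project both gallery and query, and return the label of the most similar gallery entry. This is a minimal sketch assuming features have already been extracted by a CNN; PCA again stands in for the reference-conditioned projection, and `identify`, the labels and the synthetic data are hypothetical.

```python
import numpy as np

def fit_projection(reference_feats: np.ndarray, k: int):
    """PCA stand-in (assumption) for the reference-conditioned projection."""
    mean = reference_feats.mean(axis=0)
    _, _, vt = np.linalg.svd(reference_feats - mean, full_matrices=False)
    return mean, vt[:k].T

def to_subspace(feats: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Project into the k-dim subspace and L2-normalise along the last axis."""
    z = (feats - mean) @ basis
    return z / np.linalg.norm(z, axis=-1, keepdims=True)

def identify(query_feat, gallery_feats, gallery_labels, k):
    """Return the gallery label whose projected feature best matches the query."""
    mean, basis = fit_projection(gallery_feats, k)
    g = to_subspace(gallery_feats, mean, basis)
    q = to_subspace(query_feat[None, :], mean, basis)[0]
    scores = g @ q  # cosine similarities, since both sides are unit-norm
    return gallery_labels[int(np.argmax(scores))]

# Hypothetical gallery: three goggled reference features per worker, 6-D.
rng = np.random.default_rng(2)
centre_a = np.array([3.0, 0, 0, 0, 0, 0])
centre_b = np.array([0, 3.0, 0, 0, 0, 0])
gallery = np.vstack([centre_a + 0.3 * rng.normal(size=(3, 6)),
                     centre_b + 0.3 * rng.normal(size=(3, 6))])
labels = ["worker_A"] * 3 + ["worker_B"] * 3

query_a = centre_a + 0.3 * rng.normal(size=6)
query_b = centre_b + 0.3 * rng.normal(size=6)
print(identify(query_a, gallery, labels, k=2), identify(query_b, gallery, labels, k=2))
```

Fitting the projection on the gallery itself is one simple way to let the occluded references shape the subspace; a real system would fit it once and reuse it for every query.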

We carried out experiments with the RCLP algorithm; the results are presented in Table 1. We tested our proposed approach on an internal goggled dataset and on external open datasets [1-3] augmented with goggles. The experiments were conducted with two of the most popular face recognition models, VGGFace [4] (widely used) and ArcFace [5] (current state of the art), which use different CNN architectures. Figure 3 shows example images from the internal dataset.
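The post does not describe how the open datasets were augmented with goggles. Purely as an illustration of synthetic occlusion, the sketch below darkens a rectangular band over the eye region of an aligned face crop. The function name, the band position (fractions of the image size) and the fill value are all hypothetical; realistic augmentation would more likely overlay goggle images placed using facial landmarks.

```python
import numpy as np

def add_synthetic_goggles(face: np.ndarray, top: float = 0.30, bottom: float = 0.45,
                          left: float = 0.15, right: float = 0.85,
                          value: int = 20) -> np.ndarray:
    """Return a copy of an aligned face crop with a dark band over the eyes.

    face: H x W (grayscale) or H x W x C uint8 array.
    top/bottom/left/right: band edges as fractions of height/width
    (hypothetical defaults assuming centred, aligned faces).
    value: intensity used to fill the "lens" band.
    """
    out = face.copy()  # leave the original image untouched
    h, w = face.shape[:2]
    r0, r1 = int(round(top * h)), int(round(bottom * h))
    c0, c1 = int(round(left * w)), int(round(right * w))
    out[r0:r1, c0:c1, ...] = value
    return out

face = np.full((112, 112, 3), 200, dtype=np.uint8)  # dummy bright image
goggled = add_synthetic_goggles(face)
print(goggled[40, 56], goggled[80, 56])  # [20 20 20] [200 200 200]
```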

Figure 3: Example images from the internal goggled dataset

Table 1: Results (Rank-1 Accuracy %) of experiments using reference conditioned low rank projections with synthetically occluded reference images of public datasets

| Dataset | VGGFace | ResNet ArcFace |
|---|---|---|
| ATT | 86.31 | 99.67 |
| Essex | 92.00 | 98.72 |
| FEI | 79.49 | 99.38 |
| GT | 85.47 | 99.85 |
| CFP | 34.1 | 87.26 |
| LFW | 23.34 | 88.14 |
| Internal | 84.5 | 99.28 |

Compared with the typical (baseline) algorithm, we obtained improved results on every dataset with at least one of the two pretrained face recognition models. Overall, we obtained an average improvement of 0.77% and a maximum improvement of 4.25%, on the FEI dataset.
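Table 1 reports Rank-1 accuracy: the percentage of query images whose single most similar gallery entry carries the correct identity. The sketch below computes it with cosine similarity on made-up two-dimensional features; the function name and data are hypothetical and the numbers are unrelated to Table 1.

```python
import numpy as np

def rank1_accuracy(query_feats, query_labels, gallery_feats, gallery_labels) -> float:
    """Percentage of queries whose top-ranked gallery entry has the correct label."""
    q = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    best = np.argmax(q @ g.T, axis=1)  # index of the most similar gallery entry
    predicted = np.asarray(gallery_labels)[best]
    return 100.0 * float(np.mean(predicted == np.asarray(query_labels)))

gallery = np.array([[1.0, 0.0], [0.0, 1.0]])
gallery_labels = ["A", "B"]
queries = np.array([[0.9, 0.1], [0.2, 0.8], [0.7, 0.3], [0.6, 0.4]])
query_labels = ["A", "B", "B", "A"]  # the third query is matched to the wrong person
print(rank1_accuracy(queries, query_labels, gallery, gallery_labels))  # 75.0
```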

By improving face recognition technology, we hope to contribute to higher-performance worker identification and safety monitoring applications that raise worker health and safety in environments where the required safety equipment results in occluded images. In our work, we addressed the issue of non-isotropic face features in occluded face images by projecting the face features into a lower-dimensional subspace conditioned on goggled face images. We found that projecting the features into a lower-dimensional space makes the embeddings isotropic even with a small number of goggled samples. We believe there is still room to improve the face features further, as we observed a large accuracy gap between VGGFace and ArcFace, and we plan to explore solutions that combine a re-ranking approach with low-rank projection. For more details, we encourage you to read our paper, which was presented at the IEEE IAPR [6].

*If you would like to find out more about activities at Hitachi India Research & Development, please visit our website.*