30 March 2021

Anthony Emeka Ohazulike

Hitachi Europe Research and Development Centre, Hitachi Europe, Ltd.

Autonomous driving (AD) cars are no longer mere technology hype. They are here with us, and their adoption will continue to grow. Tesla and a few other car manufacturers are leading the aggressive adoption of AD in urban areas. Although AD is growing in popularity, there have been many safety concerns around it, and these have led some organisations, such as Uber, to scale back or sell off their internal autonomous driving projects. While it is natural that we all want AD cars to be very safe, have you ever wondered how you would want your self-driving car to drive? Ideally, everyone would say, “like me!” Or perhaps only people who consider themselves “good drivers” would say “let it drive like me,” but I imagine that most people who drive would consider themselves a “good driver.”

Setting aside the question of whether one is a good driver, I think we can all agree that the art of driving relies a lot on the eye of the driver. Though we might not all agree on who is a “good driver,” as this is not really measurable, it is possible for us to agree on a definition of safe driving. All AD developers are therefore focusing on how to make AD cars safe. This is, however, a very challenging task.

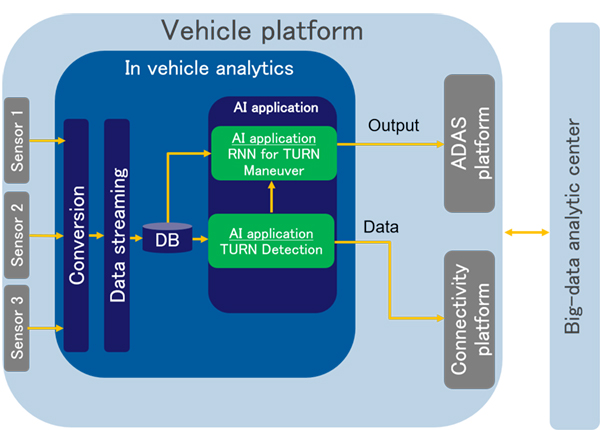

So, let us say that I am a very safe driver. How can engineers make my AD car drive as if I were driving? To gain insight into this question, we started working on very practical use cases. Different drivers were asked to drive along the same route repeatedly. We then analysed the data to understand which driving patterns are common to all drivers and which are peculiar to each driver. Figure 1 shows the overall architecture of our analytics platform.

Figure 1: The analytic platform.

The experiment

The drivers were asked to drive repeatedly along a fixed route with many left and right turns. We then analysed their driving behaviour both on the straight sections of the road and while they were making the turns.

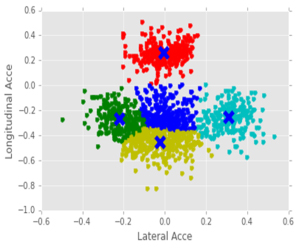

We found that the driving patterns of the five drivers were different (see the K-means clusters in Figure 2). We noticed that the drivers’ deceleration patterns differ as they approach the turns, as do their steering wheel angles and positions in the lane. For each driver, these parameters are consistent over the entire drive. The green, yellow, navy and sky blue clusters in Figure 2 show the deceleration pattern as the drivers approach the turns, and the lateral acceleration as they take the left or right turns. Having different clusters means that driving behaviour differs in those longitudinal decelerations and lateral movements. The acceleration pattern just after the turn (red dots) showed some similarity across all drivers.

The question then was, how can we teach the car to understand the individual turn manoeuvres performed by the five different drivers?

Figure 2: The K-means clusters of longitudinal and lateral acceleration of 5 drivers just before, during and after the turn events.
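To make the clustering idea concrete, here is a minimal sketch of K-means on (longitudinal, lateral) acceleration pairs. It is not our analytics platform: the sample values, the `kmeans` and `nearest` helpers, and the choice of three starting centroids are all assumptions made purely for illustration.

```python
def nearest(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: (point[0] - centroids[i][0]) ** 2
        + (point[1] - centroids[i][1]) ** 2,
    )


def kmeans(points, init, max_iter=100):
    """Plain Lloyd's K-means on 2-D points, starting from the centroids in init."""
    centroids = list(init)
    for _ in range(max_iter):
        # Assignment step: attach every sample to its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for i, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                updated.append((
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                ))
            else:
                updated.append(old)  # keep an empty cluster where it was
        if updated == centroids:
            break  # converged: centroids no longer move
        centroids = updated
    return centroids, [nearest(p, centroids) for p in points]


# Hypothetical samples in m/s^2: (longitudinal acceleration, lateral acceleration).
samples = [
    (-2.6, 0.1), (-2.4, -0.1), (-2.5, 0.0),  # braking before a turn
    (-0.4, 3.1), (-0.6, 2.9), (-0.5, 3.0),   # cornering
    (1.4, 0.4), (1.6, 0.6), (1.5, 0.5),      # accelerating after the turn
]

centroids, labels = kmeans(samples, init=[samples[0], samples[3], samples[6]])
print(centroids)
print(labels)
```

Running the same procedure per driver and comparing where the centroids land is one simple way to see that two drivers brake or corner differently.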

Teach the car to drive like me

We therefore trained a machine learning (ML) algorithm, or if you like an artificial intelligence (AI) model, to understand how each driver performs the turn manoeuvres. The model is based on the long short-term memory (LSTM) principle, where the model remembers part of the previous events and uses that to estimate the next series of events. To check whether our trained models had learnt the turn manoeuvres of the drivers, we asked the model to predict future events: whether the driver would turn left, turn right or go straight.
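For readers curious about what “remembering part of previous events” means in practice, the sketch below shows one step of a single-unit LSTM cell using the standard gate equations. It is only an illustration of the principle, not our trained model: the weights in `params`, the input sequence and the `lstm_step` helper are hypothetical, and a real turn predictor would add a classification layer on top for left, right and straight.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell.

    x is a list of input features; h_prev and c_prev are the previous
    hidden state and cell (memory) state. params maps each gate name to
    (input weights, recurrent weight, bias).
    """
    def gate(name, activation):
        w, u, b = params[name]
        z = sum(wi * xi for wi, xi in zip(w, x)) + u * h_prev + b
        return activation(z)

    f = gate("forget", sigmoid)        # how much old memory to keep
    i = gate("input", sigmoid)         # how much new information to store
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much memory to expose

    c = f * c_prev + i * g             # updated memory
    h = o * math.tanh(c)               # updated hidden state
    return h, c


# Hypothetical, untrained weights: (input weights, recurrent weight, bias).
params = {
    "forget": ([0.0, 0.0], 0.0, 2.0),
    "input": ([1.0, 1.0], 0.0, 0.0),
    "candidate": ([0.5, 1.0], 0.5, 0.0),
    "output": ([0.0, 0.0], 0.0, 1.0),
}

# Hypothetical (longitudinal, lateral) acceleration samples approaching a turn.
sequence = [(-2.0, 0.0), (-1.0, 1.5), (0.0, 3.0)]

h, c = 0.0, 0.0
for x in sequence:
    h, c = lstm_step(list(x), h, c, params)
    print(f"input={x} hidden={h:.3f} memory={c:.3f}")
```

The memory value `c` carries information from earlier samples into later steps, which is what lets such a model use the approach to a turn when estimating what comes next.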

Applause and standing ovation to AI!!

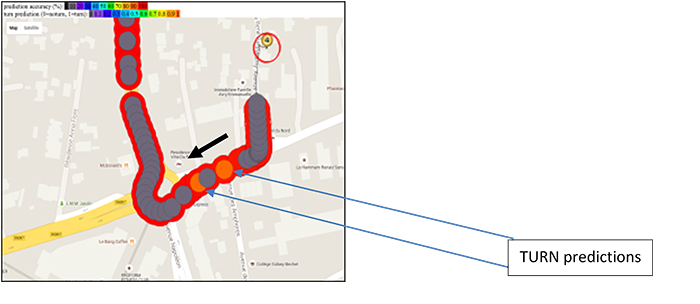

It was astonishing to see how well the AI learnt each driver’s behaviour and was able to predict the turns before they happened (interested readers, please read our full paper for more details). This means that AI algorithms can provide the driver’s preferred driving parameters to the control system of the car ahead of the planned route in real time, so that the AD car can drive just as the driver would naturally. During the early development stages, we installed the first trained model in a colleague’s car, and he then drove home after work. Note that this route was not part of the training data in any way, so it was the first time the algorithm was applied to it. The tracking (GNSS) and turn prediction data were transferred to a screen in my laboratory in real time, and my remaining colleagues and I sat and watched the algorithm do its work. As the car approached the first turn, BOOM! The algorithm correctly predicted the turn 3.5 seconds before the car turned right (see Figure 3). The lab exploded with applause and a standing ovation for the AI, and everyone was excited.

One by one, the AI predicted the turns as the car approached my colleague’s home, and each correct prediction was followed by applause in the lab. The accuracy of prediction was as expected. Every wrong prediction was immediately fed back into the learning machine to update the prediction model on the fly, which improved the model and made it more robust to changing driving behaviours.

Figure 3: Turn prediction for a colleague as he drives home.
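The idea of feeding every wrong prediction straight back into the model can be illustrated with a much simpler learner than an LSTM. The sketch below uses a mistake-driven multiclass perceptron as a stand-in; it is not the model from our experiment. The `OnlineTurnPredictor` class, the two features and the three example events are all hypothetical.

```python
CLASSES = ("left", "straight", "right")


class OnlineTurnPredictor:
    """Multiclass perceptron that only changes when it predicts wrongly."""

    def __init__(self, n_features, learning_rate=1.0):
        self.weights = {c: [0.0] * n_features for c in CLASSES}
        self.learning_rate = learning_rate

    def predict(self, features):
        scores = {
            c: sum(w * x for w, x in zip(self.weights[c], features))
            for c in CLASSES
        }
        # Ties go to the first class in CLASSES.
        return max(CLASSES, key=lambda c: scores[c])

    def feed_back(self, features, actual):
        """Predict, then update the weights on the fly if the prediction was wrong."""
        predicted = self.predict(features)
        if predicted != actual:
            for j, x in enumerate(features):
                self.weights[actual][j] += self.learning_rate * x
                self.weights[predicted][j] -= self.learning_rate * x
        return predicted


# Hypothetical features per event: [steering trend, constant bias term].
events = [
    ([1.0, 1.0], "right"),
    ([-1.0, 1.0], "left"),
    ([0.0, 1.0], "straight"),
]

predictor = OnlineTurnPredictor(n_features=2)
for lap in range(5):
    mistakes = sum(predictor.feed_back(x, actual) != actual for x, actual in events)
    print(f"lap {lap + 1}: {mistakes} wrong prediction(s)")
```

Because correct predictions leave the weights untouched, the model only moves when the driver’s behaviour surprises it, which is the property that makes this kind of update cheap enough to run during a drive.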

What did we learn?

We assume that the majority of drivers are more comfortable with an AD car if it drives as they would naturally. Our experiments demonstrated that it might be difficult to have a single AD car model that captures the driving style of all drivers. To address this, we discussed how we could train an AI to learn, predict and adapt to selected human driving patterns.

Our work showed the potential of having an AD car drive in a similar way to the driver of the car. Apart from the comfort such a system offers, human driving patterns or profiles can be ported from one AD car to another, for example to a rented AD car.

The potential of this technology does not need to wait for fully automated cars to go live. It is already applicable in advanced driver-assistance systems (ADAS). For example, when the system’s prediction of a driver’s action is combined with information from side sensors such as cameras or lidar, the system can warn the driver of traffic approaching from the left or right. Another potential application is the automatic preparation of brake systems and vehicle dynamics for an upcoming event or action to be performed by a driver.
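The warning use case above could look roughly like the sketch below. Everything in it is an assumption for illustration: the `SideObject` fields, the `turn_warning` function and the 3.5-second horizon (borrowed from the prediction lead time we observed) do not describe an existing ADAS interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SideObject:
    """An object reported by a side sensor (hypothetical fields)."""
    side: str                 # "left" or "right"
    distance_m: float         # current distance to the object
    closing_speed_mps: float  # positive when the object is approaching


def turn_warning(predicted_turn: str,
                 objects: List[SideObject],
                 horizon_s: float = 3.5) -> Optional[str]:
    """Warn if traffic on the predicted turn side would arrive within horizon_s."""
    if predicted_turn not in ("left", "right"):
        return None  # going straight: no side warning needed
    for obj in objects:
        if obj.side != predicted_turn or obj.closing_speed_mps <= 0:
            continue  # wrong side, or not approaching
        time_to_arrival = obj.distance_m / obj.closing_speed_mps
        if time_to_arrival <= horizon_s:
            return (f"Warning: traffic approaching from the {obj.side} "
                    f"in {time_to_arrival:.1f} s")
    return None


cyclist = SideObject(side="right", distance_m=20.0, closing_speed_mps=10.0)
print(turn_warning("right", [cyclist]))
print(turn_warning("left", [cyclist]))
```

The point of the sketch is the ordering: the predicted action comes first, so the sensor data only has to be checked on the side where the driver is expected to go.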

As AD cars are inevitable, we will continue to develop supporting technologies and functionalities for AD. The future is here!

For more detailed reading, please see our publication here: https://trid.trb.org/view/1494528.