20 July 2021

Manikandan Ravikiran

R&D Centre, Hitachi India Pvt Ltd.

Safety gear such as hardhats, vests, gloves, and goggles plays an important role in preventing injuries and accidents in work environments. Moreover, monitoring the use of safety gear improves compliance with safety rules and regulations, which in turn further prevents unnecessary injuries and accidents. Industries are therefore looking to adopt automatic safety monitoring solutions based on video analytics.

However, these automatic solutions still face challenges when deployed across varying work environments such as foundries, rolling mills, and assembly lines, because the scenes differ from one environment to another. Two challenges are notable: (i) correctly identifying specific safety gear, and (ii) identifying the safety gear consistently in all the images of a streaming video, where failures show up as “flip-flopping”. Reducing these errors is vital for preventing serious accidents and can improve the usability of such solutions.

In this blog, we present how we approached these challenges by developing and validating an end-to-end deep learning solution for industrial safety gear monitoring.

As mentioned above, the conditions in a work environment can vary quite a bit. To cater for such diverse work environments, we developed a solution using (a) improved deep learning models and (b) Re-ID conditioning frameworks, each of which is presented below. Together, these modifications reduce the errors mentioned above by 4% under limited lighting conditions and 3% under varying postures of workers.

Typically, in an industrial environment, workers wear safety gear according to the needs of each working zone. In some zones they may need to wear safety jackets, while in others the safety jacket may not be mandatory.

Existing visual solutions tackle this by first localizing an area where a worker with safety gear is probably present, and then classifying whether the safety gear is indeed worn by the worker. Typically, the latter prediction is made by assigning a ‘score’, where a score greater than 0.5 suggests that the worker is indeed wearing the safety gear.
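As a minimal sketch of this scoring rule, the snippet below applies the 0.5 threshold to two detections. The `Detection` structure, its field names, and the sample values are hypothetical and exist only for illustration; only the threshold itself comes from the description above.

```python
from dataclasses import dataclass

SCORE_THRESHOLD = 0.5  # threshold described in the text


@dataclass
class Detection:
    """One localized region with a classification score (hypothetical structure)."""
    label: str                      # e.g. "helmet"
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
    score: float                    # classifier confidence in [0, 1]


def is_worn(det: Detection, threshold: float = SCORE_THRESHOLD) -> bool:
    """Return True when the score is strictly greater than the threshold."""
    return det.score > threshold


# Made-up sample detections for one worker.
detections = [
    Detection("helmet", (40, 10, 90, 60), 0.91),
    Detection("jacket", (30, 60, 110, 200), 0.42),
]
decisions = {d.label: is_worn(d) for d in detections}
print(decisions)
```

With a hard threshold like this, a jacket that is actually worn but scored at 0.42 is reported as missing, which is why the quality of the score matters so much.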

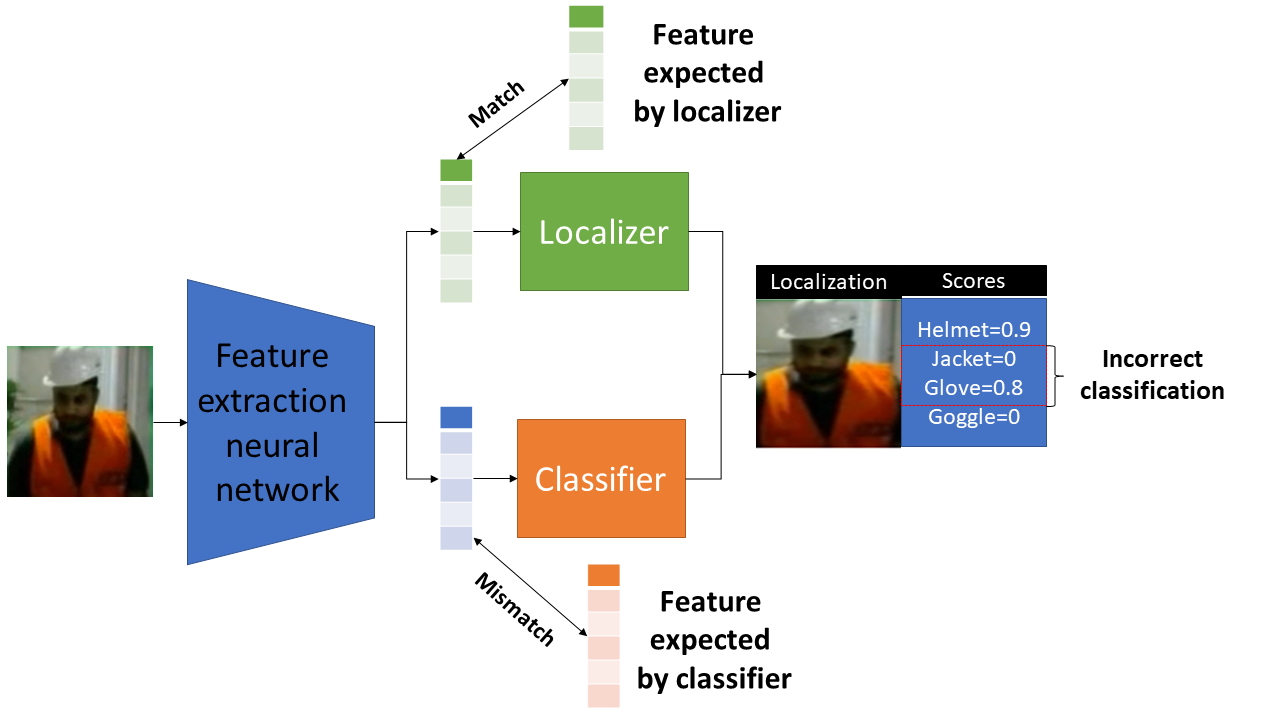

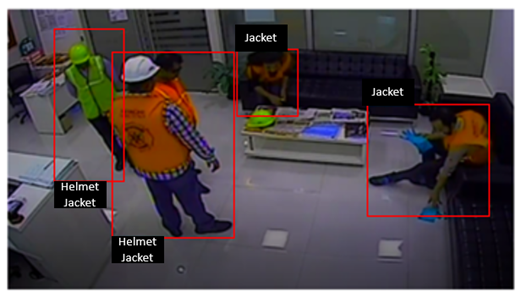

Figure 1: Conflicting features and issue of incorrect scoring during classification of safety gears.

This can, however, be problematic, as the models used in these monitoring solutions suffer from wrongly assigning a low score when they are not confident, or vice versa (Figure 1). This typically happens because the image features used for localization and for classification of worker safety gear conflict with each other in these models.

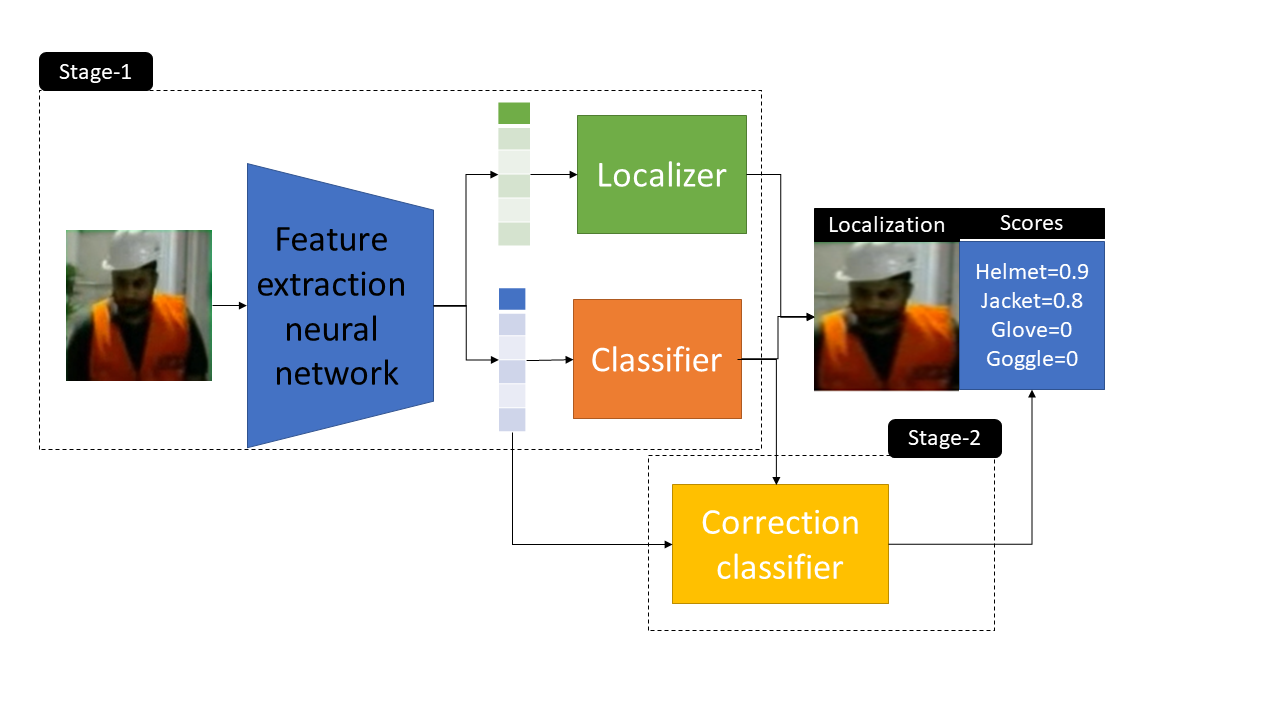

To solve this problem of conflicting features, we improve deep learning model training by using multistage decoupled classification refinement (Figure 2). We first train the Stage-1 model, which localizes the worker with low ‘scores.’ We then use the localized safety gear with low scores to train a correction classifier in the Stage-2 model, which improves the correct scores and decreases the wrong ones, thus reducing the overall error.

Figure 2: Multistage Decoupled Classification Refinement (MDCR) for localization and classification.
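The two-stage idea can be sketched roughly as follows. This is an illustration under stated assumptions, not the implementation from the paper: the `Detection` tuple, the `low_score_cutoff` value, the toy Stage-2 scorer, and in particular the rule for combining the two scores (a plain average) are all assumptions, since the post does not say how the stages are combined at inference time.

```python
from typing import Callable, NamedTuple


class Detection(NamedTuple):
    label: str
    crop_id: str   # stand-in for the cropped image region
    score: float   # Stage-1 classification score


def refine_scores(
    detections: list[Detection],
    stage2_score: Callable[[Detection], float],
    low_score_cutoff: float = 0.7,
) -> list[Detection]:
    """Re-score only the detections that Stage-1 was unsure about.

    Assumption: the refined score is the mean of the Stage-1 and Stage-2
    scores. The post does not specify the combination rule.
    """
    refined = []
    for det in detections:
        if det.score < low_score_cutoff:
            new_score = 0.5 * (det.score + stage2_score(det))
            det = det._replace(score=new_score)
        refined.append(det)
    return refined


def toy_stage2(det: Detection) -> float:
    """Toy stand-in for a trained Stage-2 correction classifier."""
    lookup = {"crop_a": 0.9, "crop_b": 0.1}
    return lookup.get(det.crop_id, det.score)


stage1 = [
    Detection("jacket", "crop_a", 0.4),   # worn jacket, under-scored by Stage-1
    Detection("jacket", "crop_b", 0.6),   # false alarm, over-scored by Stage-1
    Detection("helmet", "crop_c", 0.95),  # confident, left untouched
]
refined = refine_scores(stage1, toy_stage2)
for det in refined:
    print(det.label, det.crop_id, round(det.score, 2))
```

In this toy run the under-scored jacket moves above 0.5 and the false alarm drops below it, which is the behaviour the correction classifier is meant to produce.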

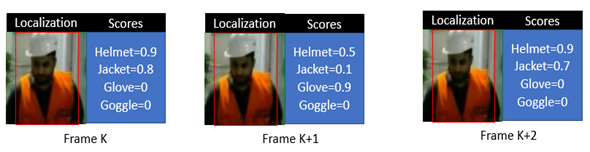

Figure 3: Example of flip-flopping, where the model used in the solution fails to identify safety gear correctly across consecutive frames even though the frames are very similar.

As mentioned previously, “flip-flopping” is a common issue in video analytics-based monitoring solutions: in a continuous video, the system correctly localizes and classifies safety gear in one frame and fails in the next (Figure 3). This happens mainly because varying environmental conditions such as lighting, posture, and camera position affect the features used by the algorithms. We solved this problem by identifying safety gear in consecutive frames using a re-identification strategy and merging the results across those frames.

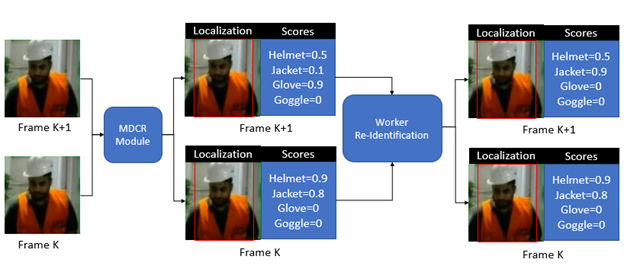

Figure 4: Reducing flip-flopping with a Re-ID conditioned Sequential Detector.

More specifically, we did this in three stages (Figure 4). First, we localized and identified workers with their safety gear in the current frame. Next, we found the corresponding workers in the previous frame through re-identification [1]. Finally, we merged the results for these re-identified workers to reduce flip-flopping.
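The three stages can be sketched in a few lines. Everything specific here is an assumption made for illustration: workers are represented as dictionaries with a hand-written appearance `embedding` (a real system would take it from a Re-ID network such as the one in [1]), matching uses cosine similarity with a hypothetical `sim_threshold`, and merging is a weighted average of the previous and current gear scores. The post does not state which matching or merging rule was actually used.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_and_merge(prev, curr, sim_threshold=0.8, weight=0.5):
    """Blend each current worker's gear scores with its best previous match.

    prev, curr: lists of dicts with 'embedding' and 'gear' (label -> score).
    Note: this greedy matching does not enforce one-to-one assignment.
    """
    merged = []
    for worker in curr:
        # Stage 2: re-identify the worker in the previous frame.
        best = max(
            prev,
            key=lambda p: cosine(p["embedding"], worker["embedding"]),
            default=None,
        )
        gear = dict(worker["gear"])
        if best is not None and cosine(best["embedding"], worker["embedding"]) >= sim_threshold:
            # Stage 3: merge scores of the re-identified worker.
            for label, prev_score in best["gear"].items():
                cur_score = gear.get(label, 0.0)
                gear[label] = weight * prev_score + (1 - weight) * cur_score
        merged.append({"embedding": worker["embedding"], "gear": gear})
    return merged


# Stage 1 output (made-up): detections for the previous and current frames.
prev_frame = [{"embedding": [1.0, 0.0], "gear": {"helmet": 0.9}}]
curr_frame = [
    {"embedding": [0.98, 0.05], "gear": {"helmet": 0.3}},  # same worker, score dipped
    {"embedding": [0.0, 1.0], "gear": {"helmet": 0.2}},    # different worker, no match
]
merged = match_and_merge(prev_frame, curr_frame)
for worker in merged:
    print(worker["gear"])
```

In this toy example the first worker's helmet score is pulled back above 0.5 by the previous frame, while the unmatched worker keeps its own score.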

We tested our proposed approach by creating simulated conditions in line with industrial environments, with varying illumination and posture (see Figures 5(a) and 5(b)). Table 1 shows the results of our approach under varying illumination, and Table 2 shows the results under varying posture.

Figure 5(a): Example images from simulated dataset showing predictions with no light illumination and varying posture of workers with safety gears.

Figure 5(b): Example images from simulated dataset showing predictions with illumination and varying posture of workers with safety gears.

Table 1: Comparison of accuracy (%) of proposed approach with varying illumination.

H: Helmet, J: Jacket, GL: Glove, GO: Goggle

| Approach | Illumination | H (%) | J (%) | GO (%) | GL (%) |
|---|---|---|---|---|---|
| Without MDCR + RCF | Bright | 78 | 72 | 94 | 73 |
| Without MDCR + RCF | Dark | 72 | 63 | 92 | 73 |
| With MDCR + RCF | Bright | 82 | 82 | 91 | 77 |
| With MDCR + RCF | Dark | 81 | 81 | 90 | 77 |

Table 2: Comparison of accuracy (%) of proposed approach with varying posture.

H: Helmet, J: Jacket, GL: Glove, GO: Goggle

| Approach | Posture | H (%) | J (%) | GO (%) | GL (%) |
|---|---|---|---|---|---|
| Without MDCR + RCF | Standing | 80 | 67 | 89 | 79 |
| Without MDCR + RCF | Bending | 78 | 67 | 88 | 79 |
| Without MDCR + RCF | Sitting | 78 | 67 | 88 | 79 |
| With MDCR + RCF | Standing | 80 | 82 | 87 | 78 |
| With MDCR + RCF | Bending | 79 | 81 | 87 | 78 |
| With MDCR + RCF | Sitting | 79 | 81 | 87 | 78 |
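One way to read the two tables is to compare the average accuracy per condition with and without MDCR + RCF. The short script below does this using the values from Tables 1 and 2. Averaging the four gear classes with equal weight is an assumption made here; the post does not state how its headline figures were aggregated, so these numbers need not match them exactly.

```python
# Accuracy values (%) copied from Table 1 and Table 2.
# Column order in each list: H, J, GO, GL.
without_mdcr_rcf = {
    "Bright": [78, 72, 94, 73],
    "Dark": [72, 63, 92, 73],
    "Standing": [80, 67, 89, 79],
    "Bending": [78, 67, 88, 79],
    "Sitting": [78, 67, 88, 79],
}
with_mdcr_rcf = {
    "Bright": [82, 82, 91, 77],
    "Dark": [81, 81, 90, 77],
    "Standing": [80, 82, 87, 78],
    "Bending": [79, 81, 87, 78],
    "Sitting": [79, 81, 87, 78],
}


def mean(values: list[float]) -> float:
    """Unweighted mean across the gear classes (an assumption, see above)."""
    return sum(values) / len(values)


# Change in mean accuracy, in percentage points, per condition.
gains = {
    condition: mean(with_mdcr_rcf[condition]) - mean(without_mdcr_rcf[condition])
    for condition in without_mdcr_rcf
}
for condition, gain in gains.items():
    print(f"{condition}: {gain:+.2f} percentage points")
```

The per-class columns are also worth reading directly: the jacket column gains the most, while the goggle column drops slightly in every condition.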

To overcome the issues of (a) incorrectly identifying safety gear due to conflicting features and (b) flip-flopping, both of which arise under the varying illumination and postures that are common in industrial work environments, my colleagues and I looked at how we could develop and validate an end-to-end deep learning solution for industrial safety gear monitoring. The solution we developed employs (a) improved deep learning models and (b) Re-ID conditioning frameworks, which reduced the identification errors mentioned above by 4% under limited lighting conditions and 3% under varying postures of workers. We hope that our approach will help ensure correct usage of safety wear and thereby contribute to preventing accidents and injuries in the work environment. For more details of our work, please refer to our paper [2], which was presented at the 2019 IEEE Applied Imagery Pattern Recognition Workshop.

*If you would like to find out more about activities at Hitachi India Research & Development, please visit our website.