17 September 2021

Yuichi Sakurai

Research & Development Group, Hitachi, Ltd.

With many developed nations facing an aging society, the manufacturing industry is facing a workforce shortage. The situation has been further aggravated by the current coronavirus pandemic. As a result, maximizing efficiency and reducing loss costs have become even more pressing for ensuring supply availability and maintaining business continuity. Keeping production lines resilient and product quality compliant requires that tasks be executed reliably. To address these issues, we developed an advanced “real-time work deviation detection” technology for human tasks that utilizes deep learning. This blog is an overview of the work conducted by my colleagues and me. For more detail, I invite you to read the original paper, "Human Work Support Technology Utilizing Sensor Data," which was presented at the 2020 IEEE 7th International Conference on Industrial Engineering and Applications (ICIEA)[1].

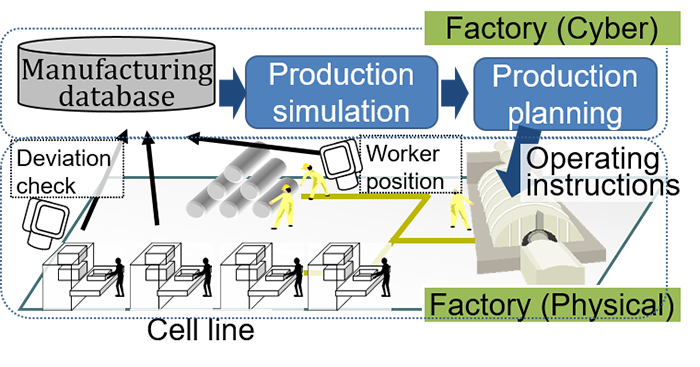

In recent years, a game-changing form of manufacturing called the smart factory, which employs the Internet of Things (IoT), has been increasingly adopted worldwide. We are currently studying a next-generation production system (Figure 1) that incorporates the cyber-physical system (CPS [2]) concept to enable sophisticated production management through the collection of on-site dynamics and production simulation in cyberspace. A new form of human-machine symbiosis called “Multiverse Mediation” has also been proposed to cope with the diversification of humans and machines [3][4].

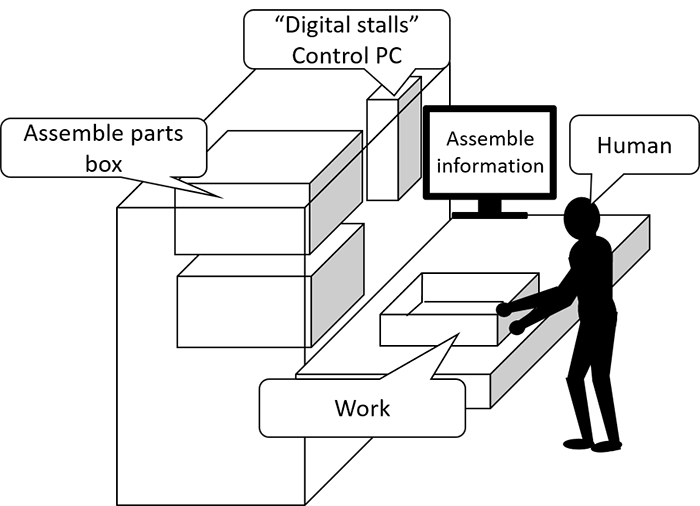

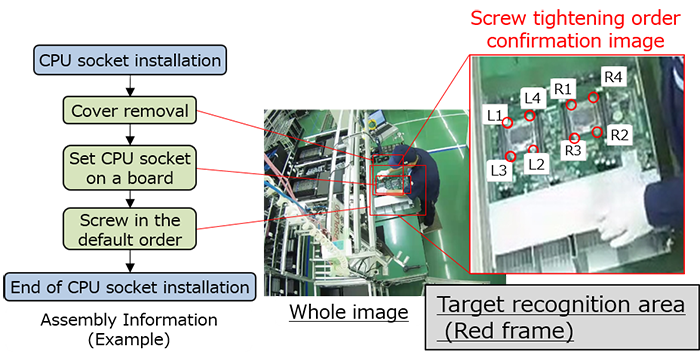

To achieve next-generation production systems and Multiverse Mediation with CPSs, 4M (huMan, Machine, Material, and Method) work transitions need to be identified and used more accurately. However, traditional systems cannot detect deviations in manual procedures. To resolve this issue, we are developing a highly accurate detection technology for “human work”. Figure 2 shows the assembly cells considered in this study.

Figure 1. Next-generation manufacturing system

Figure 2. Assembly cell

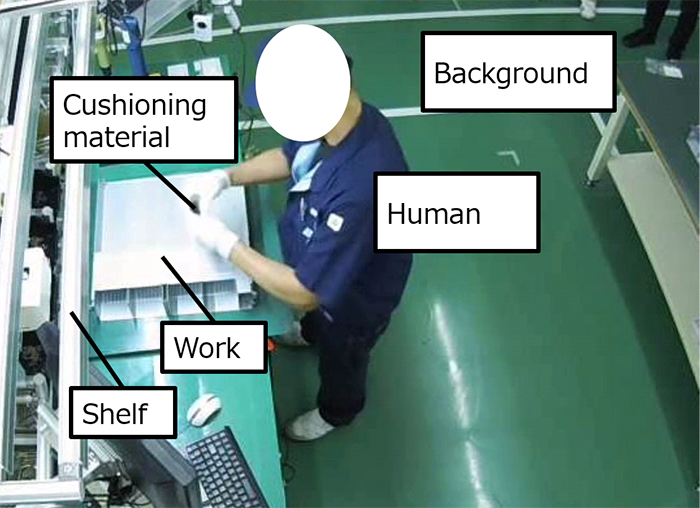

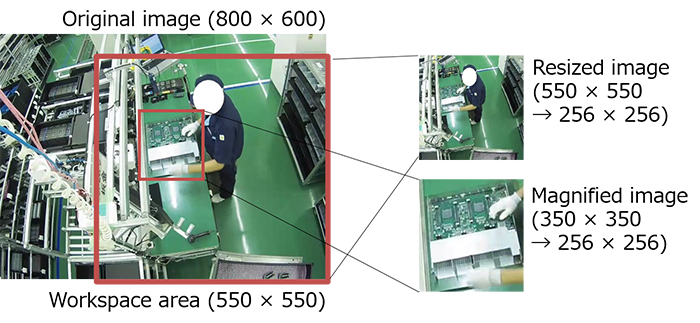

The input images used in this study came from a camera installed above the worker, with a field of view in which all the process operations can be seen (Figure 3). Work deviation detection does not always need the entire image, however. Focusing on this point, we proposed a human task state estimation method that uses a convolutional neural network (CNN) with a variable image clipping range (Figure 4).

Figure 3. Input image

Figure 4. Proposed method
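To make the idea of a variable clipping range more concrete, here is a minimal sketch in Python with NumPy. It is an illustration only, not the implementation from the paper: the function names, the `CLIP_BOXES` table, the step names, and the pixel values are all hypothetical. The sketch assumes that each process step has its own clipping box, and that the clipped region is resized (here with simple nearest-neighbour sampling) to the fixed input size a CNN would expect. The CNN itself is left out.

```python
import numpy as np

# Hypothetical clipping boxes per process step: (top, left, height, width) in pixels.
CLIP_BOXES = {
    "pick_part": (40, 60, 200, 240),
    "tighten_screw": (180, 300, 120, 160),
}


def clip_region(frame, box, out_size=(64, 64)):
    """Crop `box` from `frame` and resize the crop to `out_size`.

    `frame` is an (H, W) or (H, W, C) array. Resizing uses nearest-neighbour
    sampling so that the sketch needs nothing beyond NumPy.
    """
    top, left, height, width = box
    frame_h, frame_w = frame.shape[:2]

    # Clamp the box to the frame and keep it at least one pixel in size.
    top = max(0, min(top, frame_h - 1))
    left = max(0, min(left, frame_w - 1))
    bottom = max(top + 1, min(top + height, frame_h))
    right = max(left + 1, min(left + width, frame_w))
    crop = frame[top:bottom, left:right]

    # Map every output pixel back to a source row and column in the crop.
    out_h, out_w = out_size
    rows = np.arange(out_h) * crop.shape[0] // out_h
    cols = np.arange(out_w) * crop.shape[1] // out_w
    return crop[rows[:, None], cols]


def clip_for_step(frame, step, out_size=(64, 64)):
    """Select the clipping box for the current process step and apply it."""
    return clip_region(frame, CLIP_BOXES[step], out_size)
```

With a table like this, the same overhead frame would yield a different fixed-size CNN input depending on which process step is currently expected, for example `clip_for_step(frame, "tighten_screw")`.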

We also developed a system that interlocks with composition diagrams of the electronic equipment, drawing on prior knowledge related to production. For example, to identify additional tightening of screws, we enlarged not only the vicinity of the hand but also the vicinity of the target assembly parts. The key point is that the position to enlarge cannot be determined unless it is known in advance where the characteristic features of the work will appear in the cell for each process. Linking the clipping range to the diagram in this way reduces the effects of differences in worker physique and posture and enables highly accurate manual work detection. Figure 5 shows the link between the electronic assembly diagram and the variable image cropping.

Figure 5. Linking the proposed method with a drawing
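One way to picture the link between a drawing and the clipping range is the sketch below. It is an illustration under stated assumptions, not the system described in the paper: the `Part` class, the `part_to_box` function, and the calibration parameters `px_per_mm` and `origin_px` are hypothetical. It assumes that the drawing gives each part's position and size in millimetres, and that the overhead camera is calibrated to the cell by a simple scale and offset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    """A part as it might appear in an assembly drawing (hypothetical schema)."""
    name: str
    x_mm: float       # left edge in drawing coordinates
    y_mm: float       # top edge in drawing coordinates
    width_mm: float
    height_mm: float


def part_to_box(part, px_per_mm, origin_px=(0, 0), margin_mm=10.0):
    """Convert a part's drawing position into an image clipping box.

    Returns (top, left, height, width) in pixels. `origin_px` is the (x, y)
    pixel position of the drawing origin in the camera image, and `margin_mm`
    widens the box so that the hand and tool near the part stay in view.
    """
    origin_x, origin_y = origin_px
    left = origin_x + (part.x_mm - margin_mm) * px_per_mm
    top = origin_y + (part.y_mm - margin_mm) * px_per_mm
    width = (part.width_mm + 2 * margin_mm) * px_per_mm
    height = (part.height_mm + 2 * margin_mm) * px_per_mm
    return (round(top), round(left), round(height), round(width))


# Example: a screw location taken from a drawing (values are made up).
screw = Part(name="screw_A", x_mm=100.0, y_mm=50.0, width_mm=20.0, height_mm=10.0)
box = part_to_box(screw, px_per_mm=2.0, origin_px=(40, 30), margin_mm=5.0)
```

Because the box is derived from the drawing rather than from where the worker happens to stand, the region passed to the classifier stays the same from one worker to the next, which is the intuition behind the reduced sensitivity to physique and posture.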

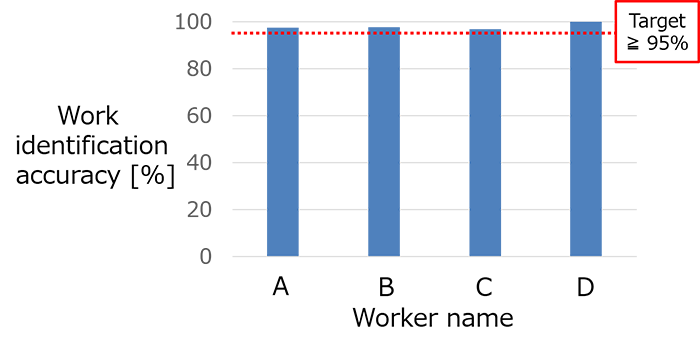

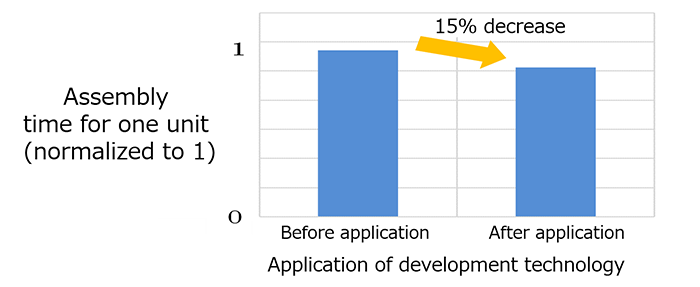

Based on the technology described above, we developed a human work support system. We then applied our prototype system to a mass production line for IT equipment and evaluated its performance. Figure 6 shows the task identification accuracy for four workers, and Figure 7 shows the measurement results of the work time.

Figure 6. Recognition accuracy results by worker

Figure 7. Assembly time results

Compared to conventional approaches, we achieved a 15% reduction in product assembly time and almost no missed deviations (more than 95% work identification accuracy). These results demonstrate the potential of our system to support manufacturing workers efficiently and effectively, and to contribute to greater efficiency and better quality management in the assembly of complex equipment.