15 December 2021

Atsuki Yamaguchi

Research & Development Group, Hitachi, Ltd.

Gaku Morio

Research & Development Group, Hitachi, Ltd.

Hiroaki Ozaki

Research & Development Group, Hitachi, Ltd.

Ken-ichi Yokote

Research & Development Group, Hitachi, Ltd.

The outbreak of COVID-19 has forced us to change the way we do things at home and at work. This is no more evident than in the increased need for automation and the use of digital online services. As we slowly accustom ourselves to the new “normal,” peripheral features that were “nice to have” are becoming “would be really useful to have.” In this blog, we would like to introduce the work we are doing in natural language processing, aiming for providing such a “handy” feature.

For many of our readers, the sight of a schedule filled with online meetings and conferences is now a familiar one. As the need for smooth running of online business activities continue to surge, we are looking at how we can apply our technology to meet the need. One area identified is the automatic generation of meeting minutes.

Using state-of-the-art natural language processing (NLP) techniques, we have developed an AI-driven automatic meeting minutes generation framework that automatically generates minutes from transcribed speech recordings in meetings. The framework was submitted for external evaluation at the First Shared Task on Automatic Minuting at Interspeech 2021 (AutoMin 2021) [1] and was able to demonstrate high performance in various human evaluation metrics, meaning it can generate a clear and comprehensive minutes. Below, we would like to share an overview of our paper [2] presented at AutoMin 2021. For more technical details, we invite you to read the original paper.

Several technical challenges exist in automatically generating meeting minutes from speech data in a meeting. Firstly, meeting minutes can have various formats, which very often differ by writer, even if they are summarizing the contents of the same meeting. This makes it difficult to employ a machine learning (ML)-based approach because we need samples with a consistent format to effectively train an ML model. Secondly, meeting recordings transcribe what is spoken so they are written in first-person and in spoken language, whereas the corresponding minutes are usually re-written in the third-person and in written language. Therefore, we need a mechanism to fill the gaps between the two. Thirdly, meeting minutes are generally well-organized (e.g., itemized), suggesting that we must not only summarize noisy speech recordings but also reorganize the summary in a well-structured and coherent way to capture the key points and have an intelligible record of the meeting.

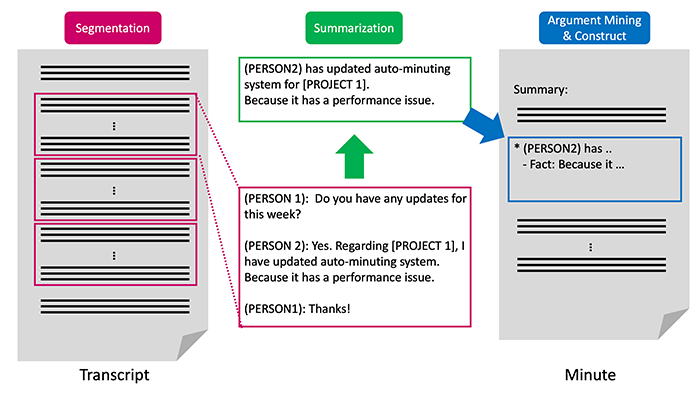

To address the challenges mentioned above, we proposed an automatic minute generation framework that consists of segmentation, summarization, and argument mining modules. Figure 1 visualizes the entire process. Usually, we need reference summaries to train a neural-network-based summarization model but one major advantage of our approach is that we do not need reference minutes to automatically generate summaries from transcripts. We utilized a pre-trained language model(1) (BART) [3] with a focus on chat dialogue summarization, allowing us to generate concise summaries written from a third-person perspective and in written language without any additional training.

Figure 1. Overview of our approach

The segmentation module splits an input transcript into topic-specific blocks, which enables us to generate a summary per topic. As even state-of-the-art neural-network-based summarization models, including BART, are not yet able to process a long transcript at once, it needs to be split into some segments so that they can be accommodated by the models. This process is particularly vital in automatic meeting minute generation as most meetings are usually 30 minutes or more. The segmentation module also utilizes a pre-trained language model, specifically Longformer [4], yielding accurate segmentation results with a small amount of training data.

The argument mining module formulates a minute from topic-specific summarized texts, while reorganizing them so that they are consistent in structure. To compose such structures in a meeting minute, we first applied our argument mining(2) parser [5] which predicts an argumentative label for each sentence and the relationship between sentences. We then structured the minutes using predicted labels and relations in accordance with pre-defined formatting rules.

The generated minutes were evaluated based on the following three metrics: adequacy, grammatical correctness, and fluency. Each metric was assessed by two annotators and is based on a Likert Scale of 1 to 5, where 5 is the best result. Adequacy denotes whether a minute adequately captures the major topics discussed in the meeting, and grammatical correctness looks at the grammatically consistency of the minutes. Fluency measures whether a minute consists of fluent, coherent, and easy-to-read texts.

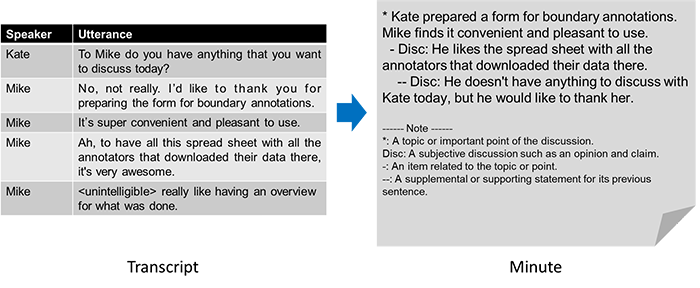

Table 1 presents the average performance of our framework across 28 test set samples. As you can see from the table, it achieved around four out of five for all metrics which gave us the confidence that we can automatically generate minutes of reasonable quality. Figure 2 is a portion of the minutes generated with our technology and the corresponding source transcript, and shows how the automatically generated minutes are well-structured and intelligible to those involved.

Table 1. Results of a manual evaluation by two annotators

| Adequacy | Grammatical correctness | Fluency | |

|---|---|---|---|

| Hitachi | 4.25 | 4.34 | 3.93 |

Figure 2. Example of our generated minute and its corresponding meeting transcript

In this blog, we introduced an automatic meeting minute generation framework that can formulate easy-to-read meeting minutes with little training data required compared to conventional summarization models. Going forward, we will actively take steps to help accelerate business operations using this framework and other various Hitachi’s AI technologies.