26 January 2022

Shunsuke Minusa

Research & Development Group, Hitachi, Ltd.

Takeshi Tanaka

Research & Development Group, Hitachi, Ltd.

Hiroyuki Kuriyama

Research & Development Group, Hitachi, Ltd.

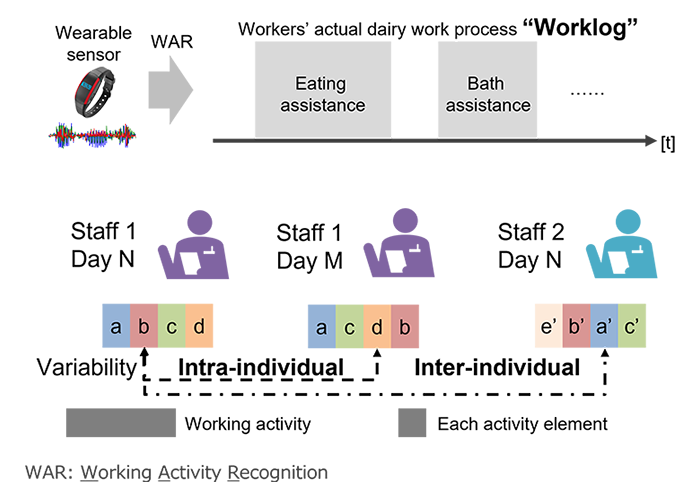

The widespread use of wearable sensors in recent years has enabled activity recognition (AR) to be studied in depth. In AR, sensor data such as accelerometer readings are processed to recognize movement patterns, and this approach has been successfully applied to simple activities with characteristic motion, such as walking [1] or hand movements. As part of our goal of using AR to improve worker safety and security and to raise productivity and resilience, we are investigating how movement data can be processed to recognize more complex activities. Recognizing movement patterns in complex activities such as work requires AR of mid-level working activities (e.g., eating assistance by caregivers). Such recognition would enable us to create and understand “worklogs” that show what is being done and how much time is spent on each activity during a given work shift (Figure 1). However, despite the demand, working activity recognition (WAR) still faces numerous challenges, particularly in fields where large differences may arise between planned and actual work, such as caregiving and construction.

Figure 1. Example of a daily work process “Worklog” and the diversity of working activities

The primary challenges in WAR are (i) ambiguity and (ii) diversity (Figure 1). Unlike simple activities, each working activity includes several activity elements, and their definitions are ambiguous. It is therefore difficult to apply conventional AR methods, in which experts create specific hand-crafted features to identify each activity [2]. In addition, the order and characteristics of the activity elements in a working activity differ greatly from person to person, and even for the same person. Thus, even if large ground-truth datasets are collected, achieving sufficient recognition accuracy for working activities remains difficult.

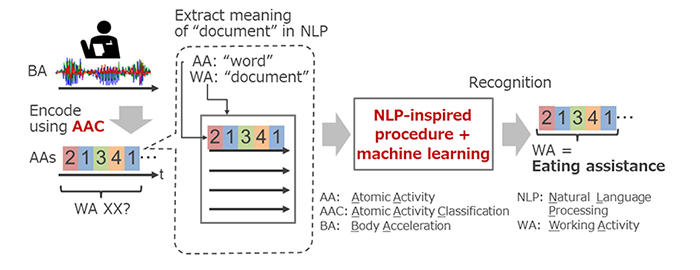

To overcome these challenges, we proposed a novel WAR method that draws an analogy from natural language processing (NLP) [3]. In NLP, the meaning of an entire document can be captured from the combination of words it contains. In our NLP-inspired model, we assumed that working activities are composed of activity elements (atomic activities) in the same way that documents are composed of words in NLP [4]. The proposed method consists of two main parts: atomic activity classification (AAC), an unsupervised encoding of the activity elements, and NLP-inspired recognition.
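The document/word analogy can be made concrete with a bag-of-words view. The Python sketch below rests on our own assumptions: each atomic activity is an integer ID from a hypothetical vocabulary of `num_atomic` IDs, a segment of work is a list of such IDs, and the helper names `bag_of_atomic_activities` and `document_term_matrix` are illustrative rather than taken from the paper.

```python
from collections import Counter
from typing import List, Sequence


def bag_of_atomic_activities(segment: Sequence[int], num_atomic: int) -> List[int]:
    """Count each atomic-activity ID in one segment (segment = document, ID = word)."""
    counts = Counter(segment)  # missing IDs read back as 0
    return [counts[k] for k in range(num_atomic)]


def document_term_matrix(segments: Sequence[Sequence[int]],
                         num_atomic: int) -> List[List[int]]:
    """One bag-of-words row per segment, one column per atomic-activity ID."""
    return [bag_of_atomic_activities(seg, num_atomic) for seg in segments]


if __name__ == "__main__":
    # Hypothetical atomic-activity IDs (vocabulary 0..4) for two work segments.
    segments = [[0, 0, 3, 1, 3, 3], [2, 4, 4, 2, 1]]
    for row in document_term_matrix(segments, num_atomic=5):
        print(row)  # prints [2, 1, 0, 3, 0] then [0, 1, 2, 0, 2]
```

Because counting discards order, this representation is insensitive to the order in which atomic activities occur. That is one plausible reason a document-style view can tolerate the person-to-person variation in order described above, although this is our interpretation rather than a result reported in [3].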

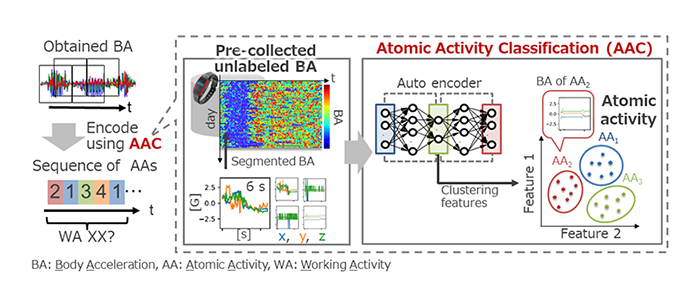

AAC encodes sensor data into a sequence of atomic activities using deep clustering [5], which performs feature extraction and clustering simultaneously (Figure 2). NLP-inspired recognition then recognizes working activities (e.g., eating assistance) from the atomic activity sequences using an NLP topic model and a machine learning method (Figure 3). In this way, the proposed method reduces the impact of ambiguity and diversity in working activities without requiring expert-driven feature extraction or a large ground-truth dataset.

Figure 2. Overview of the encoding of sensor data into atomic activities (AAs) using atomic activity classification (AAC)
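The AAC step can be approximated in a few lines if the deep clustering of [5] is swapped for plain k-means. The sketch below is a stand-in under stated assumptions, not the AAC model itself: it cuts a single-axis signal into fixed windows (`width` and `step` are hypothetical parameters), clusters the raw window vectors with Lloyd's k-means, and treats each cluster index as an atomic-activity ID. Function names such as `kmeans` and `encode` are illustrative.

```python
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def sliding_windows(signal: Sequence[float], width: int, step: int) -> List[Vector]:
    """Cut a 1-D sensor signal into fixed-width (possibly overlapping) windows."""
    return [list(signal[i:i + width]) for i in range(0, len(signal) - width + 1, step)]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point: Sequence[float], centroids: Sequence[Vector]) -> int:
    """Index of the centroid closest to point (ties go to the lowest index)."""
    return min(range(len(centroids)), key=lambda c: squared_distance(point, centroids[c]))


def kmeans(points: List[Vector], k: int, iters: int = 50,
           seed: int = 0) -> Tuple[List[Vector], List[int]]:
    """Lloyd's k-means, a plain stand-in for deep clustering (no learned features)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [nearest(p, centroids) for p in points]  # assignment step
        for c in range(k):                                 # update step
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


def encode(signal: Sequence[float], centroids: Sequence[Vector],
           width: int, step: int) -> List[int]:
    """Encode a signal as atomic-activity IDs: nearest-centroid index per window."""
    return [nearest(w, centroids) for w in sliding_windows(signal, width, step)]


if __name__ == "__main__":
    # Synthetic signal (hypothetical): a still phase followed by a vigorous phase.
    signal = [0.0] * 16 + [5.0, -5.0] * 8
    windows = sliding_windows(signal, width=4, step=4)
    centroids, labels = kmeans(windows, k=2)
    print(labels)
```

Deep clustering instead learns the feature space and the cluster assignments jointly, which is what lets AAC avoid hand-crafted features. K-means on raw windows keeps only the unsupervised part of that idea and is sensitive to the phase of the signal within each window.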

Figure 3. Overview of the recognition of working activities (WAs) from the sequence of atomic activities (AAs)
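For the recognition step, the article names a topic model and a machine learning method without specifying them here. The sketch below assumes latent Dirichlet allocation (LDA), fitted by collapsed Gibbs sampling, as the topic model and a nearest-centroid classifier over per-segment topic proportions as the machine learning method. Both are our substitutions, as are the hyperparameters `alpha`, `beta`, and `iters` and the labels `WA-1`/`WA-2`. Each segment of atomic-activity IDs is treated as a document.

```python
import random
from typing import Dict, List, Sequence

Matrix = List[List[float]]

# Assumption: LDA (collapsed Gibbs sampling) stands in for the topic model and a
# nearest-centroid classifier stands in for the machine learning method.


def fit_lda(docs: Sequence[Sequence[int]], num_topics: int, vocab_size: int,
            alpha: float = 0.5, beta: float = 0.1, iters: int = 200,
            seed: int = 0) -> Matrix:
    """Fit LDA by collapsed Gibbs sampling and return the topic-word matrix phi."""
    rng = random.Random(seed)
    topics = range(num_topics)
    ndk = [[0] * num_topics for _ in docs]     # tokens per (document, topic)
    nkw = [[0] * vocab_size for _ in topics]   # tokens per (topic, word)
    nk = [0] * num_topics                      # tokens per topic
    z = [[rng.randrange(num_topics) for _ in doc] for doc in docs]  # random start
    for d, doc in enumerate(docs):
        for w, k in zip(doc, z[d]):
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Take the token out of the counts, then resample its topic.
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta)
                           / (nk[t] + vocab_size * beta) for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
    # Smoothed topic-word distributions; each row sums to 1.
    return [[(nkw[t][w] + beta) / (nk[t] + vocab_size * beta)
             for w in range(vocab_size)] for t in topics]


def infer_theta(doc: Sequence[int], phi: Matrix, alpha: float = 0.5,
                iters: int = 100, seed: int = 0) -> List[float]:
    """Fold-in Gibbs sampling: topic proportions of one new document, phi fixed."""
    rng = random.Random(seed)
    num_topics = len(phi)
    topics = range(num_topics)
    z = [rng.randrange(num_topics) for _ in doc]
    counts = [0] * num_topics
    for k in z:
        counts[k] += 1
    for _ in range(iters):
        for i, w in enumerate(doc):
            counts[z[i]] -= 1
            weights = [(counts[t] + alpha) * phi[t][w] for t in topics]
            z[i] = rng.choices(topics, weights=weights)[0]
            counts[z[i]] += 1
    total = len(doc) + num_topics * alpha
    return [(counts[t] + alpha) / total for t in topics]


def fit_centroids(thetas: Sequence[Sequence[float]],
                  labels: Sequence[str]) -> Dict[str, List[float]]:
    """Mean topic-proportion vector for each working-activity label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for theta, label in zip(thetas, labels):
        acc = sums.setdefault(label, [0.0] * len(theta))
        for t, value in enumerate(theta):
            acc[t] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(theta: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Label whose centroid is closest to theta (squared Euclidean distance)."""
    return min(centroids,
               key=lambda lab: sum((a - b) ** 2 for a, b in zip(theta, centroids[lab])))


if __name__ == "__main__":
    # Hypothetical segments over 6 atomic-activity IDs with two hypothetical labels.
    train = [[0, 1, 2, 0, 1], [1, 2, 2, 0, 0], [3, 4, 5, 3, 4], [5, 5, 4, 3, 3]]
    labels = ["WA-1", "WA-1", "WA-2", "WA-2"]
    phi = fit_lda(train, num_topics=2, vocab_size=6)
    centroids = fit_centroids([infer_theta(doc, phi) for doc in train], labels)
    print(predict(infer_theta([0, 2, 1, 1, 0], phi), centroids))
```

Gibbs sampling is stochastic and can settle in poor local modes on tiny data, so a practical version would use more data, several restarts, or an established library implementation of the topic model.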

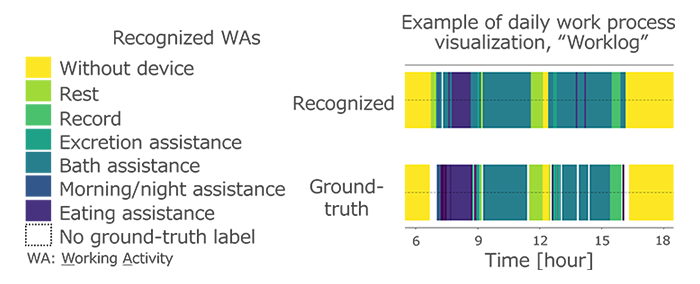

We evaluated our method on a caregiving work dataset collected from actual caregiving workers [3]. The proposed method achieved recognition accuracy equivalent to or better than that of the conventional method based on hand-crafted feature extraction [2]. The results also showed that the worklogs generated by the proposed method captured an overview of the daily work process (average accuracy of 71.2%) (Figure 4).

Figure 4. Example of a visualized daily work process “Worklog” for several caregiving staff members
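A worklog of the kind shown in Figure 4 can be assembled from per-window predictions by merging consecutive windows that share a label. The sketch below assumes fixed-length, non-overlapping windows of `window_s` seconds, hypothetical labels (`WA-1`, `WA-2`), and per-window agreement with ground truth as the accuracy measure; the exact metric behind the 71.2% figure may differ.

```python
from dataclasses import dataclass
from itertools import groupby
from typing import Dict, List, Sequence


@dataclass
class Entry:
    """One worklog row: an activity and the time span it occupied."""
    activity: str
    start_s: float
    end_s: float

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def build_worklog(labels: Sequence[str], window_s: float,
                  shift_start_s: float = 0.0) -> List[Entry]:
    """Merge runs of identical per-window labels into worklog entries."""
    log: List[Entry] = []
    t = shift_start_s
    for activity, run in groupby(labels):
        n = sum(1 for _ in run)  # number of consecutive windows in this run
        log.append(Entry(activity, t, t + n * window_s))
        t += n * window_s
    return log


def time_per_activity(log: Sequence[Entry]) -> Dict[str, float]:
    """Total seconds spent on each activity across the worklog."""
    totals: Dict[str, float] = {}
    for e in log:
        totals[e.activity] = totals.get(e.activity, 0.0) + e.duration_s
    return totals


def window_accuracy(predicted: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of windows whose predicted label matches the ground truth (assumed metric)."""
    if len(predicted) != len(truth) or not truth:
        raise ValueError("need two non-empty label sequences of equal length")
    return sum(p == g for p, g in zip(predicted, truth)) / len(truth)


if __name__ == "__main__":
    # Hypothetical one-minute predictions for a short stretch of a shift.
    predicted = ["WA-1", "WA-1", "WA-2", "WA-2", "WA-2", "WA-1"]
    truth = ["WA-1", "WA-1", "WA-2", "WA-1", "WA-2", "WA-1"]
    log = build_worklog(predicted, window_s=60.0)
    for e in log:
        print(f"{e.activity}: {e.start_s:.0f}-{e.end_s:.0f} s ({e.duration_s:.0f} s)")
    print(time_per_activity(log))
    print(f"window accuracy: {window_accuracy(predicted, truth):.3f}")
```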

Using the proposed method, we showed that it is possible to recognize working activities and create a worklog from a relatively small amount of ground-truth data that can be collected in actual facilities, without relying on hand-crafted feature extraction by experts. These results indicate that the proposed method has the potential to contribute to future WAR implementations in actual workplaces. For more details, please refer to our paper [3].