20 October 2021

Kaichiro Nishi

Research & Development Group, Hitachi, Ltd.

Factories and distribution warehouses are increasingly automating work in response to labor shortages caused by a declining birthrate and an aging population. Robots are one way to automate this work, but setting each one up, for example tuning its acceleration so that operations remain stable, is quite time-consuming.

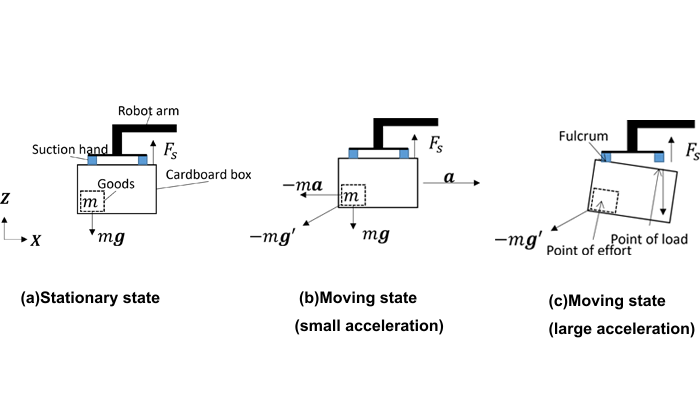

Figure 1 shows a robot exerting a greater force than expected on a grasped object during transport, causing the object to fall from the robot’s suction hand. Objects tend to fall when, for example, the goods are packed unevenly, the object is too heavy, or the robot accelerates too quickly. Therefore, when transporting an unknown object, the robot has to move at low acceleration, planned for the assumed maximum weight and center of gravity of the object, which significantly reduces throughput.
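To make the relationship between weight, acceleration, and holding force concrete, here is a minimal sketch using a simplified point-mass model. It assumes a purely vertical lift and ignores the tilting moment caused by an off-center center of gravity. The function names and the 30 N holding force are hypothetical illustrations, not values from our system.

```python
G = 9.81  # gravitational acceleration in m/s^2


def required_holding_force(mass_kg: float, accel_up_ms2: float) -> float:
    """Force the suction hand must supply to accelerate a hanging object upward.

    Simplified model (assumption): F = m * (g + a), point mass, vertical motion.
    """
    return mass_kg * (G + accel_up_ms2)


def max_upward_accel(mass_kg: float, holding_force_n: float) -> float:
    """Largest upward acceleration the hand can sustain, clamped at zero.

    Obtained by solving m * (g + a) <= F for a.
    """
    return max(0.0, holding_force_n / mass_kg - G)


# Hypothetical holding-force limit, for illustration only.
HOLDING_FORCE_N = 30.0

for mass in (1.0, 2.0, 3.0):
    limit = max_upward_accel(mass, HOLDING_FORCE_N)
    print(f"mass={mass:.1f} kg -> max upward acceleration {limit:.2f} m/s^2")
```

Under this model the allowable acceleration shrinks as the object gets heavier, which is why a plan sized for the heaviest possible object is needlessly slow for lighter ones.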

In order to reduce the labor time required for adjustments and improve the throughput, we developed a robot motion-planning method that can control the acceleration in accordance with the state of the object to be transported.

Figure 1: Behavior of object transferred by robot.

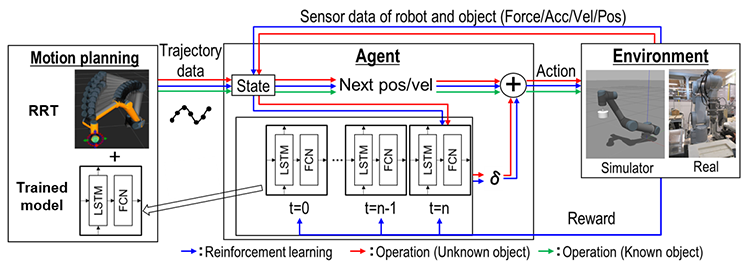

Figure 2 gives an overview of our motion-planning method. The system controls the robot by modifying its trajectory in real time on the basis of sensor data and the trajectory data generated by the existing motion-planning system. The system operates in two phases.
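One simple way to picture "modifying a trajectory to lower its acceleration" is uniform time scaling: the path stays the same, but the samples are played back more slowly. The sketch below is only an illustration of that idea and is not our learned method. The function names and the sampled one-axis trajectory are hypothetical.

```python
import math


def peak_acceleration(positions, dt):
    """Peak absolute acceleration from central finite differences.

    Requires at least three position samples spaced dt seconds apart.
    """
    return max(
        abs(positions[i - 1] - 2.0 * positions[i] + positions[i + 1]) / dt**2
        for i in range(1, len(positions) - 1)
    )


def stretched_sample_period(positions, dt, accel_limit):
    """Return a sample period that keeps the same path within accel_limit.

    Stretching time by a factor s divides accelerations by s**2, so
    s = sqrt(peak / limit). The trajectory is never sped up (s >= 1).
    """
    peak = peak_acceleration(positions, dt)
    scale = max(1.0, math.sqrt(peak / accel_limit))
    return dt * scale


# Hypothetical one-axis trajectory with a constant 4 m/s^2 acceleration.
dt = 0.1
positions = [0.5 * 4.0 * (i * dt) ** 2 for i in range(11)]

new_dt = stretched_sample_period(positions, dt, accel_limit=1.0)
print(f"sample period {dt:.2f} s -> {new_dt:.2f} s")
```

Because acceleration falls with the square of the stretch factor, a modest slowdown removes a large share of the inertial load, at the cost of a longer transfer.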

Figure 2: Overview of robot motion-planning method.

In the first phase, the robot learns the motion plan in the simulator, as shown in Figure 3. Learning in the simulator makes it possible to try many different movements without damaging the real robot. Moreover, learning a variety of trajectories makes the system robust to changes in the environment and in the objects to be transported. In addition, dangerous motions can be learned in advance, which keeps the robot from making these motions when the method is applied to tasks on actual machines.
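As a rough illustration of what "learning a variety of trajectories" can look like in a simulator, the sketch below draws randomized training episodes. Everything in it is an assumption made for illustration: the `Episode` fields, the value ranges, and the idea of randomizing the object's mass and center-of-gravity offset alongside the start and goal positions.

```python
import random
from dataclasses import dataclass


@dataclass
class Episode:
    """One simulated transfer task (hypothetical structure)."""
    mass_kg: float        # object mass
    cog_offset_m: float   # horizontal offset of the center of gravity
    start_xyz: tuple      # pick position in the robot frame
    goal_xyz: tuple       # place position in the robot frame


def sample_episode(rng: random.Random) -> Episode:
    """Draw one episode from illustrative, made-up parameter ranges."""
    def point():
        return tuple(rng.uniform(-0.5, 0.5) for _ in range(3))

    return Episode(
        mass_kg=rng.uniform(0.5, 3.0),
        cog_offset_m=rng.uniform(0.0, 0.05),
        start_xyz=point(),
        goal_xyz=point(),
    )


# A seeded generator keeps the set of training episodes reproducible.
rng = random.Random(0)
episodes = [sample_episode(rng) for _ in range(1000)]
print(len(episodes), "episodes sampled")
```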

Figure 3: 6-DoF-arm robot in simulator environment.

The second phase is to have the robot execute the learned operation in a real machine environment. Since the configuration of the robot in the simulator and on the actual machine is the same, the robot can execute the learned behavior on the actual machine without any adjustments.

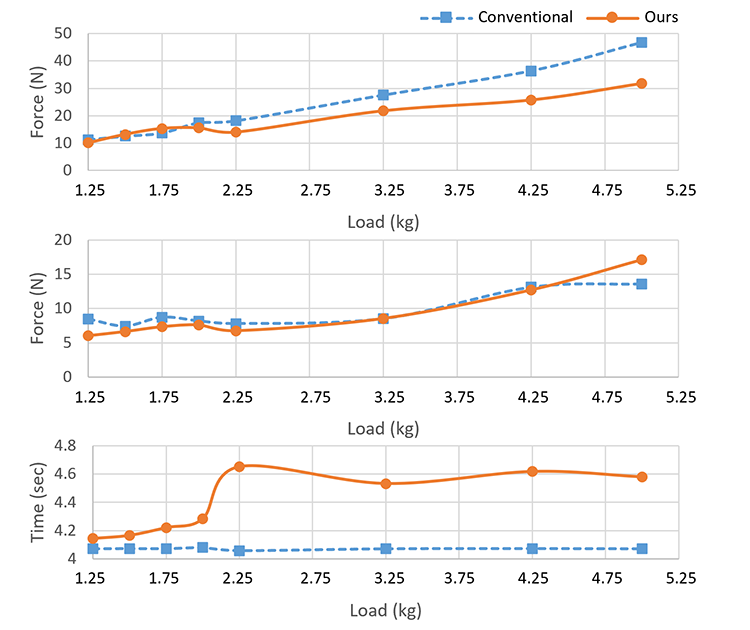

To determine the effectiveness of our method, we compared its performance with that of a conventional method on an evaluation trajectory that was not used in learning. Figure 4 shows the force generated on the transported object and the transfer time when the transfer operation was executed. Our method changes its behavior once the object weighs 2.25 kg or more: for this evaluation trajectory, it determined that at these weights the acceleration had to be reduced in order to suppress the force applied to the transported object.
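The trade-off between acceleration and transfer time can be illustrated with a textbook rest-to-rest move. The sketch assumes a triangular velocity profile (constant acceleration over the first half of the distance, equal deceleration over the second half), which is a simplification and not the profile used by our method. The distance and acceleration values are hypothetical.

```python
import math


def transfer_time(distance_m: float, accel_ms2: float) -> float:
    """Rest-to-rest travel time for a triangular velocity profile.

    Half the distance is covered while accelerating:
    d / 2 = 0.5 * a * (T / 2) ** 2, hence T = 2 * sqrt(d / a).
    """
    return 2.0 * math.sqrt(distance_m / accel_ms2)


# Hypothetical 1 m transfer at several acceleration levels.
for accel in (4.0, 2.0, 1.0):
    print(f"a={accel:.1f} m/s^2 -> T={transfer_time(1.0, accel):.2f} s")
```

In this model, cutting the acceleration to a quarter only doubles the transfer time, so reducing acceleration just for heavy objects costs less throughput than running every transfer at the most conservative setting.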

Figure 4: Data output from sensors and transport time.

You can check out the actual robot in motion in this YouTube video.

We have developed a motion-planning method for transport work and demonstrated its effectiveness through verification on an actual machine. To further advance the automation of work, it is important both to reduce the labor time required for robot installation adjustments and to increase throughput. In the future, we plan to extend this automation by developing motion-planning technology for other operations such as assembly and welding.

This work was presented at the 2021 IEEE International Conference on Robotics and Automation (ICRA2021) [1].