- Kazushi YAMASHINA

- Researcher, Center for Technology Innovation - Controls and Robotics, Research & Development Group, Hitachi, Ltd.

- Hiroshi ITO

- Researcher, Center for Technology Innovation - Controls and Robotics, Research & Development Group, Hitachi, Ltd.

*Positions and affiliations are as of the date of publication.

Robots are widely used in factories and for other standardized tasks. Because engineers have to program each and every operation, robots are limited to specific applications and cannot quickly adapt to the unexpected. Some junior researchers are addressing this problem by advancing technologies that combine deep learning and field-programmable gate arrays (FPGAs), with the aim of building a society where humans and robots coexist.

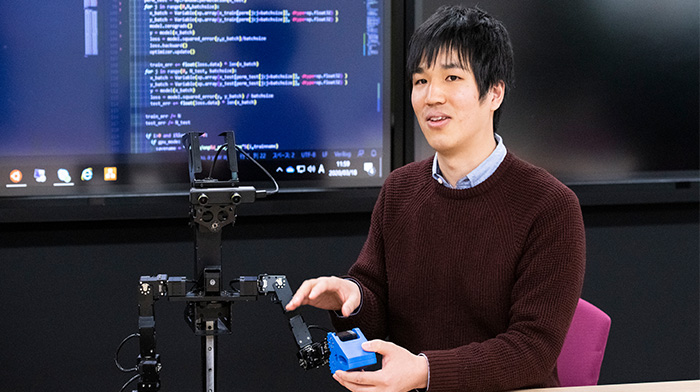

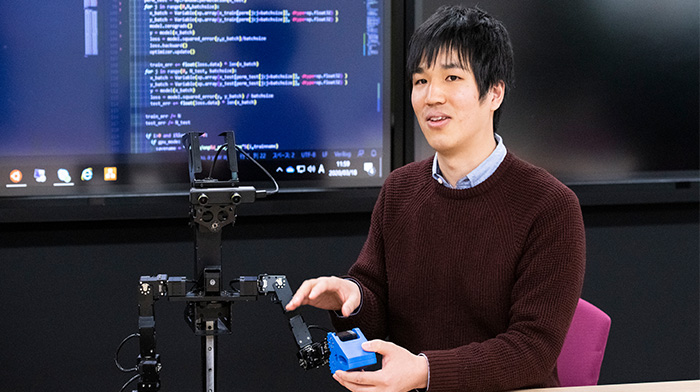

We talked with researchers Hiroshi Ito and Kazushi Yamashina, two members of the Robotics Research Department of the Center for Technology Innovation - Mechanical Engineering at Hitachi, Ltd., about this endeavor.

(September 29, 2021)

More flexible and high-speed robot movement

- Ito

- My research focuses on deep learning, in other words, using artificial intelligence (AI) to teach robots how to move. For example, let's say I want to teach a robot to pick up a plastic bottle. With a conventional robot, people write programs for every necessary movement: recognizing the plastic bottle with a camera, determining its location, moving into position, and reaching out its arm. I am developing technologies that teach a robot how to move its arms and legs, either by remote control or by physically guiding its hand as you would a child's. The robot learns to reproduce those movements while taking its current state into account through camera and motor data. As a result, it can adapt to even small changes in its state. For instance, the aim of my research is for a robot that has only been taught to pick up a plastic bottle to also be able to pick up other things, such as a thermos or a piece of candy.

- Yamashina

- Hiroshi is validating the principles of deep learning-based motion and building its foundations. I, on the other hand, am developing technologies to put his work into practice. Deep learning computations take longer than conventional processing, so I am in charge of building the systems and embedded computers that accelerate them.

As far as I know, robotics research has a considerable gap between algorithm development and practical implementation. It's not unusual for computing and executing a single movement to take more than 10 seconds. My expertise is not in robotics but in embedded systems and computer architecture, and that is the perspective I bring to reducing the time these calculations take. I believe the purpose of our work is to drive performance improvements and system integration grounded in practical implementation.

Deep learning to eliminate motion programming

- Ito

- Our team first developed a door-opening robot*1 as part of my research on controlling an entire robot without any motion programming, using deep learning to handle complex movements. This robot had to recognize the door, move into position, reach out its arm, grasp and turn the doorknob, open the door, and then pass through. By being taught these tasks via remote control or by having its arms and legs guided directly, the robot learned to open doors autonomously.

In the past, robots used AI for tasks such as recognizing an object and detecting its position with a camera, with the processing confined to a single sensor. Our door-opening robot, by contrast, takes in and learns from camera images, joint angles, and motor current values, which indicate the load on each motor. This lets it adapt to even small changes in its state.

We started small in developing the door-opening robot. Initially, our feasibility tests used only a single right robot arm and a simple USB camera. Because these tests went well, we next built an adult-sized humanoid robot to research the door-opening task. Once that robot successfully completed the task, we built the smaller robot we use now.

By far the most difficult part of the development was integrating the various systems. Robot motion involves not only microcomputers, mechanisms, and control software but also AI, and the robot won't move as intended if any one of these systems has an error. For example, if the motor control doesn't work properly, the robot's movements will be jerky no matter what commands the AI sends. At first, our robot didn't move as expected even though the AI was producing the correct outputs. To find the problem, we had to start over and connect the systems one at a time.

Higher-speed deep learning to achieve a robot capable of catching falling objects

- Yamashina

- As a student, I specialized in embedded systems research, specifically FPGA applications and design methods. The head of our department at the time took an interest in my field. I also thought I could create something interesting by combining FPGA and robotics technologies, which is why I became involved in this research project.

- Ito

- After I had built the door-opening robot, the head of our department at the time considered what to do next. He wanted to overcome the time constraints of AI computation and pursue real-time operation. Kazushi and I began collaborating when he suggested that we work together.

- Yamashina

- We first succeeded in using an FPGA to accelerate inference, the process of running a trained deep learning model to obtain results. We did this by replacing the part of the processing ordinarily executed in software with dedicated computational hardware that runs faster. However, there were already many examples of this kind of research, so it wasn't particularly unique. Hiroshi and I set out to discover what pitfalls to watch for and which deep learning methods were best suited to controlling a robot in real time using FPGA-based inference.

Latency is critical in real-time robot control. We have to pay close attention to how the situation changes during the time it takes to transmit a signal, compute the results, and return them. For example, a robot grabbing an object will crush it if it squeezes too tightly. The relevant latency here is the time it takes for the robot to capture camera images, process the image data, and determine whether it is about to crush what it is holding. This generally takes several hundred milliseconds, roughly a hundred times slower than human reflexes. People know immediately whether they are about to break an egg in their hand. What drives us in developing this technology is the goal of giving robots this kind of sensitivity so they can move more safely and quickly in the future.

- Ito

- Kazushi proposed our first research prototype, and we discussed ideas as we built it. The prototype was a robot that sees a falling object and immediately reaches out to catch it. The first step was a simple test: creating a robot that could catch a falling object using a neural network, a mathematical model made up of artificial neurons, or nodes, connected to one another. However, running a standard neural network on a conventional computer was too slow, and the robot failed to catch the falling object. I handed the robot I had built over to Kazushi so he could try to make it faster. He not only implemented the neural network and other core functions on an FPGA, but also accelerated the camera processing. He then kept the robot from crushing objects by adding sensors to its fingertips and teaching it to hold both soft and hard things. As a result, our first joint project produced a robot that can reach out and catch falling objects of varying hardness without crushing them.

- Yamashina

- Catching a falling object is very difficult even for a human being. I think a robot able to do it more precisely than a person would make quite an impact. Even though the task is simple, this robot can recognize an object and feed back a decision in about a millisecond. However, it is a compact robot that provides feedback using only the minimum necessary functions. I want to achieve the same with more complex control.
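To make the gap between "several hundred milliseconds" and "about a millisecond" concrete, here is a rough worked example of our own, not a figure from the interview. It assumes an object dropped from rest, free fall with $g \approx 9.81\ \text{m/s}^2$, no air resistance, and a reaction delay $t$ measured from the moment of release; 300 ms is just one illustrative value within "several hundred milliseconds."

$$
d(t) = \tfrac{1}{2} g t^2
$$

$$
d(300\ \text{ms}) = \tfrac{1}{2}(9.81)(0.3)^2 \approx 0.44\ \text{m}, \qquad d(1\ \text{ms}) = \tfrac{1}{2}(9.81)(0.001)^2 \approx 4.9 \times 10^{-6}\ \text{m}
$$

Under these assumptions, a robot that needs 300 ms to react would see the object fall roughly 44 cm before it could respond, while at 1 ms the object has barely moved.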

Unique robot network to enable reflex action

- Yamashina

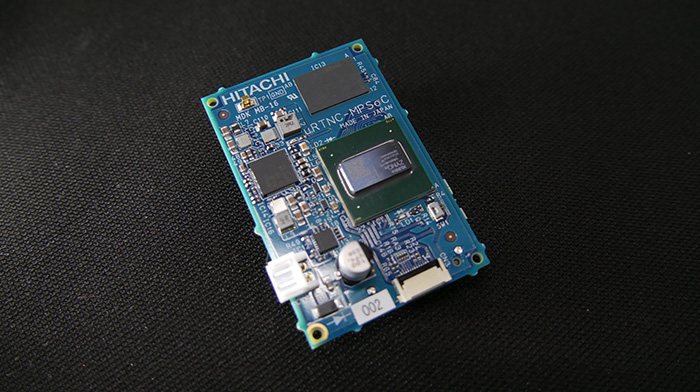

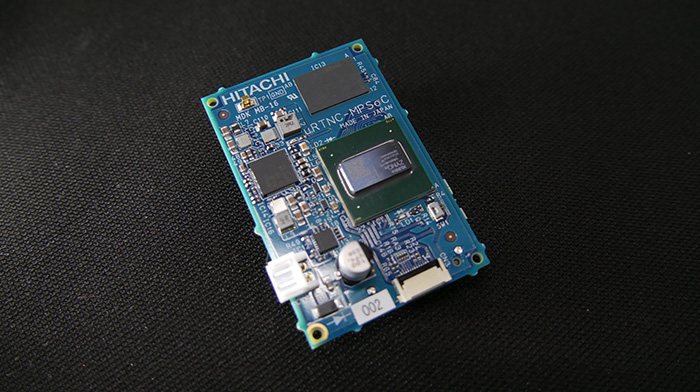

- Up to that point we had focused on accelerating inference for catching falling objects, but our next development goal was optimizing the system as a whole. FPGAs are specialized devices that only certain engineers can work with, so we built a system to address this. There are technologies that generate FPGA circuits from software code. We used them to first build a system for accelerating inference that makes it easier for others to develop FPGA designs. In addition, we adopted a method known as hardware-software codesign. We embedded the accelerator hardware in a software-controlled system and developed a controller that applications can operate without ever worrying about the hardware. This controller, called an edge controller, is the size of a person's palm and can be built into a robot's arm or hand.

Photograph of an edge controller: a 40 mm × 60 mm circuit board equipped with a hybrid FPGA-CPU chip and various interfaces (such as USB 3.0)
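The application-facing side of hardware-software codesign can be sketched as an interface that application code calls without knowing what executes it. This is a minimal Python illustration under our own assumptions: `InferenceBackend`, `SoftwareBackend`, and `control_step` are hypothetical names, the "network" is a single dense layer with ReLU, and no real FPGA interface is shown. An FPGA-backed class would implement the same `infer()` method.

```python
from typing import Protocol, Sequence


class InferenceBackend(Protocol):
    """Anything that can run one inference step (hypothetical interface)."""

    def infer(self, inputs: Sequence[float]) -> list[float]:
        ...


class SoftwareBackend:
    """Reference CPU implementation: one dense layer followed by ReLU.

    A hypothetical FPGA backend would expose the same infer() method but
    hand the arithmetic to dedicated hardware instead.
    """

    def __init__(self, weights: list[list[float]], bias: list[float]) -> None:
        self.weights = weights
        self.bias = bias

    def infer(self, inputs: Sequence[float]) -> list[float]:
        outputs = []
        for row, b in zip(self.weights, self.bias):
            total = sum(w * x for w, x in zip(row, inputs)) + b
            outputs.append(max(0.0, total))  # ReLU activation
        return outputs


def control_step(backend: InferenceBackend, sensors: Sequence[float]) -> list[float]:
    """Application code: turn sensor readings into motor commands.

    It depends only on infer(), so swapping the CPU backend for an
    accelerator does not change this function.
    """
    return backend.infer(sensors)


if __name__ == "__main__":
    cpu = SoftwareBackend(weights=[[0.5, -1.0], [1.0, 1.0]], bias=[0.0, 0.1])
    print(control_step(cpu, [2.0, 0.5]))
```

The design choice being illustrated is that the application layer never imports anything hardware-specific; only the backend object changes.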

- Ito

- A robot can move simply by feeding what my neural network has learned into the FPGA. The system is very user-friendly.

- Yamashina

- Another system design issue is that the configuration has to be rearranged whenever the number of devices built into the robot changes, such as sensors and actuators (devices that convert energy into motion). This is inefficient because the number of input and output paths changes as well, so a more flexible, adaptable design is preferable. That is why our team is adopting a network-type system we have been developing for some time. We implemented the original communication protocols developed for this network directly in hardware. Because different devices can be quickly connected and communicate digitally with one another, the network scales as the number of input and output devices changes.
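The idea of a network where devices can be added or removed without reworking the I/O paths can be illustrated with a toy model. This Python sketch is hypothetical throughout: the team's actual protocol is implemented in hardware and is not described here, so the frame layout (a 1-byte device ID plus a float32 value), `IoNetwork`, `Device`, and `decode` are our own illustrative assumptions.

```python
import struct
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical frame layout: 1-byte device ID + little-endian float32 value (5 bytes).
FRAME = struct.Struct("<Bf")


@dataclass
class Device:
    device_id: int
    name: str
    read: Callable[[], float]  # returns the device's latest reading


@dataclass
class IoNetwork:
    """Toy network: devices attach or detach at run time without rewiring."""

    devices: dict[int, Device] = field(default_factory=dict)

    def attach(self, device: Device) -> None:
        if device.device_id in self.devices:
            raise ValueError(f"duplicate device id {device.device_id}")
        self.devices[device.device_id] = device

    def detach(self, device_id: int) -> None:
        self.devices.pop(device_id, None)

    def poll(self) -> bytes:
        """Read every attached device and pack one frame per device."""
        return b"".join(FRAME.pack(d.device_id, d.read()) for d in self.devices.values())


def decode(packet: bytes) -> dict[int, float]:
    """Receiver side: split a packet back into {device_id: value}."""
    return {dev_id: value for dev_id, value in FRAME.iter_unpack(packet)}


if __name__ == "__main__":
    net = IoNetwork()
    net.attach(Device(1, "joint_angle", lambda: 0.5))
    net.attach(Device(2, "motor_current", lambda: 1.25))
    print(decode(net.poll()))
    # Adding a fingertip sensor needs no change to poll() or decode().
    net.attach(Device(3, "fingertip_force", lambda: 2.0))
    print(decode(net.poll()))
```

Because each frame carries its own device ID, the receiver does not need a fixed list of inputs; a new sensor simply shows up as another entry.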

The overall system achieves high-speed response by linking, over a real-time network, an I/O controller that handles the input and output of motors and sensors with an edge controller that handles deep learning processing. Above these sits a master controller that controls the entire robot.

The structure of the human nervous system gave us the idea of separating the master and edge controllers. The system hierarchy is divided in the same way people have a cerebrum and peripheral nerves: the edge controller, like the peripheral nerves, handles what the master controller, which plays the role of the cerebrum, is not designed to handle. The reason for this separation is the huge difference in the required processing speeds. Image processing on the master controller generally takes about 30 to 100 milliseconds, whereas reflexive motion control has to process data much faster. Controlling an industrial device, for example, may require a cycle of 100 microseconds (0.1 milliseconds).
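Treating each processing time quoted above as one control-cycle period $T$ (a simplification on our part), the corresponding loop rates $f = 1/T$ and the ratio between the slow and fast layers work out as follows:

$$
f_{\text{master}} = \frac{1}{30\ \text{ms}} \approx 33\ \text{Hz}\ \text{to}\ \frac{1}{100\ \text{ms}} = 10\ \text{Hz}, \qquad f_{\text{reflex}} = \frac{1}{0.1\ \text{ms}} = 10\ \text{kHz}
$$

$$
\frac{T_{\text{master}}}{T_{\text{reflex}}} = \frac{30\ \text{ms}}{0.1\ \text{ms}} = 300\ \text{to}\ \frac{100\ \text{ms}}{0.1\ \text{ms}} = 1000
$$

In other words, a 0.1 ms reflex loop would complete 300 to 1,000 cycles during a single image-processing pass on the master controller.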

- Ito

- In these terms, my system is a predictive one. Based on the current camera data and its own posture, the robot sequentially predicts how far to extend its hand toward the door at the next moment. It can predict what to do next, but it cannot react. If someone bumps into the robot or suddenly hands it something, for instance, it cannot respond to the interaction or the sudden disturbance. I want to create a machine that moves more like a human by integrating predictive and reflexive systems. To that end, I work on the predictive systems while Kazushi creates the reflexive ones. Integrating the two is key to achieving human-like movement, such as a robot not spilling a drop from a glass of water it has been handed, even when it is suddenly pushed.
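A minimal sketch of how a slow predictive layer and a fast reflexive layer might be combined, assuming a one-dimensional hand position, a simple step-toward-goal rule standing in for the learned predictor, and a force threshold as the reflex trigger. All names and parameters (`predictive_layer`, `reflex_layer`, `run`, `ratio`, `limit`, `gain`) are hypothetical; this is an illustration, not the team's control method.

```python
from dataclasses import dataclass


@dataclass
class ArmState:
    position: float = 0.0  # hypothetical 1-D hand position (arbitrary units)
    target: float = 0.0    # latest target proposed by the predictive layer


def predictive_layer(state: ArmState, goal: float, step: float = 1.0) -> float:
    """Slow layer (stand-in for a learned predictor): propose the next
    target, one step closer to the goal, based on the current state."""
    if abs(goal - state.position) <= step:
        return goal
    return state.position + step if goal > state.position else state.position - step


def reflex_layer(state: ArmState, force: float, limit: float, gain: float = 0.5) -> float:
    """Fast layer: track the current target, but if the external force
    exceeds the limit, hold position immediately instead of pushing on."""
    if abs(force) > limit:
        return state.position  # reflex: no need to wait for the slow layer
    return state.position + gain * (state.target - state.position)


def run(ticks: int, ratio: int, goal: float, forces: dict[int, float], limit: float = 5.0) -> list[float]:
    """Run the reflex layer every tick and the predictive layer every `ratio` ticks."""
    state = ArmState()
    trace = []
    for t in range(ticks):
        if t % ratio == 0:
            state.target = predictive_layer(state, goal)
        state.position = reflex_layer(state, forces.get(t, 0.0), limit)
        trace.append(state.position)
    return trace


if __name__ == "__main__":
    # A simulated push (force 9.0) arrives at tick 4.
    print(run(ticks=6, ratio=3, goal=10.0, forces={4: 9.0}))
```

The reflex layer reacts on the very tick the disturbance appears, while the predictive layer only updates the target every `ratio` ticks. With the inputs above, the hand holds position at tick 4 and resumes tracking at tick 5.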

- Yamashina

- People tend to question how important real-time reflexes are for a robot, but that is only because the potential hasn't been explored yet. I am sure there are many latent needs waiting to be discovered. One example is shaking hands. When someone reaches out a hand, it's difficult for a robot to reach out its own at the speed a person would find natural. Situations like this will probably cause stress as people begin to truly coexist with robots. Because FPGAs excel at input and output, I think they can solve problems we are not even aware of yet.

- Ito

- At present, people are fairly forgiving of what robots do, even when it's a bit strange. In the worlds we see in comics and movies, though, robots of various forms live ordinary lives alongside humans. That is the kind of world I want to create.

Robot development requiring both theory and practice

- Ito

- I studied in the Department of Electronics and Control Engineering at a college of technology, which teaches both mechanical and electrical engineering. Over five years, I mainly learned about machinery, spending most of my time designing mechanisms, drawing schematics, and operating a lathe, with only occasional work on the electrical side. I learned how to build robots when I entered the NHK College of Technology Robot Contest (Robocon), a nationwide competition of ideas and robots for college of technology students organized by Japan's public broadcaster. When I went on to further study, I knew I needed to learn about intelligent robots. I liked computers and programming, so I changed my focus and took the plunge into a department of informatics. I've loved building robots since I was young, and I feel like I go to the office to play every day.

- Yamashina

- I majored in computer science and entered the field of embedded systems when I joined my laboratory, where I began researching FPGAs. Robotics was not my area of expertise, but since my university days I had been publishing code on GitHub, a software development platform, and Hitachi staff apparently looked at it during the hiring process. I heard that my geekiness in actually writing code is what made me stand out [laughs].

- Ito

- I think college of technology graduates tend to excel at hands-on work, whereas university graduates, who have studied the theory in depth, are often stronger in theory.

- Yamashina

- I think this type of research requires both theoretical and practical approaches. With a purely theoretical approach, this kind of development would be impossible because no one would do the actual coding, or implementation. My team and I are always writing code, as I'm sure Hiroshi is too. Apart from external presentations and attending academic conferences, that routine work is most of what we do. We do everything ourselves, from circuit board design to FPGA logic design and implementation, as well as software design and implementation.

- Ito

- The best teams for building robots are made up of people versed in many different fields. The easiest teams to work with share a common foundation while each member specializes in a different field.

For humans and robots to coexist

- Ito

- We talk with many people about how to expand our research and what kinds of products could incorporate our work. The biggest challenge is quality assurance. Because AI is often regarded as a black box, I think the hardest part is guaranteeing operational accuracy and safety.

- Yamashina

- Of course, robots need to be safe for humans and robots to get along, but how we digitize human senses in robots and share that information as data is also important. I think it would be fantastic if we could use embedded systems and FPGA technologies to digitize and implement something like the five human senses in robots.

- Ito

- Building a robot is much like trying to create a human. The leader of a joint research project, who helped me a lot when I was a university student, told us that we needed to create a brain in order to learn about the brain. To build a robot that can do things using deep learning, we need to understand what is happening in the brain and how thought works. I hope to someday build a human-like robot.